January 2025

Single Resource Optimization, Organization-Wide Efficiency Reporting, and Enhanced Node Configuration

Major Features and Improvements

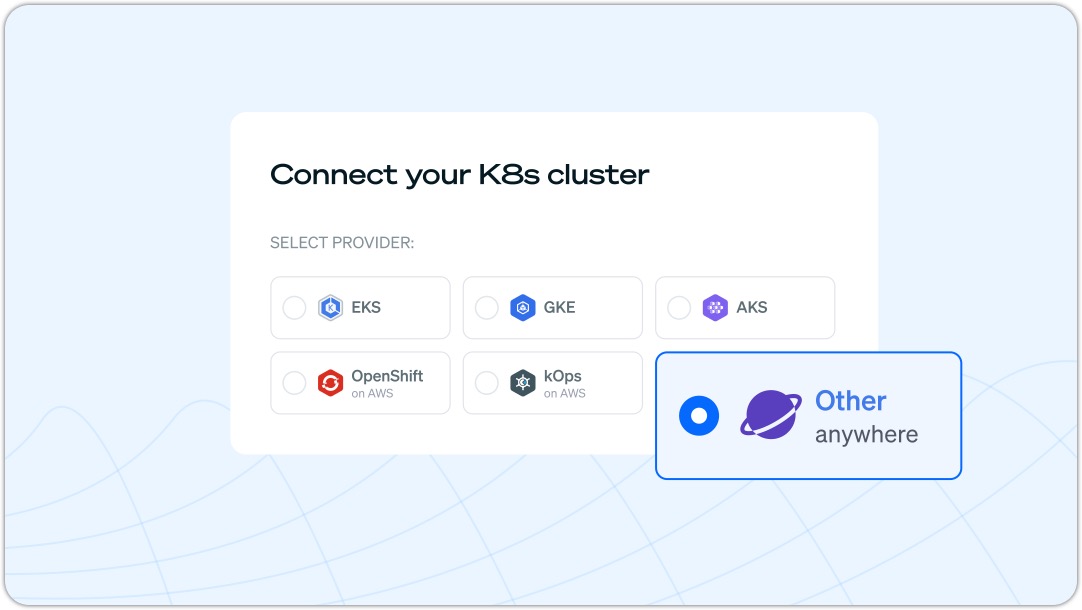

Cast AI Anywhere

We're excited to announce Cast AI Anywhere, a deployment model that brings our core optimization capabilities to any Kubernetes environment. This enables you to optimize Kubernetes clusters running in alternative cloud providers, private data centers, or on-premises infrastructure.

Key features:

- Workload optimization and resource consolidation for any Kubernetes environment

- Comprehensive cost monitoring and optimization reporting

For detailed information about deploying Cast AI Anywhere, see our Getting Started Guide.

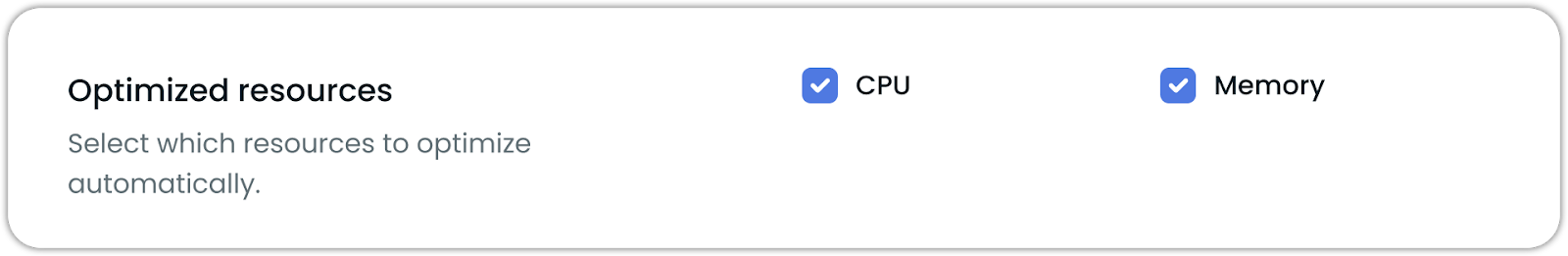

Single Resource Optimization Support

We've introduced the ability to optimize CPU and memory resources independently, giving you more granular control over workload optimization. This feature is particularly useful for applications with specific resource requirements, such as Java applications with predetermined memory allocations.

Key features:

- Choose to optimize CPU only or memory only at both policy and workload levels

- Maintain optimization recommendations for both resources while applying changes selectively

- Configure through Console, API, Annotations, and Terraform

This allows for more precise resource optimization strategies while respecting application-specific constraints. For detailed configuration options, see our Workload Autoscaling Configuration documentation.

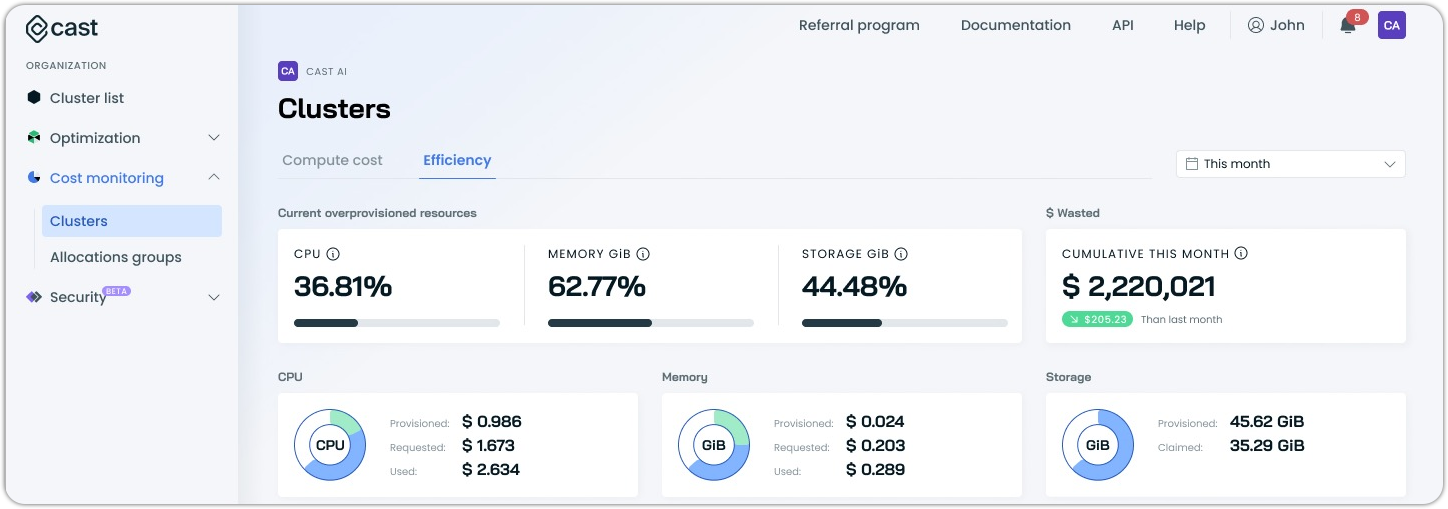

New Organization-Level Efficiency Report

The latest addition to our reporting suite brings comprehensive efficiency insights across all your clusters. This organization-wide view complements the familiar compute cost report by focusing on resource efficiency and optimization opportunities.

Key features:

- Organization-wide view of resource efficiency metrics

- Real-time overprovisioning indicators for CPU, memory, and storage

- Resource utilization analysis by instance lifecycle (On-demand, Spot, Fallback)

- Storage efficiency metrics for GKE clusters

For more information about analyzing and optimizing resource efficiency, see our Resource Efficiency documentation.

Optimization and Cost Management

Container-Level Constraints via Annotations

Workload autoscaling now supports container-level resource constraints through annotations. This allows you to set precise resource limits and requests for individual containers within a pod using Kubernetes annotations, providing greater flexibility in workload resource management.

Key benefits:

- Set container-specific resource constraints directly through annotations

- Simplify resource management for multi-container pods

Example annotation usage:

    annotations:
      workloads.cast.ai/configuration: |
        vertical:
          containers:
            {container_name}:
              cpu:
                min: 10m
                max: 1000m
              memory:
                min: 10Mi
                max: 128Mi

For detailed configuration options and examples, see our Workload Autoscaling Configuration documentation.
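For pods with several containers, the annotation value can be generated rather than written by hand. The following is a minimal Python sketch, not an official Cast AI tool. It assumes the nesting vertical > containers > container name > resource > min/max from the example annotation. The function name `render_container_constraints` and its input shape are hypothetical, chosen only for illustration.

```python
def render_container_constraints(constraints):
    """Render a value for the workloads.cast.ai/configuration annotation.

    `constraints` maps a container name to a dict such as
    {"cpu": ("10m", "1000m"), "memory": ("10Mi", "128Mi")}, where each
    tuple is (min, max). This input shape is an assumption of the sketch.
    """
    lines = ["vertical:", "  containers:"]
    for name, resources in constraints.items():
        lines.append(f"    {name}:")
        # Emit cpu before memory, skipping any resource that is not constrained.
        for resource in ("cpu", "memory"):
            if resource not in resources:
                continue
            low, high = resources[resource]
            lines.append(f"      {resource}:")
            lines.append(f"        min: {low}")
            lines.append(f"        max: {high}")
    return "\n".join(lines) + "\n"
```

The returned string can be placed under the `workloads.cast.ai/configuration` annotation key as a block scalar. Validate the result against the Workload Autoscaling Configuration documentation before applying it to a cluster.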

Enhanced Job Workload Scaling and Confidence Calculation

The Workload Autoscaler now features sophisticated confidence calculations for Jobs and CronJobs. This provides more accurate resource recommendations for periodic jobs with varying execution frequencies.

Key improvements:

- Confidence calculation is now primarily based on job execution count rather than metric volume

- Automatic detection of Jobs and CronJobs labeled as custom workloads

- Support for Jobs, CronJobs, and custom controllers that spawn Jobs when labeled as custom workloads

The system now requires a minimum of 3 job runs within the configured look-back period to achieve complete confidence, making it practical for hourly and daily jobs. We recommend using explicit autoscaling configurations through annotations for less frequent jobs rather than this new auto-discovery using the custom workload label.
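To illustrate why the three-run threshold suits hourly and daily jobs but not infrequent ones, here is a rough Python sketch. It is not Cast AI's actual algorithm: the release note only states that three runs within the look-back period give complete confidence, so the linear ramp below that threshold and the function name `job_confidence` are assumptions made for illustration.

```python
from datetime import datetime, timedelta, timezone

# From the release note: 3 runs in the look-back period give complete confidence.
REQUIRED_RUNS = 3


def job_confidence(run_times, lookback, now):
    """Return a confidence value in [0.0, 1.0] based on job execution count.

    Assumption: confidence grows linearly with the number of runs inside
    the look-back window until it reaches REQUIRED_RUNS.
    """
    window_start = now - lookback
    runs_in_window = sum(1 for t in run_times if window_start <= t <= now)
    return min(runs_in_window / REQUIRED_RUNS, 1.0)


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
lookback = timedelta(days=7)

# A daily job completes many runs inside a 7-day window.
daily_runs = [now - timedelta(days=d) for d in range(1, 7)]
# A job that ran once in the last week and once three weeks ago.
rare_runs = [now - timedelta(days=3), now - timedelta(days=21)]

daily_confidence = job_confidence(daily_runs, lookback, now)
rare_confidence = job_confidence(rare_runs, lookback, now)
```

Under these assumptions the daily job reaches full confidence, while the infrequent job stays below it until the look-back period is extended or more runs accumulate.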

For best results, we recommend configuring a separate scaling policy with an extended lookback period for Job-type workloads. For detailed configuration options and best practices, see our Workload Autoscaling Configuration documentation.

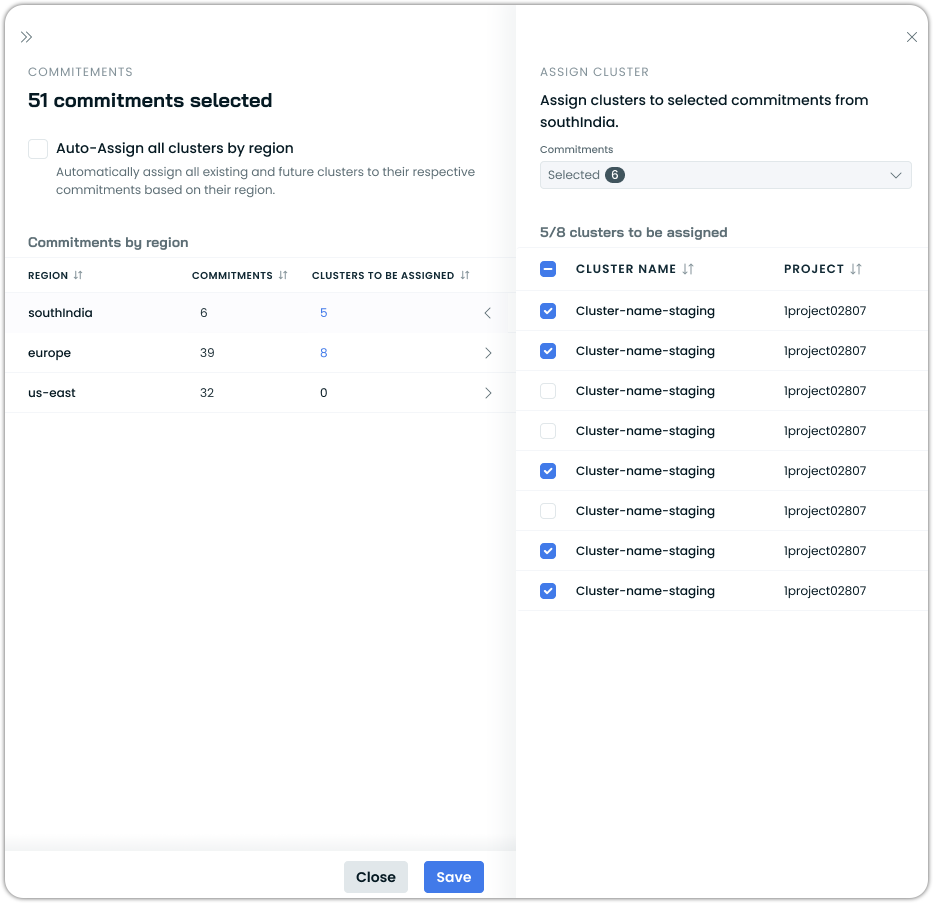

Streamlined Commitment Management with Auto-Assignment

We've introduced automatic commitment assignment to simplify commitment management across large cluster deployments. Commitments can now be assigned automatically to all current and future clusters within a specific region.

Key features:

- One-click assignment of commitments to all eligible clusters

- Automatic commitment assignment for newly created clusters

This significantly reduces the administrative overhead of managing commitments across multiple clusters, which is particularly beneficial for organizations with large-scale deployments. For more information about commitment management, see our Commitment Management documentation.

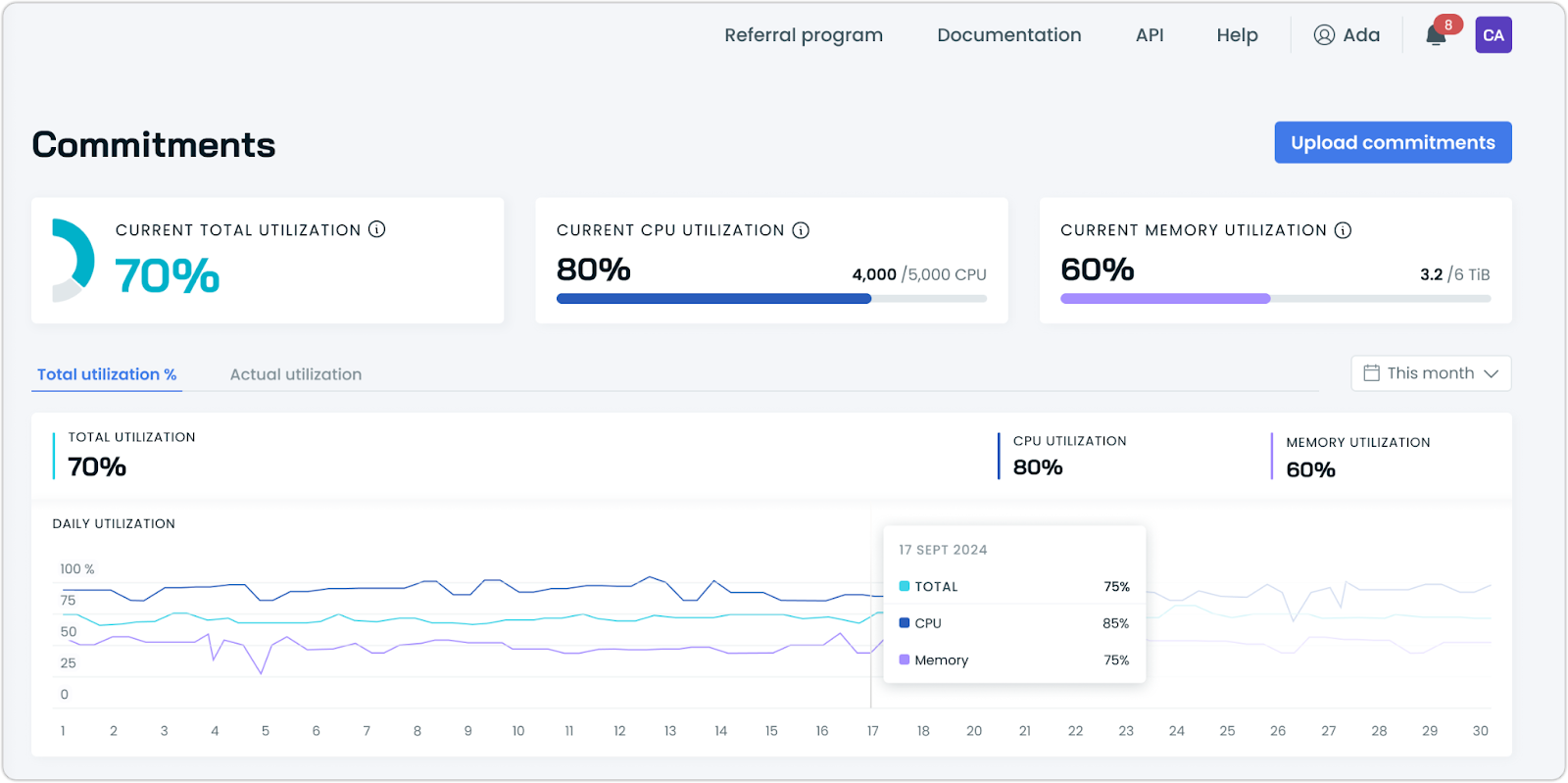

Improved Commitment Utilization Visualization

We've introduced new visualization capabilities for tracking commitment utilization, providing better insights into how your compute commitments are used over time. This new feature helps you monitor commitment efficiency and make data-driven decisions about future commitment purchases.

Key features:

- Visual timeline of total commitment utilization

- Historical utilization trends across your commitment portfolio

- Interactive graphs for detailed utilization analysis

For more information about managing and monitoring your commitments, see our Commitment Management documentation.

Enhanced Protection for Optimized Nodes

A new protection mechanism prevents premature removal of optimized ("green") nodes during paused rebalancing operations. The system applies an autoscaling.cast.ai/removal-disabled-until label with a UNIX timestamp to temporarily protect nodes from removal.

This update helps maintain cluster stability during optimization processes while ensuring efficient resource utilization. For more information about node management and rebalancing, see our Cluster Rebalancing documentation.
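When inspecting nodes, the label can be read to tell whether a node is still inside its protection window. The Python sketch below works on a plain dictionary of node labels. It assumes the label value is a decimal UNIX timestamp in seconds, which the release note does not specify, and the helper name `is_removal_disabled` is hypothetical.

```python
import time

PROTECTION_LABEL = "autoscaling.cast.ai/removal-disabled-until"


def is_removal_disabled(labels, now=None):
    """Return True while the node's protection timestamp lies in the future.

    `labels` is a dict of node labels. Assumption: the label value is a
    UNIX timestamp expressed in whole seconds.
    """
    raw = labels.get(PROTECTION_LABEL)
    if raw is None:
        return False  # no label, so no temporary protection
    try:
        until = int(raw)
    except ValueError:
        return False  # unparseable value is treated as unprotected in this sketch
    current = time.time() if now is None else now
    return current < until
```

The `now` parameter exists only to make the check deterministic in tests. Omit it to compare against the current system time.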

Improved Aggressive Mode in Scheduled Rebalancer

The Scheduled Rebalancer now supports Local Persistent Volumes (LPV) in aggressive mode operations. This provides more control over how nodes with local storage are handled during scheduled maintenance windows.

Key features:

- Optional ignore setting for Local Persistent Volumes in aggressive mode

- Configuration available for both manual and scheduled rebalancing

- Full Terraform support

For more information about configuring rebalancing operations, see our Scheduled Rebalancing documentation.

Node Configuration

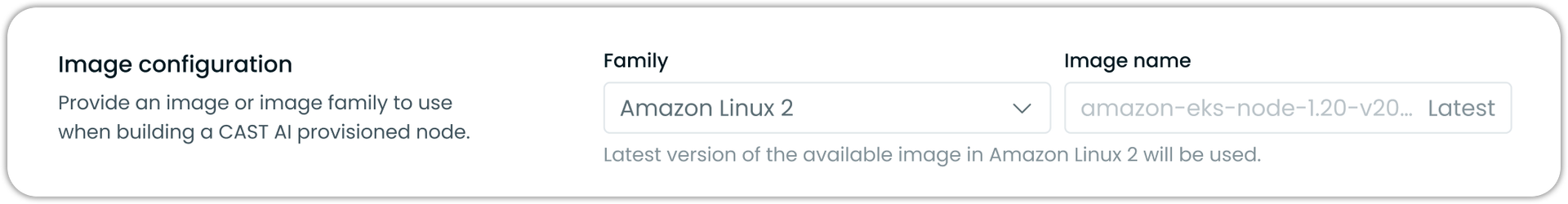

Operating System Family Selection for EKS and AKS

Node configuration options now include explicit operating system family selection for Amazon EKS and Azure AKS clusters. When configuring node templates, users can choose from supported operating system families such as Amazon Linux 2, Amazon Linux 2023, Bottlerocket, and more.

Key features:

- Operating system family selection via dropdown menu

- Support for multiple OS options

For more information about node configuration options, see our Node Configuration documentation.

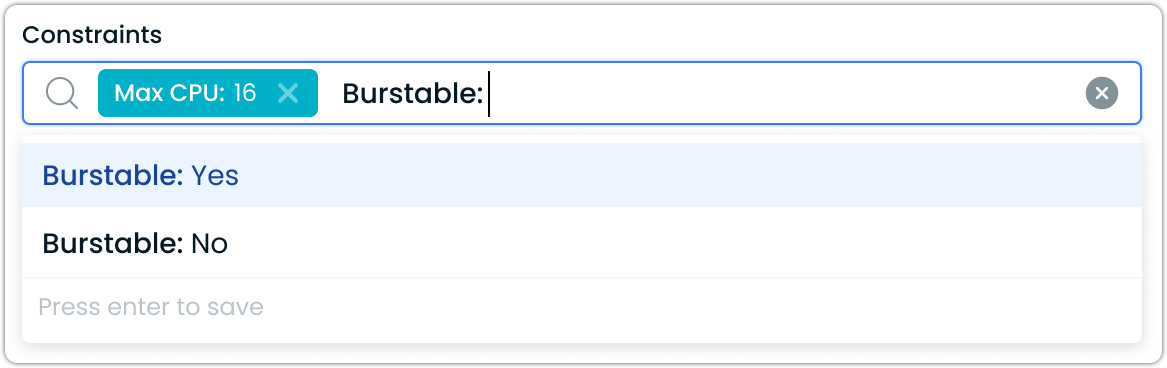

Expanded Burstable Instance Support

Burstable instance-type support now extends across all major cloud providers. While previously limited to Amazon EKS, users can now configure the burstable instance constraint for Google Cloud Platform (GCP) and Azure Kubernetes Service (AKS) clusters through node templates.

For more information about configuring node templates and instance constraints, see our Node Configuration documentation.

Also, be sure to read our highly popular blog post on Burstable vs. non-burstable instances.

Node Template Updates from Pod Mutations

Changes to node templates now propagate automatically: any modification to a Node Template refreshes all pod mutations linked to that template.

For more information about node templates and mutations, see our Pod Mutations documentation.

Cloud Provider-Specific Updates

EKS: IP-Based Load Balancer Target Groups

Support for IP-based target groups joins the existing instance ID-based targeting in EKS load balancer configurations. This enhancement brings greater flexibility to node registration with AWS load balancers, supporting more diverse networking architectures and use cases.

For detailed configuration instructions and best practices, see our EKS Node Configuration documentation.

EKS: Support for Karpenter v1 Migration

We've updated our Karpenter migration feature to support Karpenter v1, enabling seamless transitions from the latest version of Karpenter to Cast AI. This maintains our one-click migration capability while supporting Karpenter's newest API versions and features.

Key updates:

- Support for Karpenter v1 API objects

- Preserved support for legacy Karpenter versions

For detailed instructions on migrating from Karpenter to Cast AI, see our Karpenter Migration documentation.

Security and Compliance

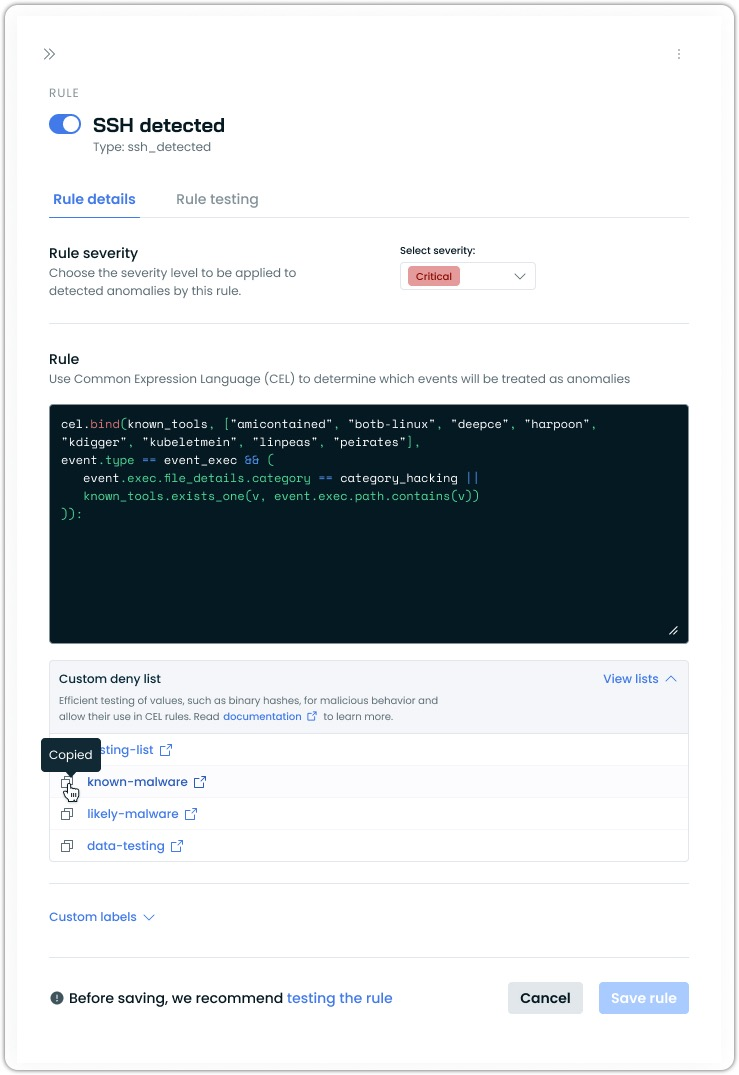

Improved Custom List Management

This release improves visualization and management capabilities for the custom list interface. Users can now clearly see rule associations and relationships between custom lists and security rules.

Key improvements:

- Visual indicators showing which rules use specific custom lists

- Direct access to associated rules from custom list entries

For more information about configuring custom lists and security rules, see our Runtime Security documentation.

User Interface Improvements

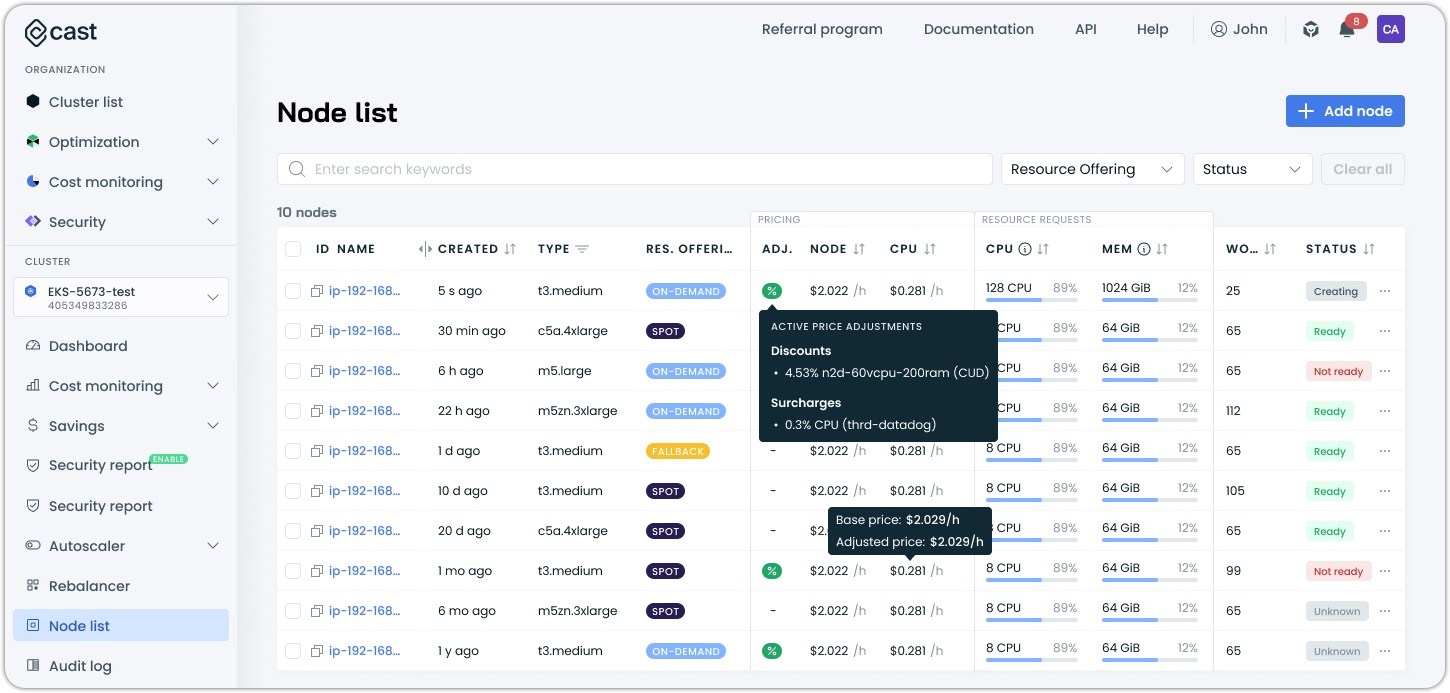

Enhanced Node Price Detail Visualization

A redesigned node list view brings comprehensive pricing information to the forefront. Users can now access detailed breakdowns of discounts and price adjustments that apply to the node, including:

- Base price and adjusted price comparisons

- Active price adjustments, including commitments and discounts

- Surcharges and other pricing modifiers (e.g., Datadog costs)

This addition provides greater transparency into how node costs are calculated and what factors affect your final pricing.

Updated Commitment Management Interface

Managing compute commitments becomes more intuitive with enhanced visual states and filtering in the commitments view. New capabilities include:

- Added visual distinction for disabled commitments with greyed-out rows

- New filter option to show enabled or disabled commitments

These improvements make tracking and managing your active and inactive commitments easier. For more information about managing commitments, see our Commitment Management documentation.

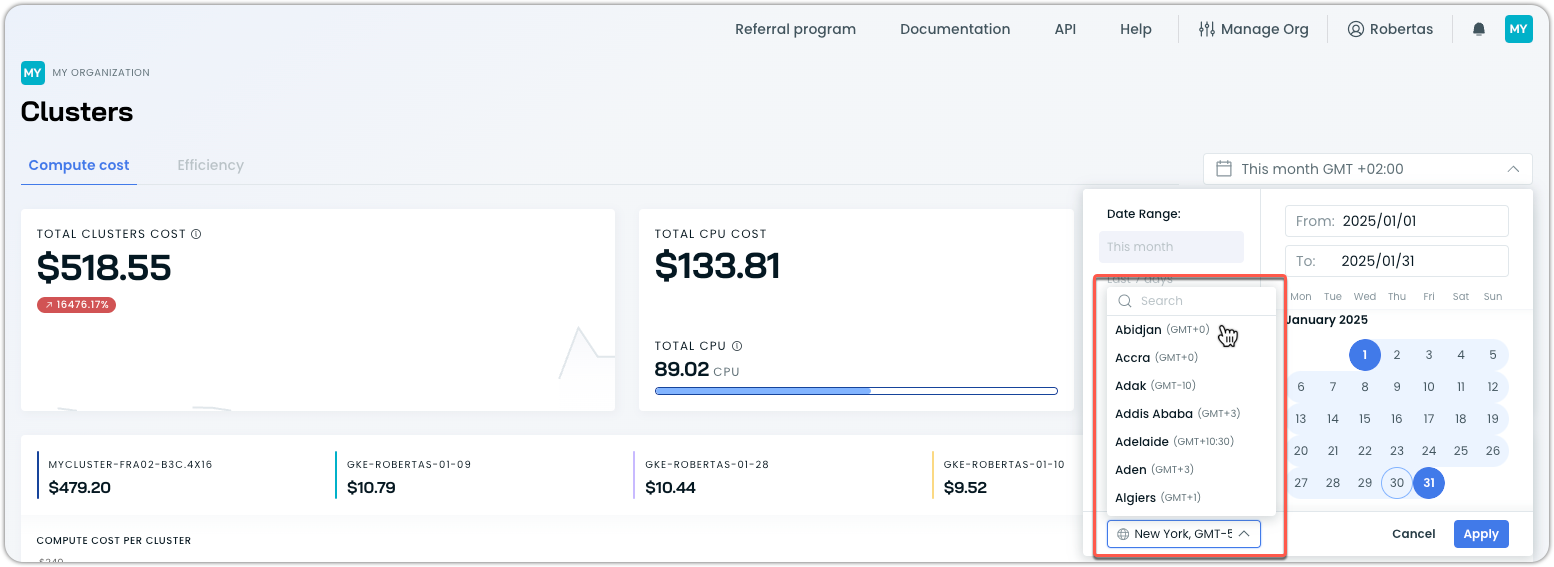

Global Timezone Setting Support

Time-based information across the Cast AI console gains flexible timezone controls. This platform-wide update provides better control over how temporal data is displayed.

Key improvements:

- New timezone selector in date pickers

- Global timezone setting for consistent time display across the platform

- Clear timezone indicators on all date and time displays

These changes ensure more precise time-based data visualization and better support for teams operating across multiple time zones.

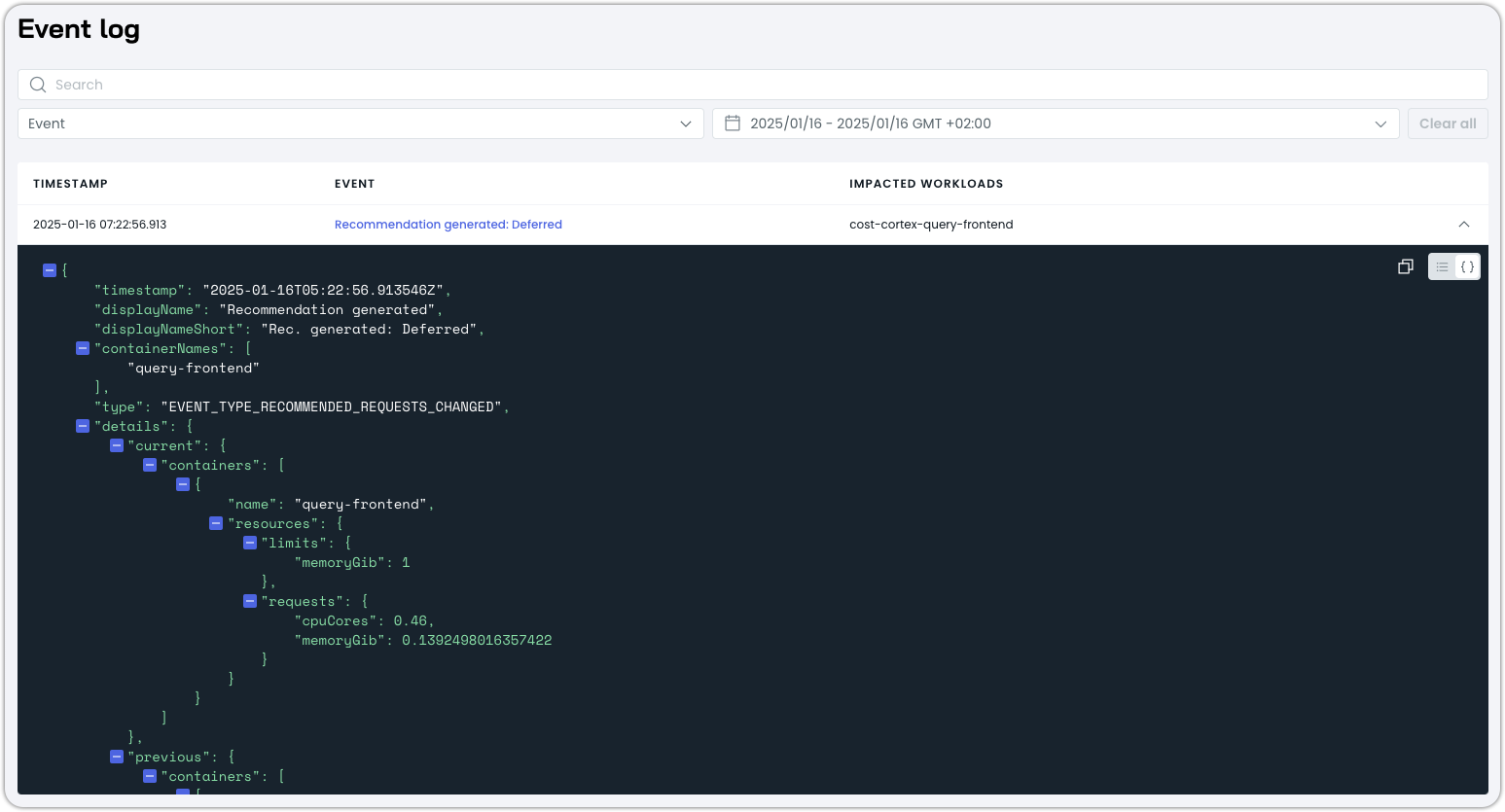

Enhanced Workload Autoscaler Event Log Visualization

The Workload Autoscaler event logs now feature an interactive JSON viewer, providing deeper visibility into event details. This new viewer allows you to explore the full event data structure with expandable sections and syntax highlighting.

These improvements make it easier to analyze and troubleshoot Workload Autoscaler decisions by providing access to complete event information in a more readable format. For more information, see our Event Log documentation.

API and Metrics Improvements

Improved Audit Logging for Rebalancing Operations

The audit logging system now tracks rebalancingPlanFailed events, offering better visibility into unsuccessful cluster rebalancing operations.

For more information about audit logging and rebalancing operations, see our Audit Logging documentation.

Component Updates

Cluster-Controller: Non-Root Execution Implementation

The cluster-controller now runs with non-root privileges, enhancing security and compliance with industry best practices.

Key updates:

- Cluster-controller now runs with a non-root user

- Maintains compatibility with security standards like Microsoft Defender for Cloud

- Full support for MustRunAsNonRoot security policies

To update the cluster-controller in your cluster, please follow our upgrade documentation. To learn more about the change to this open-source component, see the GitHub pull request.

Terraform and Agent Updates

We've released an updated version of our Terraform provider. As always, the latest changes are detailed in the changelog. The updated provider and modules are ready for use in your infrastructure as code projects in Terraform's registry.

We have released a new version of the Cast AI agent. The complete list of changes is here. To update the agent in your cluster, please follow these steps.