Rebalancing

Rebalancing is a Cast AI feature that brings your cluster to its optimal, up-to-date state. During this process, suboptimal Nodes are automatically replaced with new ones that are more cost-efficient and run the latest Node Configuration settings.

Rebalancing works by taking all the workloads in your cluster and finding the optimal way to distribute them across the cheapest set of Nodes.

Rebalancing uses the same algorithms that drive Cast AI Node autoscaling to determine optimal Node configurations for your workloads. The only difference is that all workloads are run through them, rather than just unschedulable Pods.

Purpose

The rebalancing process has multiple purposes:

- Rebalance the cluster during initial onboarding to achieve cost savings immediately. The rebalancer makes it easy to start using Cast AI by running your cluster through the Cast AI algorithms and reshaping it into an optimal state.

- Remove fragmentation, which is a normal byproduct of everyday cluster operation. Autoscaling is a reactive process that aims to satisfy unschedulable Pods. As these reactive decisions accumulate, your cluster might become too fragmented.

  Consider this example: a workload scales up by one replica every hour, and each replica requests 6 CPUs. If every new replica lands on a new 8-CPU Node, after a day the cluster has 24 new Nodes with 8 CPUs each: 192 CPUs provisioned for 144 CPUs requested, leaving 48 CPUs unused and fragmented across Nodes. The rebalancer aims to solve this by consolidating the workloads onto fewer, cheaper Nodes, reducing waste.

- Replace specific Nodes due to cost inefficiency or outdated Node Configuration. During the rebalancing operation, targeted Nodes are replaced with the optimal set of Nodes running the latest Node Configuration settings.
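The arithmetic in the fragmentation example can be checked with a short script. This is a minimal sketch that assumes each 6-CPU replica can only be packed as whole replicas onto identical Nodes, and it ignores memory and system-reserved capacity; the 48-CPU consolidated Node size is a hypothetical choice for illustration, not necessarily what Cast AI would select.

```python
import math


def fragmentation(replicas: int, cpu_per_replica: int, node_cpus: int) -> dict:
    """Compare provisioned and requested CPUs for replicas packed onto identical Nodes."""
    per_node = node_cpus // cpu_per_replica  # whole replicas that fit on one Node
    if per_node == 0:
        raise ValueError("a single replica does not fit on this Node size")
    nodes = math.ceil(replicas / per_node)
    provisioned = nodes * node_cpus
    requested = replicas * cpu_per_replica
    return {
        "nodes": nodes,
        "provisioned": provisioned,
        "requested": requested,
        "unused": provisioned - requested,
    }


# Reactive outcome from the example: each 6-CPU replica on its own 8-CPU Node.
print(fragmentation(replicas=24, cpu_per_replica=6, node_cpus=8))
# → {'nodes': 24, 'provisioned': 192, 'requested': 144, 'unused': 48}

# Hypothetical consolidated layout: the same replicas on 48-CPU Nodes.
print(fragmentation(replicas=24, cpu_per_replica=6, node_cpus=48))
# → {'nodes': 3, 'provisioned': 144, 'requested': 144, 'unused': 0}
```

The point of the comparison is that the waste comes from the mismatch between replica size and Node size, not from the total amount of work, which is why consolidation can remove it.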

Scope

You can rebalance the entire cluster or only a specific set of Nodes.

- To rebalance the whole cluster, choose Cluster > Rebalance.

- To rebalance a subset of Nodes, select them using Cluster > Node list and then choose Actions > Rebalance nodes.

After choosing the operation's scope, generate a Rebalancing plan to review the planned changes and their effect on cluster composition and costs. Only Nodes without problematic workloads are considered for rebalancing.

To reduce the number of problematic workloads and avoid service disruption, check the Preparation for the rebalancing guide.

Execution

Rebalancing consists of three distinct phases:

- Create new optimal Nodes.

- Drain old, suboptimal Nodes.

- Delete old, suboptimal Nodes. Nodes are deleted one by one as soon as they have been drained.
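The ordering of the three phases can be sketched as follows. This is a minimal illustration, not Cast AI's implementation: execute_plan and its create, drain, and delete callbacks are hypothetical names, and the sketch drains old Nodes one at a time for simplicity, which is an assumption rather than documented behavior.

```python
from typing import Callable, Iterable, List


def execute_plan(
    new_nodes: Iterable[str],
    old_nodes: Iterable[str],
    create: Callable[[str], None],
    drain: Callable[[str], None],
    delete: Callable[[str], None],
) -> None:
    """Illustrative ordering of the rebalancing phases (hypothetical helper)."""
    # Phase 1: bring up all replacement capacity before touching old Nodes.
    for node in new_nodes:
        create(node)
    # Phases 2 and 3: drain each old Node, then delete it as soon as its drain finishes.
    for node in old_nodes:
        drain(node)
        delete(node)


log: List[str] = []
execute_plan(
    ["new-a"],
    ["old-1", "old-2"],
    create=lambda n: log.append(f"create {n}"),
    drain=lambda n: log.append(f"drain {n}"),
    delete=lambda n: log.append(f"delete {n}"),
)
print(log)
# → ['create new-a', 'drain old-1', 'delete old-1', 'drain old-2', 'delete old-2']
```

Creating capacity first means evicted Pods have somewhere to land when the old Nodes are drained.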

Understanding Node deletion behavior

The way Cast AI removes Nodes depends on whether they contain workloads:

- Empty Nodes are deleted immediately by the autoscaler without draining. These are Nodes that have no Pods scheduled on them.

- Nodes with workloads go through a complete rebalancing cycle: Cast AI first creates new optimal Nodes, then drains the old Nodes (allowing Pods to reschedule gracefully), and finally deletes them one by one. This approach ensures workload availability throughout the rebalancing process.
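The two deletion paths can be expressed as a small classification step. In this sketch, the Node dataclass, removal_path, and split_nodes are hypothetical; any Pod counts as a workload, and how Cast AI treats DaemonSet or system Pods when deciding whether a Node is empty is not modeled.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    name: str
    pods: List[str] = field(default_factory=list)


def removal_path(node: Node) -> str:
    """Pick the deletion path described above for a single Node."""
    if not node.pods:
        return "delete-immediately"  # empty Node: no drain needed
    return "create-drain-delete"  # Node with workloads: full rebalancing cycle


def split_nodes(nodes: List[Node]) -> Dict[str, List[str]]:
    """Group Node names by the deletion path they would follow."""
    groups: Dict[str, List[str]] = {"delete-immediately": [], "create-drain-delete": []}
    for node in nodes:
        groups[removal_path(node)].append(node.name)
    return groups


print(split_nodes([Node("node-a"), Node("node-b", ["web-1"])]))
# → {'delete-immediately': ['node-a'], 'create-drain-delete': ['node-b']}
```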

Spot fallback Node handling

Rebalancing intentionally does not honor removal-disabled labels or annotations for spot-fallback Nodes. This design prevents clusters from remaining on expensive fallback instances due to operational issues.

Since workloads with Spot Instance selectors can tolerate interruptions by design, Cast AI assumes they can be safely migrated during rebalancing. To prevent prolonged reliance on fallback instances, adjust the fallback interval in your Spot Instance configuration to introduce a quiet period before attempting to move back to Spot Instances.
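The spot-fallback exception amounts to an eligibility check that skips the removal-disabled protection for fallback Nodes. The keys below (autoscaling.cast.ai/removal-disabled and scheduling.cast.ai/spot-fallback) and the value "true" are assumptions for illustration; confirm the exact keys your cluster uses. The sketch also assumes removal-disabled is honored on all other Nodes, and it ignores other eligibility rules such as problematic workloads.

```python
from typing import Mapping

# Assumed label/annotation keys, for illustration only.
REMOVAL_DISABLED = "autoscaling.cast.ai/removal-disabled"
SPOT_FALLBACK = "scheduling.cast.ai/spot-fallback"


def can_rebalance(labels: Mapping[str, str], annotations: Mapping[str, str]) -> bool:
    """Hypothetical check mirroring the spot-fallback exception described above."""
    if labels.get(SPOT_FALLBACK) == "true":
        # Rebalancing intentionally ignores removal-disabled on spot-fallback Nodes.
        return True
    protected = (
        labels.get(REMOVAL_DISABLED) == "true"
        or annotations.get(REMOVAL_DISABLED) == "true"
    )
    return not protected


print(can_rebalance({SPOT_FALLBACK: "true", REMOVAL_DISABLED: "true"}, {}))  # → True
print(can_rebalance({REMOVAL_DISABLED: "true"}, {}))  # → False
```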

Rebalancing with Workload Autoscaler recommendations

Cast AI rebalancing takes Workload Autoscaler recommendations into account when deciding how to rebalance. This lets rebalancing operations consider the resource optimizations recommended by Workload Autoscaler, creating a more comprehensive approach to cluster optimization.

How it works

The rebalancing process considers both Node optimization factors and workload-level resource requirements:

- The system retrieves the current Workload Autoscaler recommendations for all workloads (pending and applied)

- These recommendations are integrated into the rebalancing plan calculation

- New Nodes are provisioned with capacity that matches both optimal Node types and the recommended resource requirements

- Workloads are rescheduled accordingly, with all previously deferred resource recommendations now applied
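The effect of feeding recommendations into the plan can be approximated by summing each workload's recommended requests instead of its current ones. This sketch models CPU only, with a hypothetical Workload dataclass and helper names; the actual selection and bin-packing of Node types is omitted.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Workload:
    name: str
    replicas: int
    cpu_request: float  # current per-replica CPU request, in cores
    cpu_recommended: Optional[float] = None  # pending or applied recommendation, if any


def effective_cpu(w: Workload) -> float:
    """CPU the plan should reserve: the recommendation if present, else the current request."""
    per_replica = w.cpu_recommended if w.cpu_recommended is not None else w.cpu_request
    return per_replica * w.replicas


def capacity_needed(workloads: List[Workload]) -> float:
    return sum(effective_cpu(w) for w in workloads)


workloads = [
    Workload("api", replicas=4, cpu_request=2.0, cpu_recommended=1.0),
    Workload("worker", replicas=2, cpu_request=1.5),  # no recommendation yet
]
print(capacity_needed(workloads))  # → 7.0
print(sum(w.cpu_request * w.replicas for w in workloads))  # → 11.0
```

Sizing new Nodes for 7 cores instead of 11 is what lets a single rebalancing pass capture both the Node-level and the workload-level savings.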

Considerations

Integrating Workload Autoscaler with rebalancing optimizes both Nodes and workloads simultaneously. This unified approach often leads to greater cost savings, especially when workloads are recommended for downsizing. Your cluster can also proactively adjust resources before pending Pods trigger reactive autoscaling.

Keep in mind the following:

- Potential cost increases: If Workload Autoscaler recommends resource increases, rebalancing may increase costs while improving performance and stability

- Savings threshold: When using a savings threshold with scheduled rebalancing, operations that would increase costs due to workload optimization won't execute. See scheduled rebalancing documentation.

- Version requirements: This integration works best with Workload Autoscaler version 0.31.0 or higher. See upgrading your Workload Autoscaler version.
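The savings threshold interaction can be sketched as a pre-execution check. Here the threshold is modeled as a minimum percentage of current cost, which is an assumption, and passes_savings_threshold is a hypothetical helper; a plan that raises costs yields negative savings and therefore never passes a non-negative threshold.

```python
def passes_savings_threshold(current_hourly: float, planned_hourly: float, threshold_pct: float) -> bool:
    """Hypothetical check: run only if the plan saves at least threshold_pct of current cost."""
    if current_hourly <= 0:
        raise ValueError("current cost must be positive")
    savings_pct = (current_hourly - planned_hourly) / current_hourly * 100
    return savings_pct >= threshold_pct


# Recommendations increase requests, so the plan costs more: skipped.
print(passes_savings_threshold(10.0, 12.0, 5.0))  # → False
# Recommendations shrink requests: 20% savings clears a 15% threshold.
print(passes_savings_threshold(10.0, 8.0, 15.0))  # → True
```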

Configuration

To verify that a rebalancing operation took resource recommendations into account, monitor your cluster's resource utilization trends after the operation completes. You should see visible changes in your resource metrics, typically a reduction in provisioned and requested resources, as shown in the example below:

In this example, the rebalancing with Workload Autoscaler recommendations was executed at the point indicated by the arrows, resulting in:

- A decrease in provisioned CPUs from ~180 to ~120 CPU cores

- A reduction in provisioned memory from ~600 to ~450 GiB

These changes should persist over time rather than triggering immediate autoscaling events, which indicates that the new resource allocation fits the requirements of the workloads managed by Workload Autoscaler.
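The relative size of the reductions in the example works out as follows. The inputs are the approximate values read from the chart, so the resulting percentages are approximate too; pct_reduction is a hypothetical helper.

```python
def pct_reduction(before: float, after: float) -> float:
    """Percentage decrease from before to after."""
    return (before - after) / before * 100


print(f"CPU: {pct_reduction(180, 120):.1f}%")  # → CPU: 33.3%
print(f"Memory: {pct_reduction(600, 450):.1f}%")  # → Memory: 25.0%
```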

Please contact Cast AI support for assistance with enabling or configuring this feature.

Rebalancing timeout behavior

During rebalancing, Cast AI enforces a timeout to ensure the process is completed in a timely manner. A hard 80-minute timeout applies to the entire rebalancing operation, including:

- Node creation

- Node draining

- Node deletion

By default, rebalancing performs forceful draining. If a Node fails to drain within this non-configurable timeout period, it will be forcefully drained and deleted from the cluster.

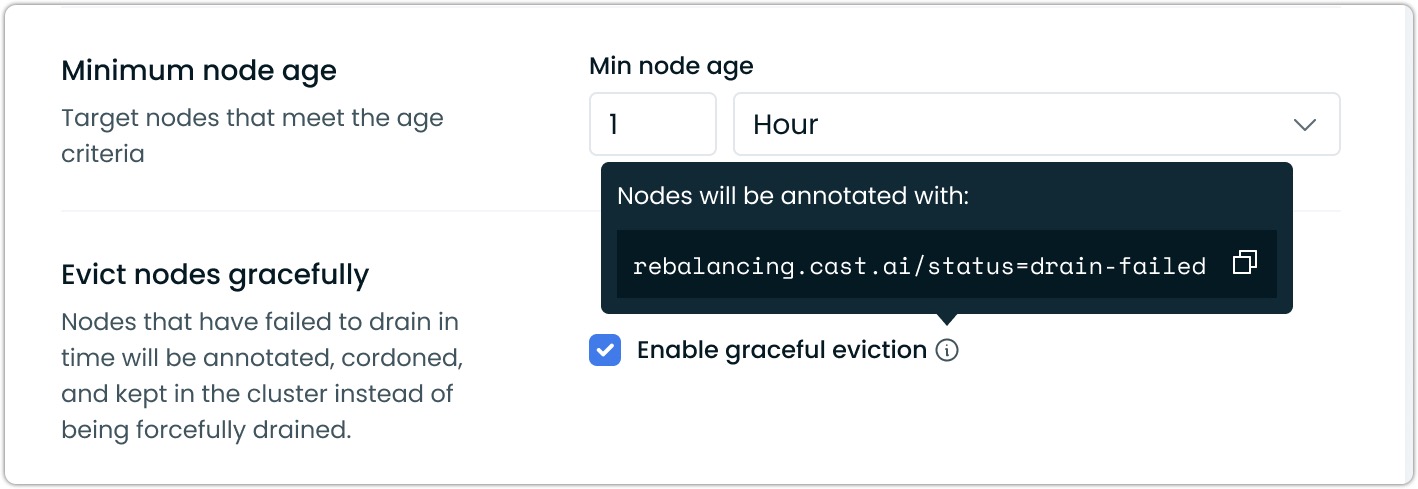

When graceful rebalancing is enabled, Nodes that fail to drain within the timeout period will be marked with the annotation rebalancing.cast.ai/status=drain-failed. The Node will remain cordoned off but not deleted from the cluster.
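After a graceful rebalancing run, Nodes left behind can be found by looking for the drain-failed annotation. This sketch takes the parsed JSON produced by kubectl get nodes -o json (items, metadata.annotations, and spec.unschedulable are standard Kubernetes Node fields); drain_failed_nodes is a hypothetical helper, and the sample input is made up.

```python
import json
from typing import Any, Dict, List

ANNOTATION_KEY = "rebalancing.cast.ai/status"
DRAIN_FAILED = "drain-failed"


def drain_failed_nodes(node_list: Dict[str, Any]) -> List[str]:
    """Return names of Nodes that carry the drain-failed annotation."""
    names = []
    for item in node_list.get("items", []):
        metadata = item.get("metadata", {})
        annotations = metadata.get("annotations") or {}  # field may be absent
        if annotations.get(ANNOTATION_KEY) == DRAIN_FAILED:
            names.append(metadata["name"])
    return names


sample = json.loads("""
{
  "kind": "List",
  "items": [
    {"metadata": {"name": "node-a", "annotations": {"rebalancing.cast.ai/status": "drain-failed"}},
     "spec": {"unschedulable": true}},
    {"metadata": {"name": "node-b"}, "spec": {}}
  ]
}
""")
print(drain_failed_nodes(sample))  # → ['node-a']
```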

To enable graceful rebalancing, do the following:

For manual rebalancing:

- Go to Rebalancer > Prepare new plan

- Enable the Evict nodes gracefully toggle in rebalancing settings

- Generate and execute your plan

For scheduled rebalancing:

- Go to Rebalancer > Schedule rebalancing

- Enable the Evict nodes gracefully option in settings

- Configure and save your schedule

Graceful Node eviction enabled in a rebalancing schedule

Handling failed Node drains

If a Node becomes stuck in the draining state during rebalancing:

- Check for blockers:

  - Review Pod Disruption Budgets (PDBs) that might prevent Pod eviction

  - Look for Pods that cannot be rescheduled due to resource or other constraints

  - Check for Pods with local storage or Node affinity requirements

- Manual recovery steps:

  - Remove the rebalancing.cast.ai/status=drain-failed annotation

  - Uncordon the Node if you want it to remain active in the cluster

  - Or manually drain and delete the Node if you still want to remove it
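If you choose to keep the Node, the annotation removal and uncordon steps can be scripted with the official Kubernetes Python client. This is a minimal sketch: recover_node and the patch builders are hypothetical helpers, the patches correspond to kubectl annotate node NAME rebalancing.cast.ai/status- followed by kubectl uncordon NAME, and the client import is deferred so the builders work without a cluster. Manually draining and deleting the Node is not covered.

```python
from typing import Any, Dict

ANNOTATION = "rebalancing.cast.ai/status"


def remove_annotation_patch() -> Dict[str, Any]:
    # In a merge patch, a null value deletes the key.
    return {"metadata": {"annotations": {ANNOTATION: None}}}


def uncordon_patch() -> Dict[str, Any]:
    # Uncordoning a Node means setting spec.unschedulable back to false.
    return {"spec": {"unschedulable": False}}


def recover_node(name: str) -> None:
    """Remove the drain-failed annotation and uncordon the Node (needs cluster access)."""
    from kubernetes import client, config  # deferred: only needed when actually patching

    config.load_kube_config()
    api = client.CoreV1Api()
    api.patch_node(name, remove_annotation_patch())
    api.patch_node(name, uncordon_patch())
```

Run recover_node only after resolving whatever blocked the drain; otherwise the next rebalancing may hit the same blocker.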

Pod Disruption Budget (PDB) handling

During rebalancing, Cast AI respects Kubernetes Pod Disruption Budgets to maintain application availability. However, to prevent rebalancing operations from stalling indefinitely, Cast AI enforces a per-node drain timeout.

How PDBs are handled

When draining a node during rebalancing:

- Cast AI attempts to evict pods while respecting their PDBs

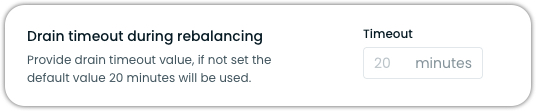

- If a pod cannot be evicted due to a PDB constraint, Cast AI waits up to the configured drain timeout (default: 20 minutes, maximum: 60 minutes) for the constraint to be satisfied

- After the timeout expires, the behavior depends on your graceful eviction setting:

| Graceful eviction setting | Behavior after timeout |
|---|---|
| Disabled (default) | Node is forcefully drained and deleted. PDBs may be violated to complete the rebalancing operation. |
| Enabled | Node remains cordoned with the rebalancing.cast.ai/status=drain-failed annotation. PDBs are never violated. |
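The table above can be read as a small decision function for a single Node blocked by a PDB. after_pdb_wait and validate_timeout are hypothetical names; the 20-minute default and 60-minute maximum come from this page, while rejecting zero or negative values is an assumption of the sketch.

```python
DEFAULT_DRAIN_TIMEOUT_MIN = 20
MAX_DRAIN_TIMEOUT_MIN = 60


def validate_timeout(minutes: int = DEFAULT_DRAIN_TIMEOUT_MIN) -> int:
    """Reject drain timeouts outside the documented range (lower bound assumed)."""
    if not 0 < minutes <= MAX_DRAIN_TIMEOUT_MIN:
        raise ValueError(f"drain timeout must be > 0 and <= {MAX_DRAIN_TIMEOUT_MIN} minutes")
    return minutes


def after_pdb_wait(elapsed_min: float, graceful: bool, timeout_min: int = DEFAULT_DRAIN_TIMEOUT_MIN) -> str:
    """What happens to a Node whose Pods are still blocked by a PDB."""
    timeout_min = validate_timeout(timeout_min)
    if elapsed_min < timeout_min:
        return "keep-waiting"  # PDB is still respected
    if graceful:
        return "leave-cordoned-with-drain-failed-annotation"
    return "force-drain-and-delete"  # default: PDB may be violated


print(after_pdb_wait(5, graceful=False))  # → keep-waiting
print(after_pdb_wait(20, graceful=False))  # → force-drain-and-delete
print(after_pdb_wait(20, graceful=True))  # → leave-cordoned-with-drain-failed-annotation
```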

Configuring the drain timeout

The drain timeout is configured at the Node configuration level, not within individual rebalancing operations. This ensures consistent behavior across all operations that drain nodes provisioned with that configuration.

To adjust the drain timeout:

- Go to Autoscaler > Node configuration

- Select the configuration you want to modify (or create a new one)

- Set your preferred value in the Drain timeout during rebalancing field (default: 20 minutes, maximum: 60 minutes)

Drain timeout setting in Node configuration

The configured timeout applies to all rebalancing operations affecting nodes that use this configuration.

Important: With the default setting (graceful eviction disabled), Cast AI may override PDBs after the drain timeout expires to complete the rebalancing operation. If your applications have strict availability requirements that must never be violated, enable Evict nodes gracefully in your rebalancing configuration.

When to enable graceful eviction

Enable Evict nodes gracefully if:

- Your applications have strict availability requirements that must never be violated

- You prefer manual intervention over automatic PDB override

- You run stateful workloads where availability guarantees are critical

With graceful eviction enabled, nodes that cannot be drained within the timeout may require manual intervention. See Handling failed Node drains for recovery steps.

Why Cast AI enforces a drain timeout

Without a timeout, a misconfigured PDB or scheduling constraint could block rebalancing indefinitely. The default 20-minute per-node timeout (configurable up to a maximum of 60 minutes) balances respecting PDBs with ensuring rebalancing operations complete in a reasonable timeframe. The default behavior (forceful drain after timeout) prioritizes completing the rebalancing operation.

Important: If you rely on PDBs to guarantee application availability during voluntary disruptions, enable the Evict nodes gracefully setting. With the default setting, PDBs may be overridden after the timeout to allow rebalancing to proceed.
