Autoscaler settings

Cluster CPU limits policy

The cluster CPU limits policy lets you set boundaries on the total number of vCPUs available across all worker nodes in your cluster. This policy helps manage cluster scaling and resource allocation.

How it works

- When enabled, this policy keeps the cluster CPU resources within the defined minimum and maximum limits.

- The cluster will not scale down below the minimum CPU limit or scale up above the maximum CPU limit, even if there are pending Pods.
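The two rules above amount to clamping the cluster's total core count to a range. The sketch below only illustrates that rule; `clamp_cores` is a hypothetical helper, not part of any Cast AI tooling, and it does not describe how the autoscaler is implemented internally.

```shell
# Illustrative only: models the documented rule, not Cast AI's internal logic.
# Usage: clamp_cores DESIRED MIN MAX
# Prints the total core count the cluster could actually reach.
clamp_cores() {
  desired=$1 min=$2 max=$3
  if [ "$desired" -lt "$min" ]; then
    echo "$min"      # scale-down stops at the minimum limit
  elif [ "$desired" -gt "$max" ]; then
    echo "$max"      # scale-up stops at the maximum limit, even with pending Pods
  else
    echo "$desired"  # within limits: no restriction applies
  fi
}
```

For example, with limits of 1 and 20 cores, `clamp_cores 25 1 20` prints `20`: workloads that would need 25 cores leave some Pods pending rather than pushing the cluster past the maximum.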

Configuring CPU limits policy

You can configure the CPU limits policy through the Cast AI Console or one of our API endpoints.

The Cast AI Console

- Navigate to Cluster -> Autoscaler -> Settings.

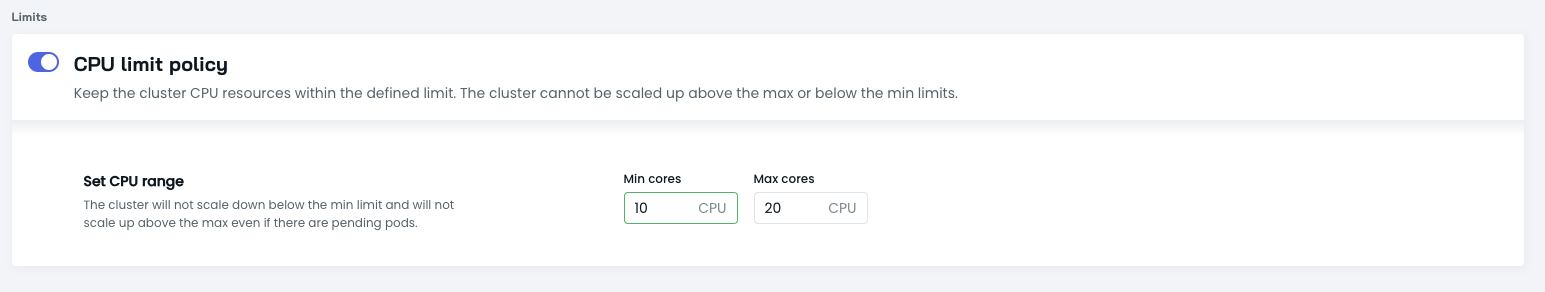

- Under Limits, toggle the CPU limit policy to On.

- Set the minimum and maximum CPU cores as needed.


API

- Use the Cast AI policies API endpoint: /v1/kubernetes/clusters/{clusterId}/policies.

- Set CPU limit values accordingly:

    "clusterLimits": {
      "enabled": true,
      "cpu": {
        "minCores": 1,
        "maxCores": 20
      }
    }

The new settings will propagate immediately after being applied.
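As a rough sketch, the request could be scripted as shown here. Several details are assumptions rather than facts from this page: that the endpoint is updated with `PUT`, that `clusterLimits` is a top-level key of the request body, and that a partial body is accepted (the API may require the full policies object, so check the API reference first). `build_cpu_limits_payload` and `put_policies` are hypothetical helper names; the `X-API-Key` header, `$CASTAI_API_TOKEN`, and `$CLUSTER_ID` follow the verification example further down this page.

```shell
# Build the clusterLimits JSON body from a minimum and maximum core count.
# Usage: build_cpu_limits_payload MIN_CORES MAX_CORES
build_cpu_limits_payload() {
  min=$1 max=$2
  if [ "$min" -gt "$max" ]; then
    echo "minCores must not exceed maxCores" >&2
    return 1
  fi
  printf '{"clusterLimits":{"enabled":true,"cpu":{"minCores":%d,"maxCores":%d}}}\n' "$min" "$max"
}

# Send a JSON body to the policies endpoint.
# Assumption: PUT is the update method; confirm against the API reference.
put_policies() {
  curl -sS -X PUT \
    -H "X-API-Key: $CASTAI_API_TOKEN" \
    -H "Content-Type: application/json" \
    -d "$1" \
    "https://api.cast.ai/v1/kubernetes/clusters/$CLUSTER_ID/policies"
}
```

A call would then look like `put_policies "$(build_cpu_limits_payload 1 20)"`. Keeping the payload builder separate makes it possible to inspect the JSON before anything is sent.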

Scoped mode

Scoped mode limits node autoscaling by requiring Pods to have specific tolerations to trigger scale-up, and restricting downscaling to only Cast AI-provisioned nodes.

Only Pods that tolerate the scheduling.cast.ai/scoped-autoscaler taint will cause the autoscaler to add new nodes, and only nodes with the provisioner.cast.ai/managed-by=cast.ai label can be removed during downscaling.

When to use scoped mode

Scoped mode is useful in scenarios where:

- You want to maintain existing node groups while autoscaling only Cast-managed infrastructure

- You're gradually migrating from traditional node groups to Cast AI node templates

- You need to ensure certain workloads remain on specific, non-Cast-managed nodes

- You want to prevent the autoscaler from downscaling manually provisioned nodes

How scoped mode works

When scoped mode is enabled:

- Downscaling operations will only target nodes that have the label provisioner.cast.ai/managed-by=cast.ai

- The default-by-castai node template receives an additional taint: scheduling.cast.ai/scoped-autoscaler:NoSchedule

- Pods must tolerate this taint to be scheduled on nodes created from the default template

- Custom node templates do not automatically receive this taint and must be configured manually if needed

- Existing Cast AI-managed nodes are not automatically tainted when scoped mode is enabled

- Manually provisioned nodes and existing node groups remain untouched by autoscaling operations

This ensures that:

- Only nodes created and managed by Cast AI participate in autoscaling

- Existing node groups and manually managed nodes remain stable

- You maintain full control over which parts of your infrastructure are autoscaled

Enable scoped mode

Scoped mode can only be configured via the Cast AI API or Terraform; it is not available through the Cast AI Console.

Use the policies API endpoint to enable scoped mode:

    {
      "nodeDownscaler": {
        "enabled": true
      },
      "isScopedMode": true
    }

Configure Pods for scoped mode

When scoped mode is enabled, Pods that should be scheduled on autoscaler-managed nodes must include the following toleration:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my-application
    spec:
      template:
        spec:
          tolerations:
            - key: "scheduling.cast.ai/scoped-autoscaler"
              operator: "Exists"
              effect: "NoSchedule"
          # Additional Pod configuration

Pods without this toleration will not be scheduled on autoscaler-managed nodes and will remain on your existing node groups or manually provisioned nodes. The autoscaler will also not consider Pods lacking this toleration when deciding to scale up the cluster and add nodes for more capacity, even if these Pods are unschedulable.

Existing workloads: When enabling scoped mode on a cluster with existing Cast AI-managed nodes, those nodes are not automatically tainted. You must update existing Pods with the appropriate toleration before the nodes are replaced or recreated with the scoped-autoscaler taint.
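One possible way to add the toleration to an existing Deployment without editing its manifest is `kubectl patch`. This is a minimal sketch: `scoped_toleration_patch` and `add_scoped_toleration` are hypothetical helper names, and the Deployment name and namespace are whatever applies in your cluster. Note that a merge patch replaces the whole `tolerations` list, so any tolerations the workload already has would need to be included in the patch as well.

```shell
# Print a JSON merge patch that sets the scoped-autoscaler toleration
# on a Deployment's Pod template.
scoped_toleration_patch() {
  printf '%s\n' '{"spec":{"template":{"spec":{"tolerations":[{"key":"scheduling.cast.ai/scoped-autoscaler","operator":"Exists","effect":"NoSchedule"}]}}}}'
}

# Apply the patch to one Deployment.
# Usage: add_scoped_toleration DEPLOYMENT_NAME NAMESPACE
# Caution: this overwrites any tolerations already set on the Pod template.
add_scoped_toleration() {
  kubectl patch deployment "$1" -n "$2" --type merge -p "$(scoped_toleration_patch)"
}
```

For instance, `add_scoped_toleration my-application default` would patch the Deployment from the example above, assuming it lives in the `default` namespace. Changing the Pod template triggers a rollout of the Deployment.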

Identify which nodes are managed by Cast AI

You can identify which nodes are managed by the autoscaler by checking for the Cast AI management label:

    kubectl get nodes -l provisioner.cast.ai/managed-by=cast.ai

Nodes without this label will be excluded from autoscaling operations when running in scoped mode.
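The inverse view, listing the nodes that scoped mode leaves alone, can be obtained with a `!=` label selector, which in Kubernetes also matches nodes that do not carry the label at all. `UNMANAGED_SELECTOR` and `list_unmanaged_nodes` are illustrative names only.

```shell
# Selector for nodes that are NOT labeled as managed by Cast AI.
# A != selector also matches nodes that lack the label entirely.
UNMANAGED_SELECTOR='provisioner.cast.ai/managed-by!=cast.ai'

# List the nodes excluded from autoscaling operations in scoped mode.
list_unmanaged_nodes() {
  kubectl get nodes -l "$UNMANAGED_SELECTOR"
}
```

Comparing this output with the managed list is a quick way to confirm that existing node groups fall outside the autoscaler's scope before you enable scoped mode.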

Verify scoped mode operation

To verify that scoped mode is working correctly:

- Check autoscaler policy configuration:

    curl -H "X-API-Key: $CASTAI_API_TOKEN" \
      "https://api.cast.ai/v1/kubernetes/clusters/$CLUSTER_ID/policies"

  Look for "isScopedMode": true.

- Verify nodes are tainted:

    kubectl get nodes -l provisioner.cast.ai/managed-by=cast.ai -o json | \
      jq '.items[].spec.taints[]? | select(.key=="scheduling.cast.ai/scoped-autoscaler")'
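The policy check can also be scripted so that it returns an exit status instead of requiring a visual scan. The helper below is a hypothetical sketch: it does a plain text match on the response rather than parsing the JSON, which is enough for a quick check but assumes the field appears exactly as `"isScopedMode": true` in the response.

```shell
# Read a policies JSON document on stdin and succeed (exit 0)
# only if it contains "isScopedMode": true.
# Whitespace is stripped first so formatting differences do not matter.
is_scoped_mode_enabled() {
  tr -d ' \n\t' | grep -q '"isScopedMode":true'
}
```

It could be combined with the request above, for example `curl -sS -H "X-API-Key: $CASTAI_API_TOKEN" "https://api.cast.ai/v1/kubernetes/clusters/$CLUSTER_ID/policies" | is_scoped_mode_enabled && echo "scoped mode is on"`.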

Disable scoped mode

When disabling scoped mode, the scheduling.cast.ai/scoped-autoscaler:NoSchedule taint is not automatically removed from the default-by-castai node template. You must manually remove the taint after disabling scoped mode.

Use the policies API endpoint to disable scoped mode:

    {
      "nodeDownscaler": {
        "enabled": true
      },
      "isScopedMode": false
    }

After disabling scoped mode, manually remove the taint from your node templates to restore normal scheduling behavior.

Do not remove the scoped-autoscaler taint manually while scoped mode is enabled: Manually removing the taint on the default-by-castai node template without disabling scoped mode risks improper node downscaling, potentially deleting non-Cast AI nodes.

Combine scoped mode with Evictor

Scoped mode can be used independently for the autoscaler or in combination with Evictor's scoped mode.

- Autoscaler scoped mode only: Autoscaler provisions and downscales only Cast AI-managed nodes, but Evictor can bin-pack workloads across all nodes

- Evictor scoped mode only: Evictor bin-packs only Cast AI-managed nodes, but the autoscaler can provision nodes for any workload and downscale any node

- Both in scoped mode: Complete isolation where both autoscaler and Evictor only affect Cast AI-managed nodes

For Evictor (via Helm):

    helm upgrade --install castai-evictor castai-helm/castai-evictor -n castai-agent \
      --set dryRun=false,scopedMode=true

For more information on Evictor's scoped mode and configuration, see the Evictor documentation.
