AI Enabler settings

Learn how to configure AI Enabler settings to control LLM routing behavior, optimize costs, and manage prompt data.

The AI Enabler Settings page is the central place to configure how the Cast AI Enabler Proxy handles your LLM requests. You can also manage these settings through the API. This guide covers the Cast AI console.

Accessing the Settings page

To access the AI Enabler Settings page:

- Log in to the Cast AI console

- Navigate to AI Enabler in the sidebar menu

- Select Settings from the submenu

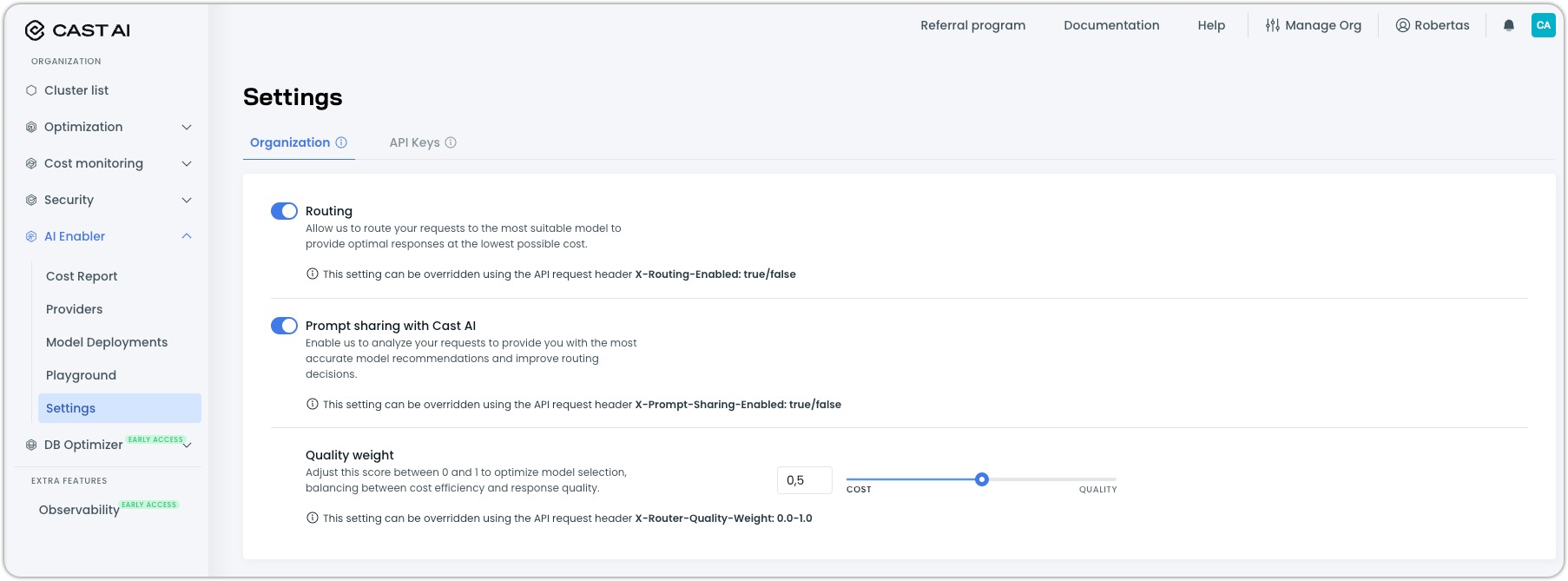

The settings page has two tabs, Organization and API Keys, which allow you to configure settings at different levels.

Organization settings

The Organization tab contains global settings that apply to all LLM requests sent to AI Enabler, unless overridden at the API key level or through request headers.

Routing

Routing is the core function of AI Enabler. It lets Cast AI send each request to the model best suited to your application.

- Enable Routing: Turn this setting on to let AI Enabler route your requests to the optimal model based on complexity, performance, and cost. When it is off, requests are proxied only to the model specified in the request.

- How it works: When routing is enabled, Cast AI analyzes each request and sends it to the model that best balances quality, performance, and cost efficiency. For example, simple requests might go to more affordable models, while complex requests go to more capable models with stronger reasoning.

- API Header Override: This setting can be overridden on a per-request basis using the request header `X-Routing-Enabled: true/false`.

Prompt sharing

Prompt sharing allows Cast AI to store your prompts to improve routing accuracy and model recommendations.

- Enable Prompt Sharing: This setting is on by default. It lets Cast AI store your prompts to improve routing decisions and provide more accurate model recommendations.

- Privacy Considerations: When enabled, Cast AI stores your prompts to improve the routing algorithm. No personally identifiable information is extracted or stored separately. When disabled, Cast AI stores only request metadata.

- API Header Override: This setting can be overridden on a per-request basis using the request header `X-Prompt-Sharing-Enabled: true/false`.

Quality weight

Quality weight helps you balance cost efficiency and response quality by adjusting the routing algorithm's priorities.

Adjust the slider between Cost and Quality to set your preferred balance. Moving toward Cost prioritizes less expensive models, while moving toward Quality prioritizes models with higher capabilities.

The default setting is 0.5, representing an equal balance between cost and quality.

Organizations focused on minimizing costs might prefer settings closer to the Cost end, while those prioritizing response quality for complex tasks might prefer settings closer to the Quality end.

- API Header Override: This setting can be overridden on a per-request basis using the request header `X-Router-Quality-Weight: 0.0-1.0`, where `0.0` represents maximum cost efficiency and `1.0` represents maximum quality.

Settings hierarchy and override priority

Cast AI implements a hierarchical structure for AI Enabler settings, where more specific configurations override broader ones. Understanding this priority order is crucial for effectively managing your AI Enabler behavior:

- Request Headers (Highest Priority): Settings specified in request headers override everything else for that specific request

- API Key Settings (Medium Priority): Settings configured for a specific API key override organization settings for all requests using that key

- Organization Settings (Lowest Priority): These global settings serve as the default when no overrides are present

This hierarchy ensures that you can configure appropriate defaults at the organization level while maintaining the flexibility to customize behavior for specific API keys or individual requests as needed.
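The priority order can be sketched as a small resolver. This is a minimal illustration, not Cast AI's implementation. The setting names (`routing_enabled`, `prompt_sharing_enabled`, `quality_weight`) and the `resolve_settings` function are hypothetical. Each layer is modeled as a plain dictionary in which a missing or `None` value means "not set at this level". The resolver also returns which layer supplied each value, which helps when a setting doesn't behave as you expect.

```python
from typing import Any, Mapping, Optional

# Hypothetical setting names, used only for this illustration.
SETTING_KEYS = ("routing_enabled", "prompt_sharing_enabled", "quality_weight")


def resolve_settings(
    org: Mapping[str, Any],
    api_key: Optional[Mapping[str, Any]] = None,
    headers: Optional[Mapping[str, Any]] = None,
) -> dict:
    """Return {setting: (effective_value, source_layer)} using the documented
    priority: request headers > API key settings > organization settings."""
    # Layers listed from highest to lowest priority.
    layers = (
        ("request header", headers or {}),
        ("API key", api_key or {}),
        ("organization", org),
    )
    resolved = {}
    for key in SETTING_KEYS:
        for source, values in layers:
            # A layer wins only if it explicitly sets the value. False and 0.0
            # count as set; None or a missing key means "not set here".
            if values.get(key) is not None:
                resolved[key] = (values[key], source)
                break
    return resolved


if __name__ == "__main__":
    org = {"routing_enabled": True, "prompt_sharing_enabled": True, "quality_weight": 0.5}
    key = {"quality_weight": 0.8}    # per-key override
    hdr = {"routing_enabled": False}  # per-request header override
    for name, (value, source) in resolve_settings(org, key, hdr).items():
        print(f"{name} = {value!r} (from {source})")
```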

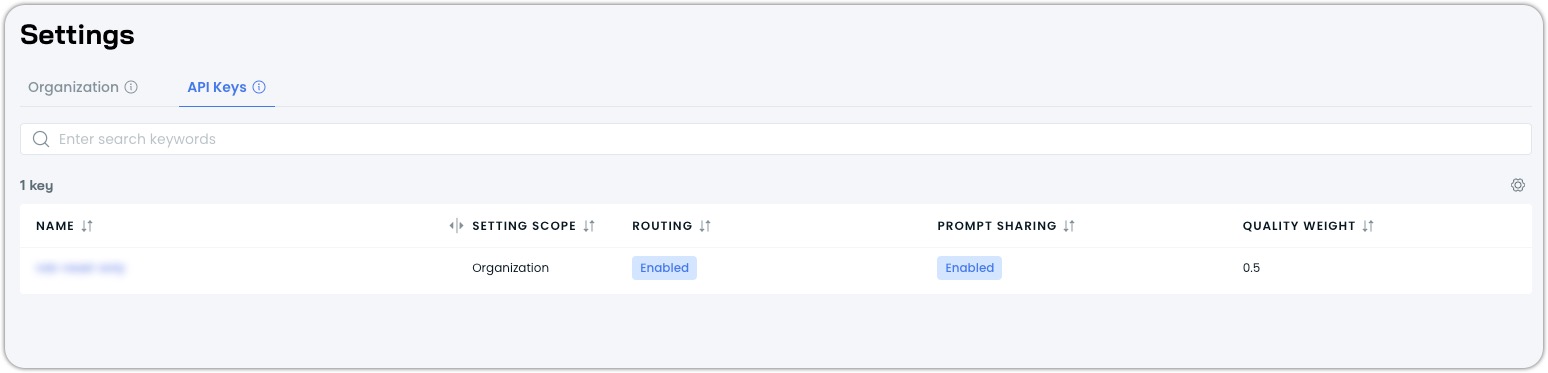

API key settings

The API Keys tab allows you to configure settings specific to individual API keys, which will override the organization-level settings for requests made with those keys.

The table displays all API keys in your organization and their current settings. Per-key settings always override organization settings regardless of the key's scope, but can still be overridden by request headers.

For more information on setting up users, access keys, and service accounts, see Role-Based Access Control.

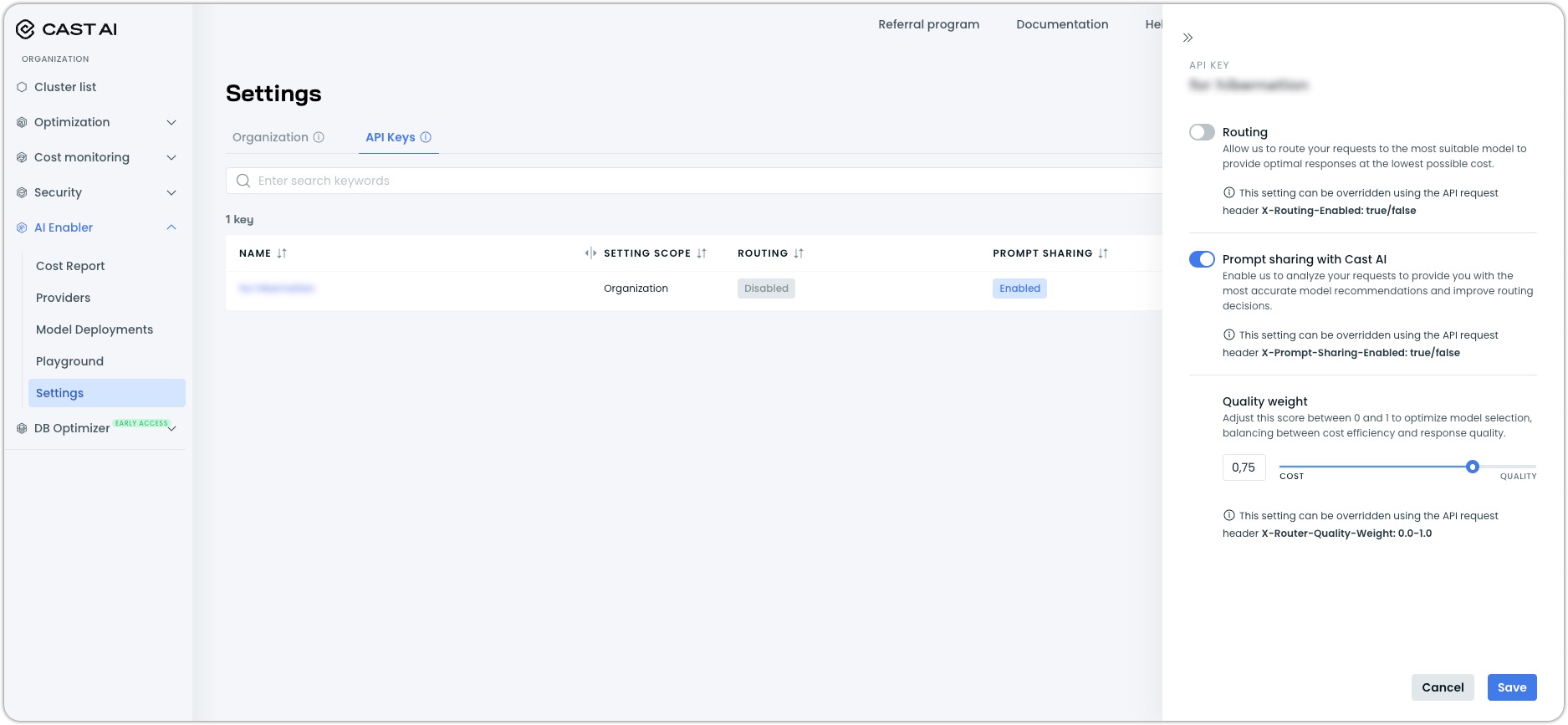

Managing API key settings

To adjust settings for a specific API key:

- Click on the API key name in the table

- A settings drawer will open, allowing you to modify settings for that particular key

- Make your desired changes to routing, prompt sharing, or quality weight

- Save your changes

These per-key settings will override the organization-level settings for any requests made using this specific API key, giving you fine-grained control over how different keys behave.

Service account token scopes

Service account tokens in Cast AI can be scoped at two levels: organization or cluster. For AI Enabler, the token scope affects which providers and models are accessible, and how usage is tracked.

Understanding token scopes

| Token scope | Description | Supported model types |
|---|---|---|
| Organization-scoped | Grants access to all AI Enabler resources across your organization. This is the default scope for service accounts and is required for SaaS provider models. | SaaS providers (OpenAI, Anthropic, etc.) and hosted models |
| Cluster-scoped | Restricts access to AI Enabler resources associated with a specific cluster. Use this for stricter environment separation, such as different tokens for staging versus production. | Hosted/self-hosted models only |

SaaS provider models (OpenAI, Anthropic, Google Gemini, Mistral, and others) always require organization-scoped tokens because they operate at the organization level. Cluster-scoped tokens are only supported for hosted model deployments running in your Kubernetes clusters.

Note: To create a cluster-scoped service account, navigate to Manage organization > Access control > Service Accounts and select specific cluster access when configuring the service account. See Creating service accounts for detailed instructions.

Behavior with cluster-scoped tokens

When you use a cluster-scoped token with AI Enabler, the following behaviors apply:

Provider filtering

Requests made with cluster-scoped tokens only have access to providers and models associated with that specific cluster. For hosted model deployments, this means:

- You only see hosted models deployed to that cluster

- Provider lists returned by the API are filtered to show cluster-relevant options

- Attempts to access models from other clusters will fail
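The scope rules can be modeled as a simple access check. This is a conceptual sketch under stated assumptions, not Cast AI's code. The `Model` and `Token` classes, the `"saas"`/`"hosted"` labels, and the `cluster_id` field are hypothetical. They only express the documented rules: SaaS provider models require an organization-scoped token, and a cluster-scoped token sees only hosted models on its own cluster.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Model:
    name: str
    kind: str                         # hypothetical label: "saas" or "hosted"
    cluster_id: Optional[str] = None  # set only for hosted models


@dataclass(frozen=True)
class Token:
    scope: str                        # "organization" or "cluster"
    cluster_id: Optional[str] = None  # set only for cluster-scoped tokens


def can_access(token: Token, model: Model) -> bool:
    if token.scope == "organization":
        # Organization-scoped tokens reach SaaS providers and hosted models.
        return True
    if token.scope == "cluster":
        # Cluster-scoped tokens reach only hosted models on their own cluster.
        # SaaS provider models always require an organization-scoped token.
        return model.kind == "hosted" and model.cluster_id == token.cluster_id
    raise ValueError(f"unknown token scope: {token.scope!r}")


def visible_models(token: Token, models: List[Model]) -> List[Model]:
    """Filter a model list the way provider listings are filtered per token."""
    return [m for m in models if can_access(token, m)]
```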

Usage tracking

The AI Enabler proxy correctly attributes usage to the appropriate cluster when using cluster-scoped tokens. This ensures accurate cost reporting and analytics per cluster.

Settings endpoint

The settings endpoint (/v1/llm/settings) works with both organization-scoped and cluster-scoped tokens. When using a cluster-scoped token:

- Settings queries return the configuration relevant to that cluster

- Settings updates apply to the cluster context
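The following is a minimal sketch of reading settings from `/v1/llm/settings` with Python's standard library. The base URL `https://api.cast.ai`, the `X-API-Key` authentication header, and the `CASTAI_API_KEY` environment variable are assumptions for illustration. Check the Cast AI API reference for the exact host, authentication scheme, and response format. The function builds the request without sending it, so you can inspect it first. Update requests are left out because their method and payload are not described in this guide.

```python
import os
import urllib.request

# Assumption: public Cast AI API base URL; confirm against the API reference.
API_BASE_URL = "https://api.cast.ai"
SETTINGS_PATH = "/v1/llm/settings"  # endpoint named in this guide


def build_settings_request(token: str, base_url: str = API_BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a GET request for AI Enabler settings.

    The token may be organization-scoped or cluster-scoped. With a
    cluster-scoped token, the response reflects that cluster's context.
    """
    return urllib.request.Request(
        base_url.rstrip("/") + SETTINGS_PATH,
        method="GET",
        # Assumption: the API key is passed in the X-API-Key header.
        headers={"X-API-Key": token, "Accept": "application/json"},
    )


if __name__ == "__main__":
    # CASTAI_API_KEY is a hypothetical environment variable name.
    req = build_settings_request(os.environ.get("CASTAI_API_KEY", "<your-token>"))
    print(req.get_method(), req.full_url)
    # To send it:
    # with urllib.request.urlopen(req) as resp:
    #     print(resp.read().decode())
```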

Fallback model behavior

When a hosted model has a SaaS provider configured as a fallback, the fallback continues to work even when using cluster-scoped tokens. This is because:

- The primary request uses your cluster-scoped token to access the hosted model

- If the hosted model is unavailable (hibernating, scaling, or erroring), the system routes to the fallback

- The SaaS fallback operates at the organization level, using the organization context associated with your cluster

This ensures service continuity without requiring separate organization-scoped tokens for fallback scenarios.
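The fallback sequence can be modeled conceptually as follows. This logic runs inside the AI Enabler proxy, not in your application, so you don't need to write anything like it. The sketch only makes the order of operations concrete. `ModelUnavailable`, `complete_with_fallback`, and the two backend callables are hypothetical names.

```python
from typing import Callable, Tuple


class ModelUnavailable(Exception):
    """Hypothetical signal that a hosted model is hibernating, scaling, or erroring."""


def complete_with_fallback(
    prompt: str,
    hosted: Callable[[str], str],
    saas_fallback: Callable[[str], str],
) -> Tuple[str, str]:
    """Try the hosted model first and route to the SaaS fallback on failure.

    Returns (response, backend_used).
    """
    try:
        # Primary path: the cluster-scoped token authorizes the hosted model.
        return hosted(prompt), "hosted"
    except ModelUnavailable:
        # Fallback path: runs in the organization context tied to the cluster,
        # so the caller needs no separate organization-scoped token.
        return saas_fallback(prompt), "saas-fallback"
```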

Note: Fallback routing to SaaS providers is transparent to your application. The cluster-scoped token handles authentication, and the system manages the fallback routing internally.

API Reference

For developers looking to override settings programmatically, here are the available request headers:

| Header | Type | Values | Description |
|---|---|---|---|
| `X-Routing-Enabled` | Boolean | `true`/`false` | Override the routing setting for a single request. |
| `X-Prompt-Sharing-Enabled` | Boolean | `true`/`false` | Override the prompt sharing setting for a single request. |
| `X-Router-Quality-Weight` | Float | `0.0` to `1.0` | Override quality weight for a single request. |
| `X-Provider-Name` | String | Provider name | Route the request to a specific registered provider. The provider must belong to your Cast AI organization. |

Remember that header overrides have the highest priority and will take precedence over both API key settings and organization settings for the specific request.
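The helper below is a minimal Python sketch that assembles these headers. It sends only the overrides you set explicitly, so everything else falls through to the API key and organization settings. The function name is hypothetical. The header names and value ranges come from the reference table in this section.

```python
from typing import Dict, Optional


def build_override_headers(
    routing_enabled: Optional[bool] = None,
    prompt_sharing_enabled: Optional[bool] = None,
    quality_weight: Optional[float] = None,
    provider_name: Optional[str] = None,
) -> Dict[str, str]:
    """Return only the override headers that were explicitly requested.

    Arguments left as None are omitted, so those settings fall back to the
    API key or organization level.
    """
    headers: Dict[str, str] = {}
    if routing_enabled is not None:
        headers["X-Routing-Enabled"] = "true" if routing_enabled else "false"
    if prompt_sharing_enabled is not None:
        headers["X-Prompt-Sharing-Enabled"] = "true" if prompt_sharing_enabled else "false"
    if quality_weight is not None:
        # 0.0 = maximum cost efficiency, 1.0 = maximum quality.
        if not 0.0 <= quality_weight <= 1.0:
            raise ValueError("quality_weight must be between 0.0 and 1.0")
        headers["X-Router-Quality-Weight"] = str(quality_weight)
    if provider_name is not None:
        # Must name a provider registered in your Cast AI organization.
        headers["X-Provider-Name"] = provider_name
    return headers
```

You can merge the returned dictionary into the headers your HTTP client already sends to the AI Enabler proxy. For example, `build_override_headers(routing_enabled=False)` keeps a single request on the model it names. Omitting unset headers, rather than sending defaults, keeps per-key and organization settings in effect for everything you don't override.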

Troubleshooting

Settings changes not taking effect

Check the following:

- Look for request headers that might be overriding your settings

- Verify that the API key you're using doesn't have per-key settings that override the values you expect

- Verify that the API key you're using is scoped to the correct resource (cluster or organization)

- Allow a few minutes for changes to propagate

Remember the priority order: Headers → API Key Settings → Organization Settings. A higher-priority setting will always override a lower-priority one.

For additional assistance with AI Enabler, contact Cast AI support or visit our community Slack channel.
