Cast AI components troubleshooting

This guide provides solutions for resolving specific issues with Cast AI components.

Upgrading the agent

For clusters onboarded via console

To check the version of the agent running on your cluster, use the following command:

kubectl describe pod castai-agent -n castai-agent | grep castai-hub/library/agent:v

You can cross-check our GitHub repository for the number of the latest version available.
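If you would rather read the version from the deployment spec than from the describe output, the following is a minimal sketch. It assumes the deployment is named castai-agent and its main container is named agent, as in the example manifest later in this guide. The function names image_tag and agent_image are illustrative and not part of any Cast AI tooling.

```shell
# Print the tag portion of a container image reference (text after the last ':').
image_tag() {
  printf '%s\n' "${1##*:}"
}

# Print the image of the "agent" container in the castai-agent deployment.
# Assumes kubectl is already configured for the target cluster.
agent_image() {
  kubectl get deployment castai-agent -n castai-agent \
    -o jsonpath='{.spec.template.spec.containers[?(@.name=="agent")].image}'
}
```

With kubectl pointed at the cluster, `image_tag "$(agent_image)"` prints only the tag. The helper assumes the image reference ends in a tag; a reference pinned by digest would need different handling.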

To upgrade the Cast AI agent version, please perform the following:

- Go to Connect cluster.

- Select the correct cloud service provider.

- Run the provided script.

If the upgrade fails with an error such as MatchExpressions:[]v1.LabelSelectorRequirement(nil)}: field is immutable, run kubectl delete deployment -n castai-agent castai-agent and then run the provided script again.

The latest version of the Cast AI agent is now deployed in your cluster.

For clusters onboarded via Terraform

By default, Terraform modules do not specify the castai-agent Helm chart version. As a result, the latest available castai-agent Helm chart is installed when the cluster is onboarded, but re-running Terraform does not upgrade the agent when new versions are released.

We are working on a permanent solution. In the meantime, a short-term fix is to set a specific agent version in the Terraform variable agent-version and reapply the Terraform plan. For valid values of the castai-agent Helm chart version, see the releases of Cast AI helm-charts.

Deleted agent

If you delete the Cast AI agent deployment from the cluster, you can reinstall it by rerunning the script from the Connect cluster screen. Please ensure you choose the correct cloud service provider.
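After rerunning the script, you may want to confirm that the deployment has come back. This is a minimal sketch, assuming the deployment keeps the name castai-agent in the castai-agent namespace; the function name wait_for_agent and the 120s default timeout are illustrative choices.

```shell
# Wait until the castai-agent deployment reports a completed rollout.
# $1 (optional) is the timeout passed to kubectl; 120s is an arbitrary default.
wait_for_agent() {
  kubectl rollout status deployment/castai-agent -n castai-agent \
    --timeout="${1:-120s}"
}
```

Calling `wait_for_agent 60s` returns a non-zero status if the rollout has not finished within a minute.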

Cluster controller is receiving a forbidden access error

In some scenarios, such as multiple onboarding attempts or failed updates, the cluster token used by the cluster controller can be invalidated. The cluster controller is then forbidden from accessing the Cast AI API and can no longer operate the cluster.

To renew it, you should run the following Helm commands:

helm repo update

helm upgrade -i cluster-controller castai-helm/castai-cluster-controller -n castai-agent \
  --set castai.apiKey=$CASTAI_API_TOKEN \
  --set castai.clusterID=<your-cluster-id>

Your cluster does not appear on the Connect Cluster screen

If the cluster does not appear on the Connect your cluster screen after you've run the connection script, perform the following steps:

1. Check agent container logs:

```shell
kubectl logs -n castai-agent -l app.kubernetes.io/name=castai-agent -c agent
```

2. You might get output similar to this:

```text
time="2021-05-06T14:24:03Z" level=fatal msg="agent failed: registering cluster: getting cluster name: describing instance_id=i-026b5fadab5b69d67: UnauthorizedOperation: You are not authorized to perform this operation.\n\tstatus code: 403, request id: 2165c357-b4a6-4f30-9266-a51f4aaa7ce7"
```

or

```text
time="2021-05-06T14:24:03Z" level=fatal msg="agent failed: getting provider: configuring aws client: NoCredentialProviders: no valid providers in chain"
```

or

```text
time="2023-08-18T18:44:49Z" level=error msg="agent failed: getting provider: configuring aws client: getting instance region: EC2MetadataRequestError: failed to get EC2 instance identity document\ncaused by: RequestError: send request failed\ncaused by: Get \"http://169.254.169.254/latest/dynamic/instance-identity/document\": context deadline exceeded (Client.Timeout exceeded while awaiting headers)"
```

These errors indicate that the Cast AI agent failed to connect to the AWS API. A likely reason is that your cluster's nodes or workloads have custom-constrained IAM permissions, or that the IAM roles have been removed entirely.

However, the Cast AI agent requires read-only access to the AWS EC2 API to correctly identify some properties of your EKS cluster. Access to the AWS EC2 Metadata endpoint is optional, but the variables discovered from the endpoint must be provided.
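To tell the three log messages shown above apart quickly, a small filter can help. This is a minimal sketch that matches only the error names appearing in those examples; the function name classify_agent_errors and the hint wording are illustrative, and the hints are a reading of the errors rather than official diagnostics.

```shell
# Read agent log lines on stdin and print a short hint for each known AWS access error.
classify_agent_errors() {
  while IFS= read -r line; do
    case "$line" in
      *UnauthorizedOperation*)
        echo "iam-permissions: credentials were found but lack EC2 read permissions" ;;
      *NoCredentialProviders*)
        echo "no-credentials: the AWS SDK found no credentials in its provider chain" ;;
      *EC2MetadataRequestError*)
        echo "metadata-unreachable: the EC2 metadata endpoint could not be reached" ;;
    esac
  done
}
```

For example, `kubectl logs -n castai-agent -l app.kubernetes.io/name=castai-agent -c agent | classify_agent_errors` prints one hint per matching log line and nothing for unrelated lines.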

The Cast AI agent uses the official AWS SDK, which supports all variables for customizing authentication, as mentioned in its documentation.

Provide cluster metadata by adding these environment variables to the Cast AI agent deployment:

- name: EKS_ACCOUNT_ID
  value: "000000000000" # your AWS account ID
- name: EKS_REGION
  value: "eu-central-1" # your EKS cluster region
- name: EKS_CLUSTER_NAME
  value: "staging-example" # your EKS cluster name

If you're instead using GCP GKE, you can provide the following environment variables to overcome the lack of access to VM metadata:

- name: GKE_PROJECT_ID
  value: your_project_id
- name: GKE_CLUSTER_NAME
  value: your_cluster_name
- name: GKE_REGION
  value: your_cluster_region
- name: GKE_LOCATION
  value: your_cluster_az

On AWS, the Cast AI agent requires read-only permissions, so the default AmazonEC2ReadOnlyAccess policy is sufficient. Provide AWS API access by adding these variables to the Cast AI agent secret:

AWS_ACCESS_KEY_ID = xxxxxxxxxxxxxxxxxxxx
AWS_SECRET_ACCESS_KEY = xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

Here is an example of a Cast AI agent deployment and secret with all the mentioned environment variables:

# Source: castai-agent/templates/deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: castai-agent
  namespace: castai-agent
  labels:
    app.kubernetes.io/name: castai-agent
    app.kubernetes.io/instance: castai-agent
    app.kubernetes.io/version: "v0.23.0"
    app.kubernetes.io/managed-by: castai
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: castai-agent
      app.kubernetes.io/instance: castai-agent
  template:
    metadata:
      labels:
        app.kubernetes.io/name: castai-agent
        app.kubernetes.io/instance: castai-agent
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: castai-agent
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: "kubernetes.io/os"
                    operator: In
                    values: [ "linux" ]
              - matchExpressions:
                  - key: "beta.kubernetes.io/os"
                    operator: In
                    values: [ "linux" ]
      containers:
        - name: agent
          image: "us-docker.pkg.dev/castai-hub/library/agent:v0.24.0"
          imagePullPolicy: IfNotPresent
          env:
            - name: API_URL
              value: "api.cast.ai"
            - name: PPROF_PORT
              value: "6060"
            - name: PROVIDER
              value: "eks"
            # Provide values discovered via AWS EC2 Metadata endpoint:
            - name: EKS_ACCOUNT_ID
              value: "000000000000"
            - name: EKS_REGION
              value: "eu-central-1"
            - name: EKS_CLUSTER_NAME
              value: "castai-example"
          envFrom:
            - secretRef:
                name: castai-agent
          resources:
            requests:
              cpu: 100m
            limits:
              cpu: 1000m
        - name: autoscaler
          image: k8s.gcr.io/cpvpa-amd64:v0.8.3
          command:
            - /cpvpa
            - --target=deployment/castai-agent
            - --namespace=castai-agent
            - --poll-period-seconds=300
            - --config-file=/etc/config/castai-agent-autoscaler
          volumeMounts:
            - mountPath: /etc/config
              name: autoscaler-config
      volumes:
        - name: autoscaler-config
          configMap:
            name: castai-agent-autoscaler

# Source: castai-agent/templates/secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: castai-agent
  namespace: castai-agent
  labels:
    app.kubernetes.io/instance: castai-agent
    app.kubernetes.io/managed-by: castai
    app.kubernetes.io/name: castai-agent
    app.kubernetes.io/version: "v0.23.0"
data:
  # Keep API_KEY unchanged.
  API_KEY: "xxxxxxxxxxxxxxxxxxxx"
  # Provide an AWS Access Key to enable read-only AWS EC2 API access:
  AWS_ACCESS_KEY_ID: "xxxxxxxxxxxxxxxxxxxx"
  AWS_SECRET_ACCESS_KEY: "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"

Alternatively, if you use IAM roles for service accounts, you can annotate the castai-agent service account with your IAM role instead of providing AWS credentials.

kubectl annotate serviceaccount -n castai-agent castai-agent eks.amazonaws.com/role-arn="arn:aws:iam::111122223333:role/iam-role-name"

Spot nodes show as On-demand in the cluster's Available Savings page

See this section.

Disconnected or Not responding cluster

If the cluster has a Not responding status, most likely the Cast AI agent deployment is missing. Press Reconnect and follow the instructions provided.
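Before reconnecting, you can confirm whether the agent deployment is actually missing or simply has no ready pods. The sketch below assumes the default deployment name castai-agent in the castai-agent namespace; both function names are illustrative.

```shell
# Succeed when the given availableReplicas value is a whole number >= 1.
has_available_replica() {
  case "${1:-}" in
    ''|*[!0-9]*) return 1 ;;   # empty or non-numeric: treat as unavailable
  esac
  [ "$1" -ge 1 ]
}

# Print the number of available agent replicas.
# Prints nothing if the deployment is missing or no replica is available.
agent_available_replicas() {
  kubectl get deployment castai-agent -n castai-agent \
    -o jsonpath='{.status.availableReplicas}' 2>/dev/null
}
```

For example, `has_available_replica "$(agent_available_replicas)" || echo "agent is not running"` reports a missing or unavailable agent.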

The Not responding state is temporary, and unless fixed, the cluster will enter the Disconnected state. If your cluster is disconnected, you can reconnect or delete it from the console, as shown below.

The delete action only removes the cluster from the Cast AI console, leaving it running in the cloud service provider.

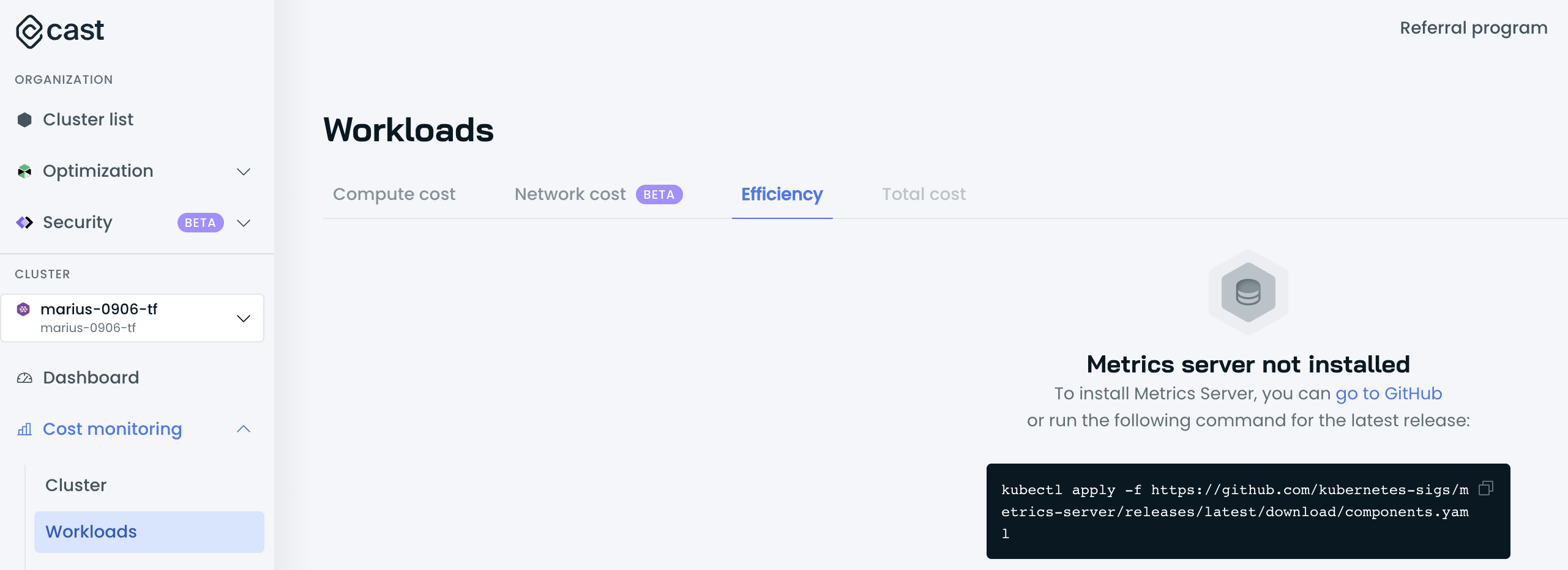

Cannot access the Workloads Efficiency tab

The Cast AI agent may be unable to discover and poll metrics from the Metrics Server.

Validate whether the Metrics Server is running and accessible by running the following command:

kubectl get deploy,svc -n kube-system | grep metrics-server

If Metrics Server is installed, the output is similar to the following example:

deployment.extensions/metrics-server   1/1   1   1   3d4h
service/metrics-server   ClusterIP   198.51.100.0   <none>   443/TCP   3d4h

If the Metrics Server is not running, follow the installation process here.

If the Metrics Server is running, verify that the Metrics Server is returning data for all pods by issuing the following command:

kubectl get --raw "/apis/metrics.k8s.io/v1beta1/pods"

The output should be similar to the one below:

{"kind":"PodMetricsList","apiVersion":"metrics.k8s.io/v1beta1","metadata":{},
"items":[
{"metadata":{"name":"castai-agent-84d48f88c9-44tr8","namespace":"castai-agent","creationTimestamp":"2023-03-15T12:28:12Z","labels":{"app.kubernetes.io/instance":"castai-agent","app.kubernetes.io/name":"castai-agent","pod-template-hash":"84d48f88c9"}},"timestamp":"2023-03-15T12:27:36Z","window":"27s","containers":[{"name":"agent","usage":{"cpu":"5619204n","memory":"75820Ki"}}]}
]}

If no output or erroneous output is returned, review the configuration of your Metrics Server and/or reinstall it.
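The check on the raw metrics response can be scripted. This is a rough sketch that uses plain text matching rather than a JSON parser, so it assumes the compact formatting shown in the example output; the function name has_pod_metrics is illustrative.

```shell
# Succeed if stdin looks like a PodMetricsList containing at least one usage sample.
# This is a text-match heuristic on compact JSON, not a full JSON validation.
has_pod_metrics() {
  body=$(cat)
  printf '%s' "$body" | grep -qF '"kind":"PodMetricsList"' &&
    printf '%s' "$body" | grep -qF '"usage":{'
}
```

For example, `kubectl get --raw "/apis/metrics.k8s.io/v1beta1/pods" | has_pod_metrics && echo "metrics available"` prints the message only when the response contains pod usage data.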

If those checks passed, look for any unavailable APIs with the following command:

kubectl get apiservices

The output should be similar to the following example:

NAME                              SERVICE   AVAILABLE   AGE
v1.                               Local     True        383d
v1.acme.cert-manager.io           Local     True        161d
v1.admissionregistration.k8s.io   Local     True        383d
v1.apiextensions.k8s.io           Local     True        383d
v1.apps                           Local     True        383d
v1.authentication.k8s.io          Local     True        383d
v1.authorization.k8s.io           Local     True        383d
v1.autoscaling                    Local     True        383d
v1.batch                          Local     True        383d

All API services must show True in the AVAILABLE column. Any API service that shows False must be repaired if one of your workloads still uses it. If it is no longer in use, the API service can be deleted.
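On clusters with many API services, it can be easier to list only the ones that are not available. The filter below is a minimal sketch that assumes the default four-column output shown above; the function name unavailable_apiservices is illustrative.

```shell
# Read `kubectl get apiservices` output on stdin and print the names of
# services whose AVAILABLE column is anything other than True.
unavailable_apiservices() {
  awk 'NR > 1 && $3 != "True" { print $1 }'
}
```

For example, `kubectl get apiservices | unavailable_apiservices` prints nothing when every API service is available.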

If everything looks good, but you still cannot access the Workloads Efficiency tab, please contact our support.
