Scaling policies

Scaling policies enable centralized management of vertical workload autoscaling across your Kubernetes workloads. These policies define how Cast AI's Workload Autoscaler should optimize CPU and memory resource requests for your pods, allowing you to apply consistent optimization strategies across multiple workloads simultaneously.

When you enable Workload Autoscaler, all workloads are automatically profiled and assigned to appropriate system scaling policies based on their labels, types, and namespaces. This intelligent assignment ensures immediate optimization coverage without manual intervention, making onboarding faster and simpler.

How scaling policies work

Vertical scaling policies control how the Workload Autoscaler analyzes resource usage patterns and applies optimization recommendations to your workloads. Each policy defines:

- Resource optimization targets – Which percentiles of historical usage to target for CPU and memory recommendations

- Automation behavior – Whether to automatically apply recommendations or wait for manual approval

- Scaling modes – When to apply changes (immediately with pod restarts or during natural restart events)

- Safety constraints – Minimum and maximum resource limits to prevent over- or under-provisioning

- Container exclusions – Which specific containers to exclude from automatic optimization

- Timing settings – How much historical data to consider and when to trigger optimizations

These policies ensure your workloads receive appropriate resource allocations based on their actual usage patterns, leading to improved cost efficiency and performance.
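As a rough illustration of how a percentile target and safety constraints might combine into a single recommendation, consider the sketch below. It is not Cast AI's actual algorithm: the `ResourceRule` type, its field names, and the nearest-rank percentile method are all assumptions made for this example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ResourceRule:
    """Hypothetical per-resource policy settings (names are illustrative)."""
    percentile: int   # e.g. 80 targets the 80th percentile of observed usage
    minimum: int      # lower safety constraint, in millicores or MiB
    maximum: int      # upper safety constraint, in the same unit


def usage_percentile(samples: list[int], q: int) -> int:
    """Return the nearest-rank q-th percentile of a non-empty sample list."""
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


def recommend(samples: list[int], rule: ResourceRule) -> int:
    """Pick the percentile target, then clamp it to the policy's min/max."""
    target = usage_percentile(samples, rule.percentile)
    return min(max(target, rule.minimum), rule.maximum)


# Ten CPU usage samples in millicores: 100, 200, ..., 1000.
cpu_samples = list(range(100, 1100, 100))
unclamped = recommend(cpu_samples, ResourceRule(percentile=80, minimum=250, maximum=2000))
clamped = recommend(cpu_samples, ResourceRule(percentile=80, minimum=250, maximum=500))
```

In this sketch the 80th percentile of the samples is 800 millicores, so the first rule yields 800, while the second rule's maximum caps the result at 500.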

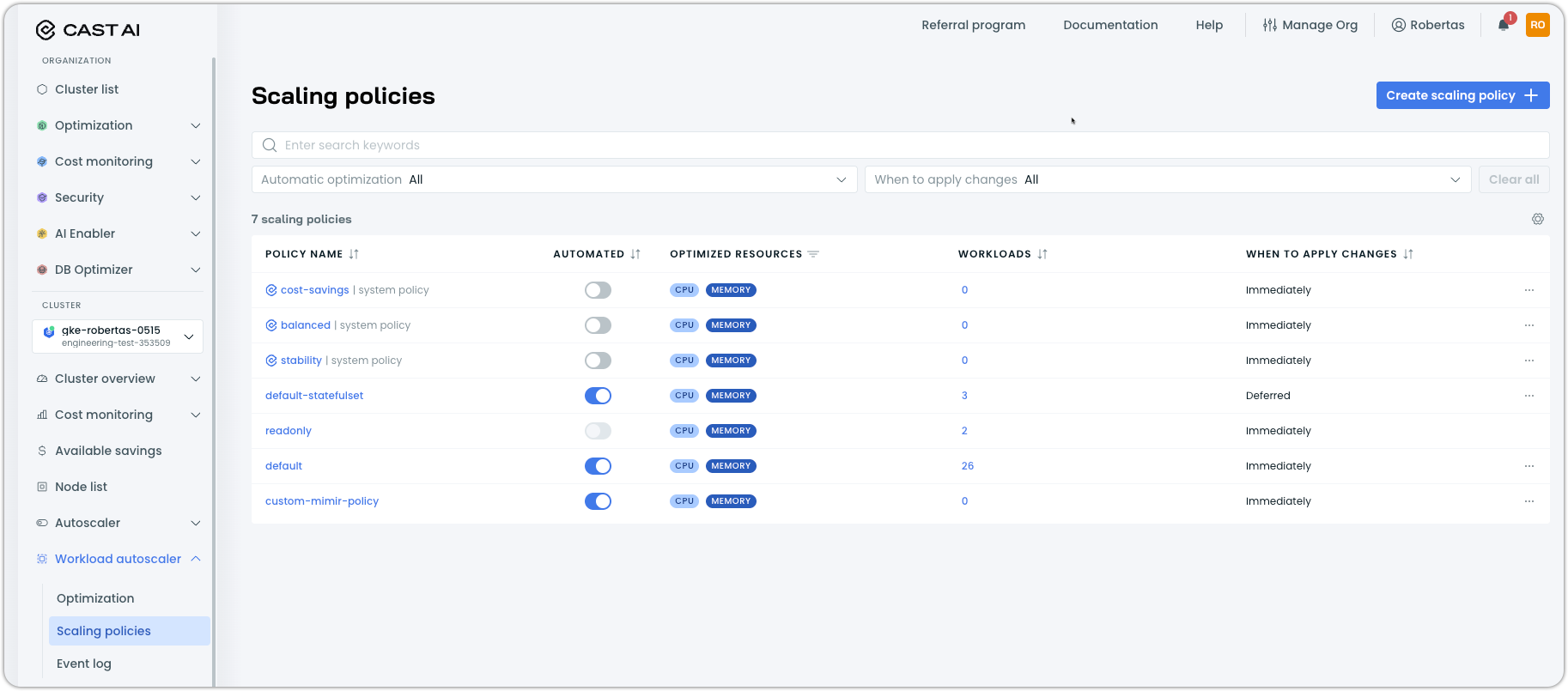

Accessing scaling policies

To access and manage your scaling policies:

- Select your cluster in the Cast AI console

- From the left-hand menu, navigate to Workload Autoscaler

- Select Scaling policies

This dedicated scaling policies page provides a comprehensive view of all your policies, including system policies and custom policies, along with their automation status, optimized resources, associated workloads, and when changes to resource requests will be applied.

System policies

Cast AI provides predefined system policies designed for common optimization scenarios. These policies are pre-configured with settings based on Cast AI's extensive experience with Kubernetes workload optimization:

- resiliency: StatefulSets are assigned to this policy by default

- balanced: Provides an optimal balance between cost optimization and stability/performance

- cost-savings: Maximizes cost efficiency while maintaining acceptable performance

- stability: Prioritizes consistent workload performance with minimal disruption

- burstable: Designed to add compute power when needed, then scale back down to save costs; well suited for Jobs, CronJobs, or other job-like workloads

- readonly: Reserved for Cast AI components (cannot be modified)

- default (deprecated): The standard configuration applied to new workloads

- default-statefulset (deprecated): Specialized configuration optimized for StatefulSet workloads

Note

The default and default-statefulset policies are now deprecated. While they may still appear in clusters onboarded prior to July 18th, 2025, newly onboarded clusters rely on the automatic profiling system to assign workloads to more appropriate system policies.

While system policies cannot be directly modified, you can duplicate any system policy (except readonly) to create a fully customizable version with the same starting configuration. This provides an excellent foundation for creating policies tailored to your specific requirements.

Automatic workload profiling

Cast AI automatically profiles all workloads and assigns them to the most appropriate system scaling policies based on:

- Workload labels - Application tier, environment, and other identifying labels

- Workload types - Deployment, StatefulSet, DaemonSet, CronJob, etc.

- Namespaces - System namespaces, application namespaces, and naming patterns

This intelligent profiling happens instantly when the cluster is onboarded, ensuring optimal policy assignment from the start. The only remaining step is to enable automation on the policies to begin applying recommendations.
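The profiling rules themselves are not published on this page, but the assignments it does mention can be sketched as a simple lookup. The example below is hypothetical: the `Workload` type, the `castai-agent` namespace check, and the `balanced` fallback are assumptions, and label-based rules are left out because this page does not describe them.

```python
from dataclasses import dataclass

# Assumption: Cast AI components run in this namespace.
CAST_AI_NAMESPACE = "castai-agent"


@dataclass(frozen=True)
class Workload:
    """Minimal, hypothetical view of a workload used for profiling."""
    name: str
    kind: str        # e.g. "Deployment", "StatefulSet", "CronJob"
    namespace: str


def assign_system_policy(workload: Workload) -> str:
    """Map a workload to a system policy name using the documented defaults."""
    if workload.namespace == CAST_AI_NAMESPACE:
        return "readonly"      # reserved for Cast AI components
    if workload.kind == "StatefulSet":
        return "resiliency"    # StatefulSets are assigned here by default
    if workload.kind in {"Job", "CronJob"}:
        return "burstable"     # suited to job-like workloads
    return "balanced"          # assumption: fallback for everything else


policy = assign_system_policy(Workload("orders-db", "StatefulSet", "prod"))
```

Here `policy` is `"resiliency"`, matching the default for StatefulSets listed under system policies.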

Enabling scaling behavior

You can enable vertical scaling for your workloads in two ways:

Globally via the scaling policy: Enable "Automatically optimize workloads" in the policy settings. This enables scaling only for workloads with sufficient historical data. Workloads without enough data are monitored and automatically enabled once adequate usage patterns are established.

Directly from individual workloads: Enable optimization on specific workloads through the workload interface. Once enabled, autoscaling begins immediately based on the scaling mode configured in the associated policy.

For clusters with automatic workload profiling, workloads are already assigned to appropriate policies, so you only need to enable automation to begin optimization.

Gradual scaling for new clusters

For clusters onboarded within the last 24 hours, workloads enabled via scaling policies automatically follow gradual scaling logic with progressive optimization limits. See Mark of recommendation confidence.
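The enablement rules in this section can be summarized as two small predicates. This is a sketch of the documented behavior only: the `WorkloadState` type and both function names are hypothetical and do not correspond to any Cast AI API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class WorkloadState:
    """Hypothetical snapshot of a workload's optimization inputs."""
    has_sufficient_history: bool
    enabled_directly: bool = False   # toggled on the individual workload


def is_optimized(policy_automation_enabled: bool, state: WorkloadState) -> bool:
    """Direct enablement applies at once; policy enablement waits for data."""
    if state.enabled_directly:
        return True
    return policy_automation_enabled and state.has_sufficient_history


def uses_gradual_scaling(onboarded_at: datetime, now: datetime,
                         enabled_via_policy: bool) -> bool:
    """Gradual scaling applies to policy-enabled workloads in the first 24 hours."""
    return enabled_via_policy and (now - onboarded_at) < timedelta(hours=24)


now = datetime(2025, 8, 1, 12, 0, tzinfo=timezone.utc)
new_cluster = uses_gradual_scaling(now - timedelta(hours=3), now, enabled_via_policy=True)
old_cluster = uses_gradual_scaling(now - timedelta(hours=48), now, enabled_via_policy=True)
```

A workload without enough history stays unoptimized under policy-level automation until data accumulates, whereas enabling it directly makes `is_optimized` return `True` regardless of the policy toggle.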

Next steps

To get started with scaling policies:

- Create scaling policies - Learn how to create custom policies with specific optimization settings for your workloads

- Manage scaling policies - Discover how to apply policies to workloads, manage policy assignments, and maintain your optimization strategy

- Workload autoscaling configuration - Explore detailed configuration options and advanced settings for fine-tuning your optimization approach

By effectively using scaling policies, you can streamline workload management and ensure consistent optimization across your cluster while maintaining the flexibility to customize settings for specific use cases.
