Cast AI Operator

Automated lifecycle management for Cast AI components

The Cast AI Operator (castware-operator) automates the installation, configuration, and updating of Cast AI components in your Kubernetes cluster. The Operator reduces manual effort by managing component lifecycles through a single, self-updating control plane component.

What the Operator manages

The Cast AI Operator manages the following components:

Phase 1 components:

- castai-agent: Cluster monitoring and data collection

- spot-handler: Spot Instance interruption monitoring and reporting

Phase 2 components:

cluster-controller: Executes cluster management operations and Container Live Migration

Additional components, such as evictor, pod-mutator, and pod-pinner, will be managed by the Operator in future releases.

The Operator automatically:

- Installs the latest versions of managed components

- Applies updates through the Cast AI console with a single click

- Self-upgrades when new Operator versions are released

- Migrates existing component installations when the Operator is added to a cluster

Architecture

The Operator runs as a single pod in the castai-agent namespace and uses minimal resources:

- Memory: Typically less than 256 MB

- CPU: Typically less than 0.5 CPU

```shell
kubectl get pods -n castai-agent | grep -i operator
```

```
castware-operator-8667b6744d-kmqnn   1/1     Running   0          10m
```

The Operator uses Kubernetes Custom Resources (CRs) to manage configuration:

- Cluster CR: Global configuration shared across components (API URLs, cloud provider, cluster metadata)

- Component CRs: Individual component configuration (version, Helm values, enablement status)
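To make the split between the two resource kinds concrete, here is a minimal Python sketch that models them as plain data classes. The class and field names (ClusterSpec, ComponentSpec, api_url, helm_values, and so on) are hypothetical and only mirror the fields listed above; they are not the actual CRD schema, which you can inspect with kubectl get crd clusters.castware.cast.ai -o yaml.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterSpec:
    """Hypothetical model of the global, shared settings in a Cluster CR."""
    api_url: str
    provider: str  # e.g. "eks", "gke", or "aks"
    metadata: dict[str, str] = field(default_factory=dict)


@dataclass
class ComponentSpec:
    """Hypothetical model of the per-component settings in a Component CR."""
    name: str
    version: str
    enabled: bool = True
    helm_values: dict[str, object] = field(default_factory=dict)


def effective_config(cluster: ClusterSpec, component: ComponentSpec) -> dict[str, object]:
    """Combine shared cluster settings with one component's own settings.

    Illustrates the split only: shared values live once in the Cluster CR,
    while each Component CR carries just what is specific to that component.
    """
    return {
        "apiUrl": cluster.api_url,
        "provider": cluster.provider,
        "component": component.name,
        "version": component.version,
        "enabled": component.enabled,
        **component.helm_values,
    }


cluster = ClusterSpec(api_url="https://api.cast.ai", provider="eks")
agent = ComponentSpec(name="castai-agent", version="<version>")
print(effective_config(cluster, agent)["apiUrl"])  # https://api.cast.ai
```

Keeping shared values in one Cluster CR means a change such as a new API URL is made once rather than in every Component CR.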

Permissions

The Cast AI Operator requires Kubernetes permissions to install and manage Cast AI components. The Operator's permissions are structured in two levels:

Phase 1 permissions: Required to install and manage Phase 1 components (castai-agent and spot-handler). These permissions include:

- Read-only cluster-scoped permissions to generate cost and optimization recommendations

- Write permissions within the castai-agent namespace to create and manage component resources

- DaemonSet management permissions to deploy and update spot-handler

- Permissions to install and update the castai-agent and spot-handler components

Extended permissions (Phase 2): Additional permissions required to install and manage components that modify cluster behavior. When you enable automation (Phase 2), the Operator receives extended permissions that allow it to:

- Install and manage cluster-controller for cluster operations and Container Live Migration

- Create, update, and delete cluster-scoped resources required by Phase 2 components

- Manage additional Phase 2 components as they become Operator-supported

The extended permissions are requested through the extendedPermissions:true flag in the Phase 2 onboarding script.

The Operator follows Kubernetes privilege escalation prevention mechanisms: it can only create roles and bindings with permissions it already has, preventing unauthorized privilege escalation.
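Kubernetes RBAC enforces this by letting a subject create or update a Role or ClusterRole only if it already holds every permission that role grants (unless it has the escalate verb on roles), and create a binding only if it holds the referenced role's permissions (unless it has the bind verb). The Python sketch below models only the first check in simplified form: rules are flattened to (API group, resource, verb) triples with "*" wildcards, and resourceNames, subresources, namespace scoping, and the escalate/bind verbs are ignored. The names Rule and can_create_role and the example rules are hypothetical, not the Operator's actual permissions.

```python
from typing import NamedTuple


class Rule(NamedTuple):
    """One flattened RBAC permission: (API group, resource, verb)."""
    api_group: str
    resource: str
    verb: str


def _matches(held: str, wanted: str) -> bool:
    # "*" in a held rule covers any value, mirroring RBAC wildcard semantics.
    return held == "*" or held == wanted


def covered(held: set[Rule], wanted: Rule) -> bool:
    """True if some held rule grants the wanted permission."""
    return any(
        _matches(h.api_group, wanted.api_group)
        and _matches(h.resource, wanted.resource)
        and _matches(h.verb, wanted.verb)
        for h in held
    )


def can_create_role(held: set[Rule], requested: set[Rule]) -> bool:
    """Simplified escalation check: every requested rule must already be held."""
    return all(covered(held, r) for r in requested)


operator_rules = {
    Rule("apps", "deployments", "get"),
    Rule("apps", "deployments", "list"),
    Rule("", "pods", "*"),
}
print(can_create_role(operator_rules, {Rule("", "pods", "delete")}))  # True
print(can_create_role(operator_rules, {Rule("", "secrets", "get")}))  # False
```

This is why the Phase 2 extended permissions have to be granted explicitly: the Operator cannot hand itself or its components more than it was given.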

For a detailed breakdown of specific permissions, see the Operator permissions reference.

Installation

New cluster onboarding

Phase 1 (read-only mode)

For new clusters onboarded via the Cast AI Console, the Operator is installed automatically during Phase 1 onboarding:

- The onboarding script installs castware-operator

- The Operator pod restarts once after generating certificates (this is expected behavior)

- The Operator then installs the latest versions of castai-agent and spot-handler

- The cluster remains in Connecting... status until all components are successfully installed:

```shell
kubectl get pods -n castai-agent -w
```

```
NAME                                 READY   STATUS    RESTARTS   AGE
castware-operator-8667b6744d-kmqnn   1/1     Running   0          93s
castai-agent-74989f5596-knfrs        2/2     Running   0          38s
castai-agent-74989f5596-vnqvk        2/2     Running   0          38s
castai-agent-cpvpa-964fc94b6-n5dkq   1/1     Running   0          38s
castai-spot-handler-xk7pm            1/1     Running   0          38s
```

Note: Some onboarding journeys (such as AI Enabler or Cloud Connect) do not include the Operator by default. In these cases, components are installed using the traditional method, but you can enable the Operator later via Component Control.

Existing clusters

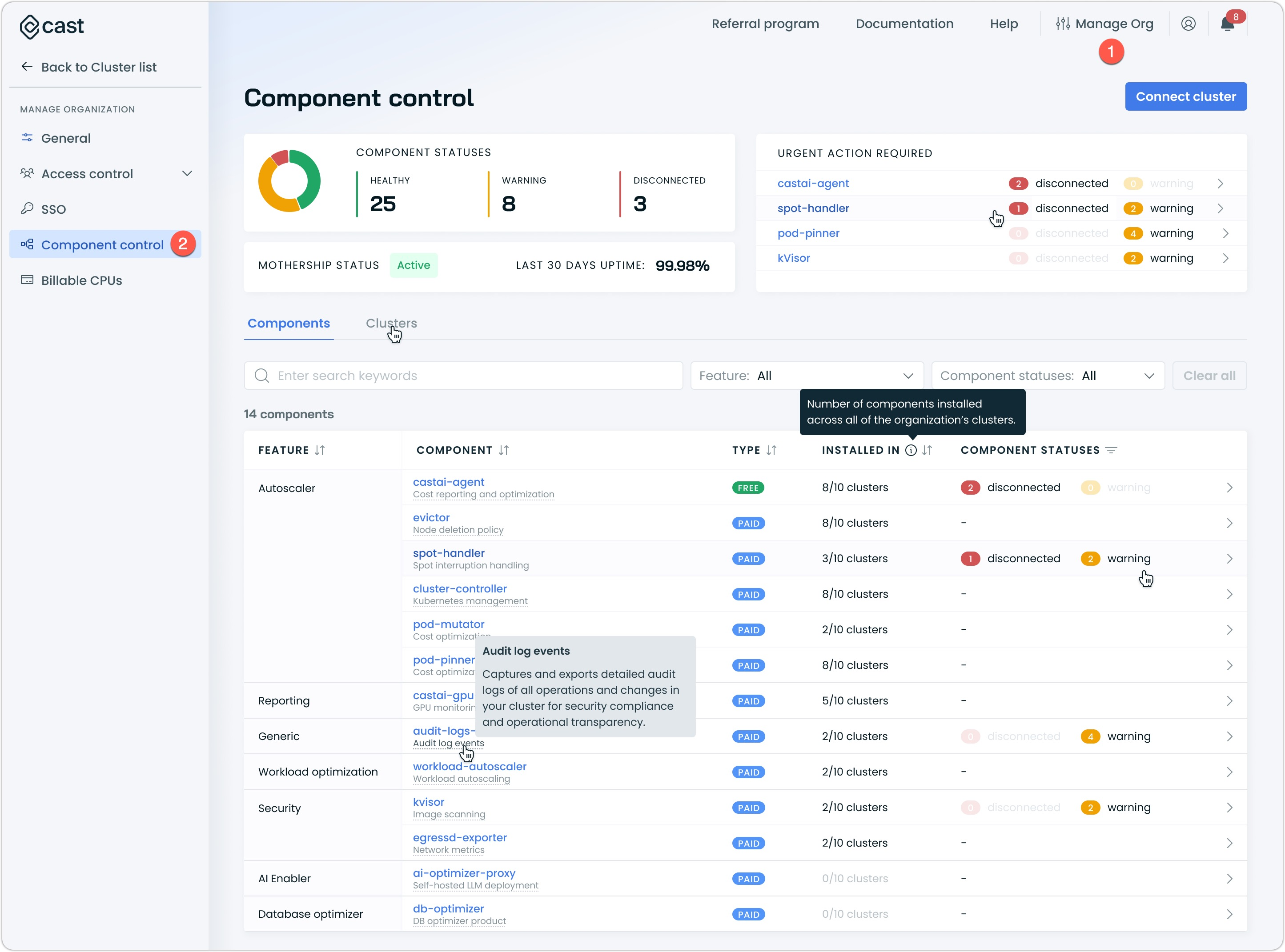

For clusters already onboarded to Cast AI, you can enable the Operator in three ways:

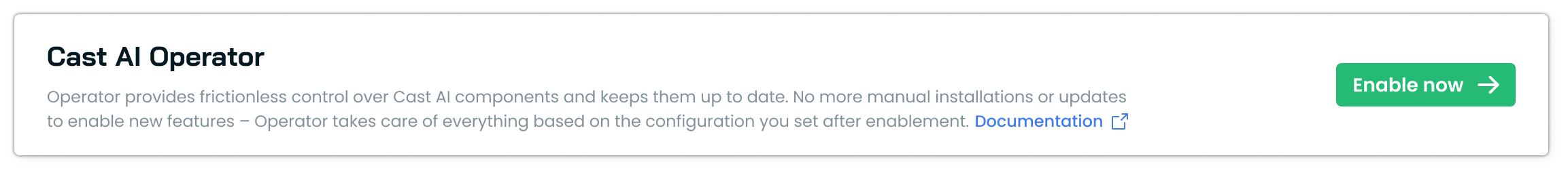

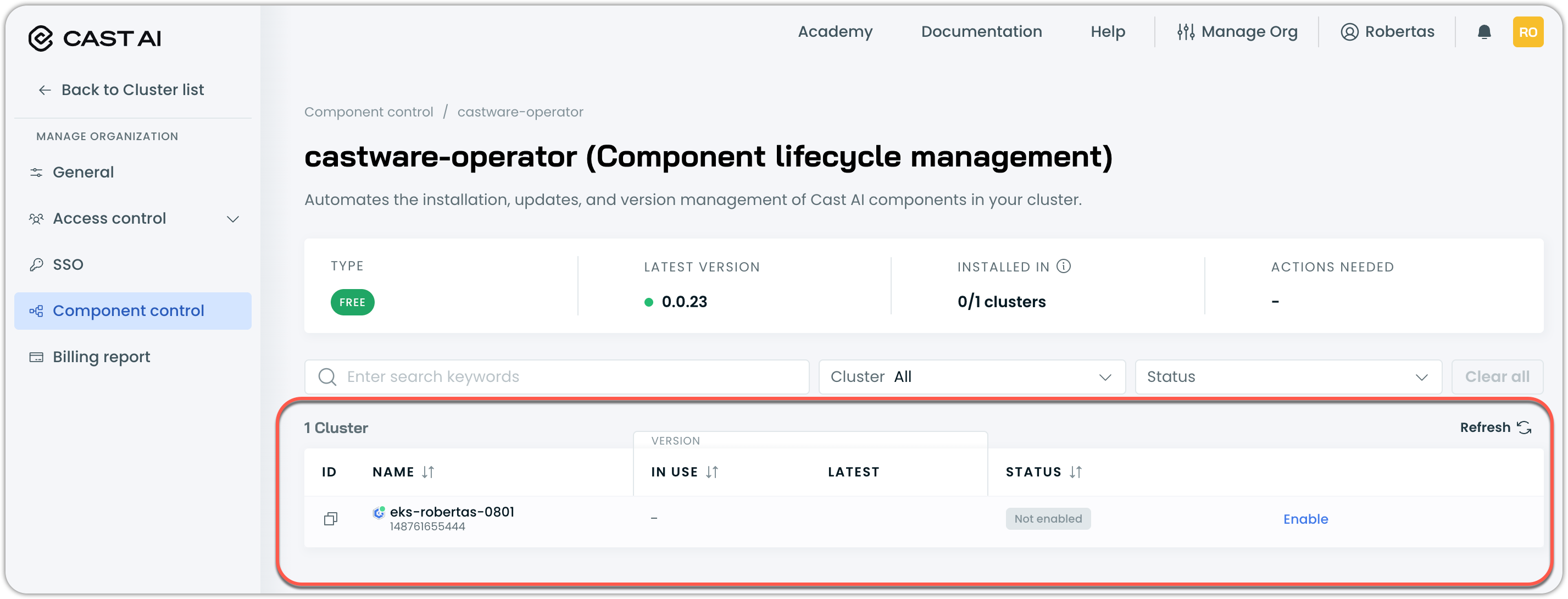

Option 1: Component Control (recommended)

- In the Cast AI console, select Manage Organization in the top right

- Navigate to Component Control in the left menu

- Click Enable Now → in the Operator widget

- Select the cluster where you want to install the Operator and click Enable

- Copy and run the provided installation script

The console-provided Helm script handles cluster registration and automatic migration of existing castai-agent installations.

Option 2: Manual installation via Helm

For manual installation or automation scenarios:

```shell
helm repo add castai-helm https://castai.github.io/helm-charts
helm repo update castai-helm

helm upgrade --install castware-operator castai-helm/castware-operator \
  --namespace castai-agent \
  --create-namespace \
  --set apiKeySecret.apiKey="<your-api-key>" \
  --set defaultCluster.provider="<eks|gke|aks>" \
  --set defaultCluster.api.apiUrl="https://api.cast.ai" \
  --set defaultComponents.enabled=false \
  --set defaultCluster.terraform=false \
  --atomic \
  --wait
```

Parameters:

- apiKeySecret.apiKey: Your Cast AI Full Access API key (required)

- defaultCluster.provider: Cloud provider, one of eks, gke, or aks (required)

- defaultCluster.api.apiUrl: Cast AI API endpoint (use https://api.eu.cast.ai for the EU region)

- defaultComponents.enabled: Automatically install castai-agent after Operator installation (defaults to true; set to false for existing clusters that already have castai-agent installed)

- defaultCluster.terraform: Set to false when installing via Helm or the console (defaults to true; set to true only for Terraform-based installations)

- defaultCluster.migrationMode: How existing components are migrated: write (migrate and manage), autoUpgrade (migrate, manage, and upgrade), or read (detect only, no management). Defaults to write.
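If you script this installation, for example in CI, a small helper can assemble the documented flags and reject values outside the documented sets before Helm runs. This is a sketch: the function name operator_install_args and its region shortcut are hypothetical, while the flag names and allowed values come from the parameter list above. It assumes the castai-helm repository has already been added.

```python
VALID_PROVIDERS = {"eks", "gke", "aks"}
VALID_MIGRATION_MODES = {"write", "autoUpgrade", "read"}
API_URLS = {"us": "https://api.cast.ai", "eu": "https://api.eu.cast.ai"}


def operator_install_args(
    api_key: str,
    provider: str,
    region: str = "us",
    install_default_components: bool = False,
    migration_mode: str = "write",
) -> list[str]:
    """Build a helm upgrade --install argument list for castware-operator.

    Hypothetical helper: it only assembles the documented flags and
    validates their allowed values; it does not run Helm.
    """
    if provider not in VALID_PROVIDERS:
        raise ValueError(f"provider must be one of {sorted(VALID_PROVIDERS)}")
    if migration_mode not in VALID_MIGRATION_MODES:
        raise ValueError(f"migrationMode must be one of {sorted(VALID_MIGRATION_MODES)}")
    settings = {
        "apiKeySecret.apiKey": api_key,
        "defaultCluster.provider": provider,
        "defaultCluster.api.apiUrl": API_URLS[region],
        "defaultComponents.enabled": str(install_default_components).lower(),
        "defaultCluster.terraform": "false",  # Helm/console install, not Terraform
        "defaultCluster.migrationMode": migration_mode,
    }
    args = [
        "helm", "upgrade", "--install",
        "castware-operator", "castai-helm/castware-operator",
        "--namespace", "castai-agent", "--create-namespace",
    ]
    for key, value in settings.items():
        args += ["--set", f"{key}={value}"]
    return args + ["--atomic", "--wait"]


args = operator_install_args("<your-api-key>", "eks", region="eu")
print(" ".join(args))
```

Passing the resulting list to subprocess.run(args, check=True) avoids shell quoting issues, since no shell parses the arguments.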

Migrating existing castai-agent installations:

When you install the Operator on a cluster with an existing castai-agent, the Operator automatically detects and migrates the agent based on the migrationMode setting:

- write (default): Migrates existing components to Operator management, preserving current versions

- autoUpgrade: Migrates components and immediately upgrades them to the latest versions

- read: Detects existing components without taking over management (read-only monitoring)
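The practical difference between the three modes comes down to two questions: does the Operator take over management, and which version runs afterwards? The Python sketch below encodes the descriptions above as a small lookup. The enum and function names are hypothetical, and version strings are placeholders; it illustrates the documented semantics, not the Operator's code.

```python
from enum import Enum


class MigrationMode(Enum):
    """The three documented values of defaultCluster.migrationMode."""
    WRITE = "write"
    AUTO_UPGRADE = "autoUpgrade"
    READ = "read"


def migration_outcome(mode: MigrationMode, current_version: str, latest_version: str) -> dict[str, object]:
    """Describe what happens to an existing component under each mode.

    "managed" says whether the Operator takes over lifecycle management;
    "version" is the version the component runs right after migration.
    """
    if mode is MigrationMode.READ:
        # Detect only: no takeover, nothing changes.
        return {"managed": False, "version": current_version}
    if mode is MigrationMode.WRITE:
        # Take over management but keep the running version.
        return {"managed": True, "version": current_version}
    # AUTO_UPGRADE: take over and move straight to the latest version.
    return {"managed": True, "version": latest_version}


# Helm values arrive as strings, so parse the mode by value.
mode = MigrationMode("autoUpgrade")
print(mode.name, migration_outcome(mode, current_version="<current>", latest_version="<latest>"))
```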

The Operator handles both installation types:

- Helm-installed agents: Creates a Component CR matching the current version and takes over management

- YAML manifest agents: Extracts configuration, creates a Component CR, and converts to Helm-managed

The migration process:

- Detects the existing castai-agent deployment

- Extracts configuration (environment variables, resource settings, version)

- Creates a Component CR with detected configuration

- Updates the agent's API key secret reference to use the Operator-managed secret

- Takes over lifecycle management
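The migration steps above can be sketched in Python over a Deployment object parsed from kubectl get deployment castai-agent -n castai-agent -o json. Several assumptions are made for illustration: Helm installs are recognized by the conventional app.kubernetes.io/managed-by: Helm label, the version is taken from the image tag, and only secret references pointing at the old API key secret are repointed. The Component CR field names (version, installType, env, resources) and both secret names are hypothetical and do not reflect the real CRD schema.

```python
def detect_install_type(deployment: dict) -> str:
    """Classify an existing Deployment as Helm- or YAML-installed (assumed heuristic)."""
    labels = (deployment.get("metadata") or {}).get("labels") or {}
    return "helm" if labels.get("app.kubernetes.io/managed-by") == "Helm" else "yaml"


def image_tag(image: str) -> str:
    """Return the tag of an image reference, or "latest" if none is set.

    Digest-pinned references (image@sha256:...) are not handled here.
    """
    last_segment = image.rsplit("/", 1)[-1]
    return last_segment.rsplit(":", 1)[1] if ":" in last_segment else "latest"


def build_component_cr(deployment: dict, old_secret: str, operator_secret: str) -> dict:
    """Build a hypothetical Component CR body from an existing agent Deployment."""
    container = deployment["spec"]["template"]["spec"]["containers"][0]
    env = []
    for var in container.get("env", []):
        ref = (var.get("valueFrom") or {}).get("secretKeyRef")
        if ref is not None and ref.get("name") == old_secret:
            # Repoint the API key reference at the Operator-managed secret.
            var = {"name": var["name"],
                   "valueFrom": {"secretKeyRef": {**ref, "name": operator_secret}}}
        env.append(var)
    return {
        "kind": "Component",
        "metadata": {"name": "castai-agent", "namespace": "castai-agent"},
        "spec": {
            "version": image_tag(container["image"]),
            "installType": detect_install_type(deployment),
            "env": env,
            "resources": container.get("resources", {}),
        },
    }
```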

Migration impact: During migration, the agent may restart once, causing one or two cluster snapshots to be skipped. This is normal and does not affect cluster functionality.

Option 3: During castai-agent update

When updating castai-agent, the console prompts you to install the Operator:

- Open the castai-agent update drawer

- Copy the update script (Operator installation is included by default)

- Run the script to install the Operator, which in turn upgrades castai-agent

Opt-out option: The update drawer includes an option to opt out of Operator installation if needed.

Terraform installation

Starting from version 0.0.26, the Cast AI Operator can be onboarded and managed via Terraform.

Required permissions for Terraform installations

When installing the Operator via Terraform, the castware-components chart (which contains the Custom Resources that the Operator uses) requires a RoleBinding for a pre-installation job. This job verifies that the Operator deployment and at least one pod are running before creating Custom Resources (CRs). The RoleBinding grants these permissions:

```yaml
- apiGroups: ["apps"]
  resources: ["deployments"]
  verbs: ["get", "list"]
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["get", "list", "watch"]
```

For the complete RBAC configuration, see the Operator templates in GitHub: https://github.com/castai/castware-operator/blob/main/charts/castai-castware-components/templates/check-operator-job-rbac.yaml
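The check that this pre-installation job performs can be sketched in Python as follows. It works on parsed output of kubectl get deployments -o json and kubectl get pods -o json, which needs only the get and list verbs granted above. The function names, the name-prefix pod matching, and the timeout values are assumptions for illustration; the actual job's logic lives in the chart templates linked above.

```python
import time
from typing import Callable


def operator_ready(deployments: dict, pods: dict, name: str = "castware-operator") -> bool:
    """Return True if the named Deployment exists and at least one of its pods is Running.

    deployments and pods are parsed kubectl "-o json" List objects. Matching
    pods by name prefix is a simplification; a real check might use the
    Deployment's label selector instead.
    """
    if not any(d["metadata"]["name"] == name for d in deployments.get("items", [])):
        return False
    return any(
        p["metadata"]["name"].startswith(name + "-")
        and p.get("status", {}).get("phase") == "Running"
        for p in pods.get("items", [])
    )


def wait_for_operator(check: Callable[[], bool], timeout_s: float = 300.0,
                      interval_s: float = 5.0) -> bool:
    """Poll check() until it returns True or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if check():
            return True
        time.sleep(interval_s)
    return False
```

Gating CR creation on this check means the Custom Resources are only created once the Operator is running and able to handle them.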

Configuration and examples

For configuration details and up-to-date examples, see the Cast AI Terraform Provider repository: https://github.com/castai/terraform-provider-castai/tree/master/examples/castware-operator

Configuring cluster metadata when IMDS is unavailable

The Cast AI Operator requires access to cloud provider metadata to identify cluster properties. By default, the Operator uses the cloud provider's Instance Metadata Service (IMDS). However, in environments where IMDS is restricted or unavailable (such as private networks, air-gapped clusters, or security-hardened environments), you must provide cluster metadata through environment variables.

When to use this configuration

Configure environment variables when:

- IMDS endpoints are blocked by network policies or firewall rules

- Running in environments without access to cloud provider metadata services

- Security policies prevent metadata service access

- The Operator fails with region detection or account identification errors
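The following Python sketch shows the idea of supplying metadata explicitly when IMDS cannot be reached. This page does not state how the Operator combines the two sources, so the sketch assumes that environment variables take precedence and that startup fails with a clear error when a value is missing. The function name and the imds_lookup callback are hypothetical; the variable names are the EKS ones documented below.

```python
from typing import Callable, Mapping, Optional

EKS_METADATA_VARS = ("EKS_ACCOUNT_ID", "EKS_REGION", "EKS_CLUSTER_NAME")


def resolve_eks_metadata(
    env: Mapping[str, str],
    imds_lookup: Optional[Callable[[str], Optional[str]]] = None,
) -> dict[str, str]:
    """Resolve EKS cluster metadata, preferring explicit environment variables.

    imds_lookup is a hypothetical stand-in for a cloud metadata query;
    pass None to model a cluster where IMDS is blocked.
    """
    resolved: dict[str, str] = {}
    missing: list[str] = []
    for var in EKS_METADATA_VARS:
        value = env.get(var) or (imds_lookup(var) if imds_lookup else None)
        if value:
            resolved[var] = value
        else:
            missing.append(var)
    if missing:
        raise RuntimeError("cluster metadata unavailable; set " + ", ".join(missing))
    return resolved


# IMDS blocked: every value must come from the environment (for example, additionalEnv).
example_env = {
    "EKS_ACCOUNT_ID": "<your-aws-account-id>",
    "EKS_REGION": "<your-eks-region>",
    "EKS_CLUSTER_NAME": "<your-cluster-name>",
}
print(resolve_eks_metadata(example_env))
```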

Required environment variables by cloud provider

```yaml
- name: EKS_ACCOUNT_ID
  value: "<your-aws-account-id>" # your AWS account ID
- name: EKS_REGION
  value: "<your-eks-region>" # your EKS cluster region
- name: EKS_CLUSTER_NAME
  value: "<your-cluster-name>" # your EKS cluster name
```

Configuration methods

Using Helm values file

Create a values.yaml file with the required environment variables for your cloud provider:

```yaml
# values.yaml
additionalEnv:
  EKS_ACCOUNT_ID: "<your-aws-account-id>"
  EKS_REGION: "<your-eks-region>"
  EKS_CLUSTER_NAME: "<your-cluster-name>"
```

Install or upgrade the Operator with the values file:

```shell
helm upgrade --install castware-operator castai-helm/castware-operator \
  --namespace castai-agent \
  --create-namespace \
  --set apiKeySecret.apiKey="<your-api-key>" \
  --set defaultCluster.provider="eks" \
  --set defaultCluster.api.apiUrl="https://api.cast.ai" \
  -f values.yaml
```

Using inline Helm parameters

```shell
helm upgrade --install castware-operator castai-helm/castware-operator \
  --namespace castai-agent \
  --create-namespace \
  --set apiKeySecret.apiKey="<your-api-key>" \
  --set defaultCluster.provider="eks" \
  --set defaultCluster.api.apiUrl="https://api.cast.ai" \
  --set additionalEnv.EKS_ACCOUNT_ID="<your-aws-account-id>" \
  --set additionalEnv.EKS_REGION="<your-eks-region>" \
  --set additionalEnv.EKS_CLUSTER_NAME="<your-cluster-name>"
```

Updating existing Operator installations

If the Operator is already installed and experiencing IMDS-related failures, update the deployment:

```shell
helm upgrade castware-operator castai-helm/castware-operator \
  --namespace castai-agent \
  --reuse-values \
  --set additionalEnv.EKS_ACCOUNT_ID="<your-aws-account-id>" \
  --set additionalEnv.EKS_REGION="<your-eks-region>" \
  --set additionalEnv.EKS_CLUSTER_NAME="<your-cluster-name>"
```

Verifying the configuration

After applying the environment variables, verify the Operator is running correctly:

```shell
kubectl get pods -n castai-agent -l app.kubernetes.io/name=castware-operator
```

Check the Operator logs for successful startup without metadata-related errors:

```shell
kubectl logs -n castai-agent -l app.kubernetes.io/name=castware-operator
```

Updating components

Semi-automated updates

Once the Operator is installed, you can update both the Operator and castai-agent through the Cast AI console without running additional scripts.

Updating the Operator

- Navigate to Component Control

- Open the Cast AI Operator widget

- Click Update when a new version is available

- The Operator performs a self-upgrade

Updating castai-agent

- Navigate to Component Control

- Locate castai-agent in the component list

- Click Update

- The Operator handles the upgrade process automatically

Best practice: Always update both the Operator and managed components to the latest verified versions to ensure compatibility and access to the newest features.

Automatic CRD upgrades

Starting with version 0.0.26, the Operator automatically upgrades Custom Resource Definitions (CRDs) during installation and upgrades. This ensures that CRD schemas stay synchronized with the Operator version.

How it works

The Operator uses a Helm pre-install/pre-upgrade hook to update CRDs before the main Operator deployment starts. This process:

- Creates a dedicated ServiceAccount with minimal CRD management permissions

- Runs a temporary job that applies updated CRD definitions for clusters.castware.cast.ai and components.castware.cast.ai

- Completes the upgrade and cleans up automatically

The CRD upgrade job uses a separate ServiceAccount with permissions scoped only to CRD management, following the principle of least privilege. The entire process completes in seconds during normal operation.

Expected behavior on first upgrade

When upgrading to version 0.0.26+ from an earlier version, you may see this warning in the upgrade job logs:

```
Warning: resource customresourcedefinitions/clusters.castware.cast.ai is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply.
```

This warning is normal and expected. It appears because:

- The CRDs were originally installed via Helm's crds/ directory

- Helm doesn't add the last-applied-configuration annotation

- The upgrade job adds this annotation automatically

- Future upgrades won't show this warning

As long as you see "configured" for both CRDs and "CRDs applied successfully", the upgrade worked correctly.
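If you check upgrade results in a script, these success criteria translate into a simple text check over the upgrade job's log output. The sketch assumes the log contains kubectl apply's usual lines of the form customresourcedefinition.apiextensions.k8s.io/<name> configured, plus the "CRDs applied successfully" message quoted above; the function name is hypothetical.

```python
CRD_NAMES = ("clusters.castware.cast.ai", "components.castware.cast.ai")


def crd_upgrade_succeeded(job_log: str) -> bool:
    """Check CRD upgrade job output for the documented success signals.

    Requires a "configured" line for each CRD and the final
    "CRDs applied successfully" message. Warning lines about the
    last-applied-configuration annotation are ignored, since they are
    expected on the first upgrade.
    """
    lines = job_log.splitlines()
    configured = all(
        any(f"/{crd} configured" in line for line in lines) for crd in CRD_NAMES
    )
    return configured and "CRDs applied successfully" in job_log
```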

Future capabilities

The Operator will soon manage additional Cast AI components. Some enhancements may require additional permissions:

- When clicking Update for the Operator, you may be prompted to run a short script to grant required permissions

- These permissions enable the Operator to handle installation and upgrades for other Cast AI components

See Kubernetes permissions for more information.

Removing the Operator

Before you remove

Removing the Operator stops automated component management. After removal:

- castai-agent and other managed components continue running normally

- You'll need to use manual Helm commands or console scripts to update components

- Custom Resources (CRs) and Custom Resource Definitions (CRDs) are removed from the cluster

Uninstall via Helm

To completely remove the Operator and its associated resources:

```shell
# Uninstall the Operator
helm uninstall castware-operator -n castai-agent
```

Important: Uninstalling the Operator does not remove castai-agent or other Cast AI components. These components continue operating independently. If you want to fully remove all Cast AI components, see the cluster disconnection documentation.

Console behavior after removal

After manually uninstalling the Operator:

- The console shows the Operator as enabled until the next sync cycle (~15 minutes)

- Component update prompts may still reference the Operator temporarily

- Use manual Helm commands, Terraform, or console-provided scripts to manage components

Note: UI-based Operator disconnection and re-enablement is planned for future releases.

Troubleshooting

Cluster stuck in Connecting status

Symptom: Cluster remains in Connecting... status for more than 10 minutes after running the onboarding script.

Cause: The Operator may have installed successfully, but castai-agent failed to deploy.

Solution:

- Check the status of both the Operator and the agent:

```shell
kubectl get pods -n castai-agent
```

- Check Operator logs for errors:

```shell
kubectl logs -l app.kubernetes.io/name=castware-operator -n castai-agent
```

- If castai-agent is not running, check the Component CR status:

```shell
kubectl get component castai-agent -n castai-agent -o yaml
```

- If the status doesn't change after 10 minutes, try the Enable button in Component Control; if that fails, rerun the installation script

- If the issue persists, contact Cast AI Customer Success
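When onboarding is scripted, the first check can be automated by parsing kubectl get pods -n castai-agent -o json instead of reading the table by eye. The following Python sketch flags pods that are not Running or that have containers not yet ready. The function name is hypothetical; items, metadata.name, status.phase, and status.containerStatuses are standard Pod fields. Completed job pods (phase Succeeded) are also reported, so ignore those if they belong to finished jobs.

```python
def unhealthy_pods(pod_list: dict) -> list[str]:
    """List pods from a kubectl "get pods -o json" result that are not healthy.

    A pod counts as healthy when its phase is Running and every container
    reports ready; anything else is returned with a short reason.
    """
    problems = []
    for pod in pod_list.get("items", []):
        name = pod["metadata"]["name"]
        status = pod.get("status", {})
        phase = status.get("phase", "Unknown")
        not_ready = [c["name"] for c in status.get("containerStatuses", [])
                     if not c.get("ready", False)]
        if phase != "Running":
            problems.append(f"{name}: phase {phase}")
        elif not_ready:
            problems.append(f"{name}: containers not ready: {', '.join(not_ready)}")
    return problems
```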

Component updates failing

Symptom: Clicking Update for a component results in an error, or the update doesn't complete.

Cause: The component may require additional permissions that the Operator doesn't have, or there may be a configuration conflict.

Solution:

- Check the Component CR status for error messages:

```shell
kubectl get component <component-name> -n castai-agent -o yaml
```

- Review Operator logs during the update attempt:

```shell
kubectl logs -l app.kubernetes.io/name=castware-operator -n castai-agent --tail=50
```

- If permission errors appear, the Operator may need additional RBAC permissions. Contact Cast AI Customer Success

Migration from existing agent not working

Symptom: After installing the Operator, the existing castai-agent is not migrated automatically.

Possible causes:

- The agent deployment doesn't have the required labels or annotations (due to modifications)

- The agent was installed using a non-standard method

- The Operator doesn't have sufficient permissions to modify the existing deployment

Solution:

- Check if the agent has Helm labels:

```shell
kubectl get deployment castai-agent -n castai-agent -o jsonpath='{.metadata.labels}'
```

- Check if a Component CR was created:

```shell
kubectl get component castai-agent -n castai-agent
```

- If migration fails repeatedly, contact Cast AI Customer Success with the agent deployment details
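The label check in the first step can be scripted as well. This sketch assumes your kubectl version prints the label map from the jsonpath query as JSON, and it treats the conventional app.kubernetes.io/managed-by: Helm label as the sign of a Helm install; the Operator's actual detection criteria are not documented here, and the function name is hypothetical.

```python
import json

HELM_MANAGED_LABEL = "app.kubernetes.io/managed-by"


def looks_helm_managed(labels_json: str) -> bool:
    """Interpret the output of:
    kubectl get deployment castai-agent -n castai-agent -o jsonpath='{.metadata.labels}'

    Returns True when the conventional Helm label is present. Empty output
    (no labels at all) is treated as not Helm-managed.
    """
    if not labels_json.strip():
        return False
    labels = json.loads(labels_json)
    return labels.get(HELM_MANAGED_LABEL) == "Helm"
```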

Failed Operator uninstall

Symptom: Running helm uninstall castware-operator fails or hangs, preventing removal of the Operator.

Cause: Helm hooks may be blocking the uninstall process, or there may be finalizers preventing resource deletion.

Solution:

- Uninstall without running Helm hooks:

```shell
helm -n castai-agent uninstall castware-operator --no-hooks
```

- Manually remove Custom Resources:

```shell
kubectl delete clusters.castware.cast.ai --all -n castai-agent
kubectl delete components.castware.cast.ai --all -n castai-agent
```

- Remove Custom Resource Definitions:

```shell
kubectl delete crd clusters.castware.cast.ai
kubectl delete crd components.castware.cast.ai
```

- Verify all resources are removed:

```shell
kubectl get all -n castai-agent | grep castware-operator
```

CRD upgrade job failing

Cause: The dedicated CRD upgrade ServiceAccount lacks necessary permissions, or the ClusterRole/ClusterRoleBinding wasn't created properly.

Solution:

- Verify the CRD upgrade ServiceAccount exists:

```shell
kubectl get serviceaccount castware-operator-crd-upgrade -n castai-agent
```

- Verify the CRD upgrade ClusterRole exists and has correct permissions:

```shell
kubectl get clusterrole castware-operator-crd-upgrade -o yaml
```

- Check the ClusterRoleBinding:

```shell
kubectl get clusterrolebinding castware-operator-crd-upgrade -o yaml
```

- If any resources are missing or incorrect, reinstall the Operator, which recreates these resources:

```shell
helm upgrade --install castware-operator castai-helm/castware-operator \
  --namespace castai-agent \
  --set apiKeySecret.apiKey="<your-api-key>"
```

Related documentation

- Component Control: View and manage all Cast AI components

- Helm Charts: Manual component installation using Helm

- Cluster Onboarding: Connect new clusters to Cast AI

Updated 2 months ago