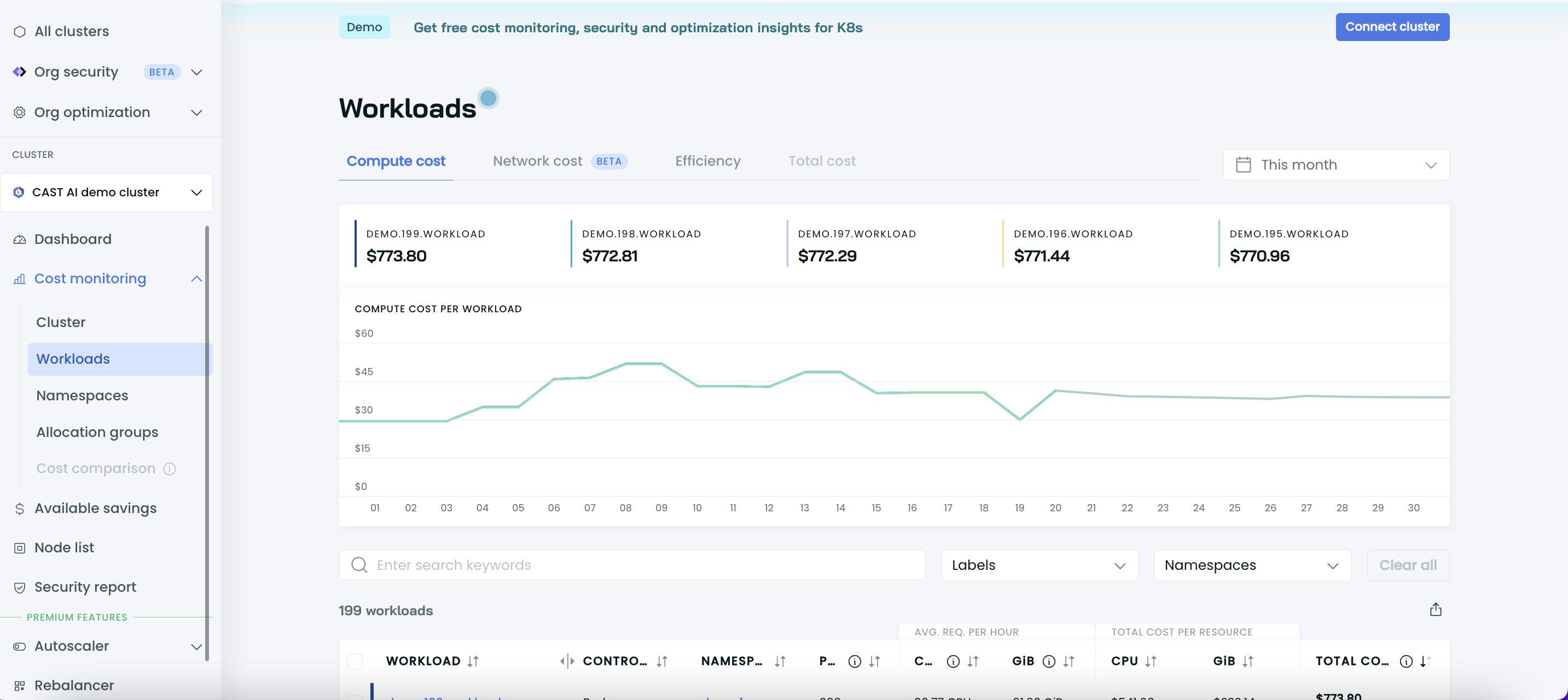

Workloads

The Workloads report allows you to see your cluster costs broken down by workload. You can use this data to analyze compute expenses per workload or for a selected group of workloads, identify inefficiencies, and discover potential savings opportunities.

The Workloads report provides four different views:

- List of workloads with cost details per selected period,

- List of workloads with efficiency details,

- Individual workload cost details with daily history,

- Individual workload efficiency details with daily history.

List of workloads with cost details per selected period

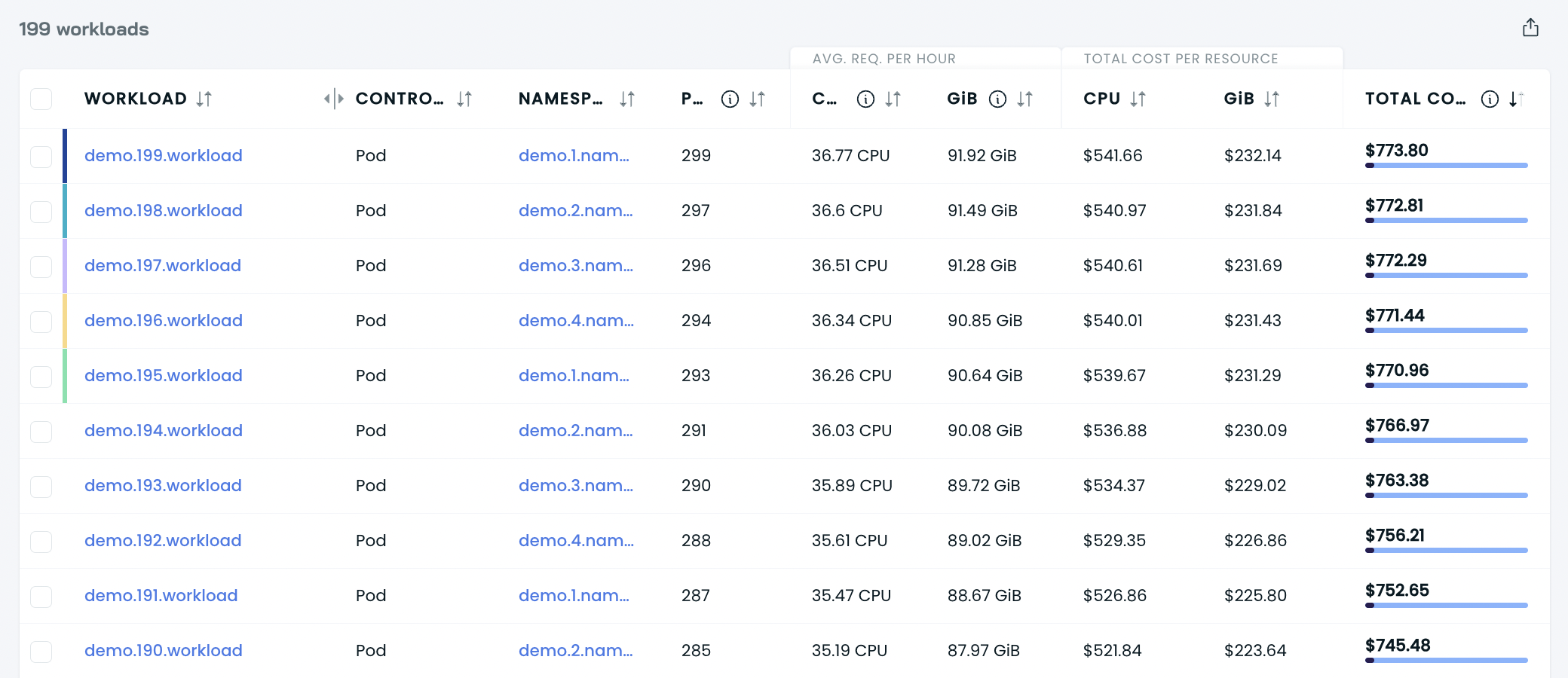

This is a list of all workloads in your cluster, together with their cost details for the period you selected.

Each entry contains the following:

- workload name,

- workload controller type,

- namespace,

- average number of pods,

- average requested CPU per hour,

- average requested RAM per hour,

- total cost of CPU,

- total cost of RAM,

- total cost of compute.

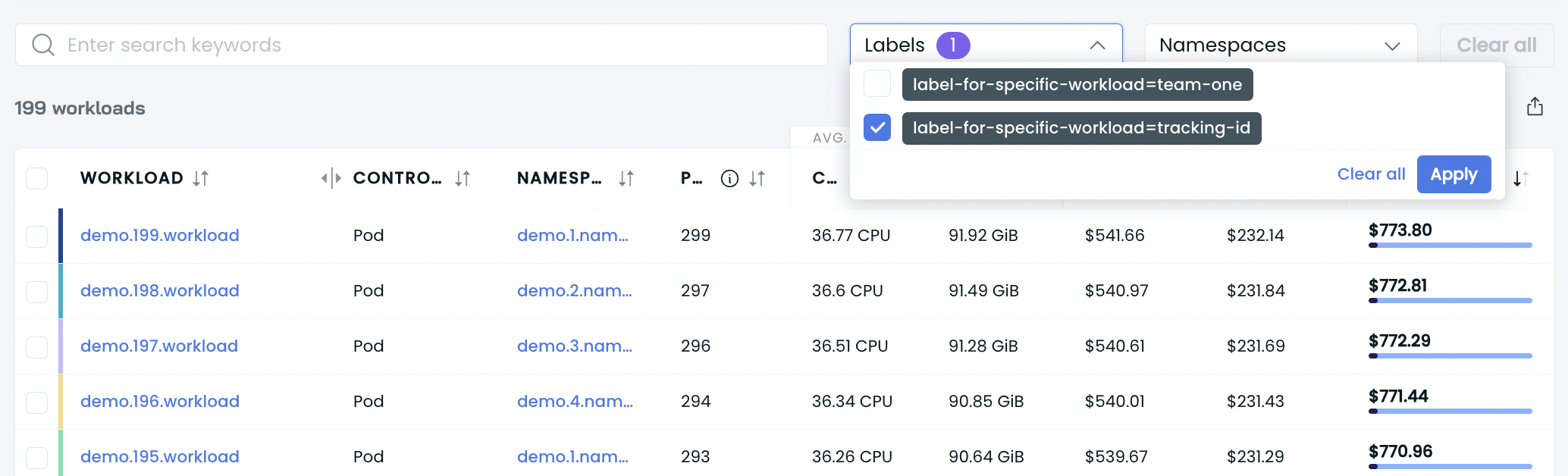

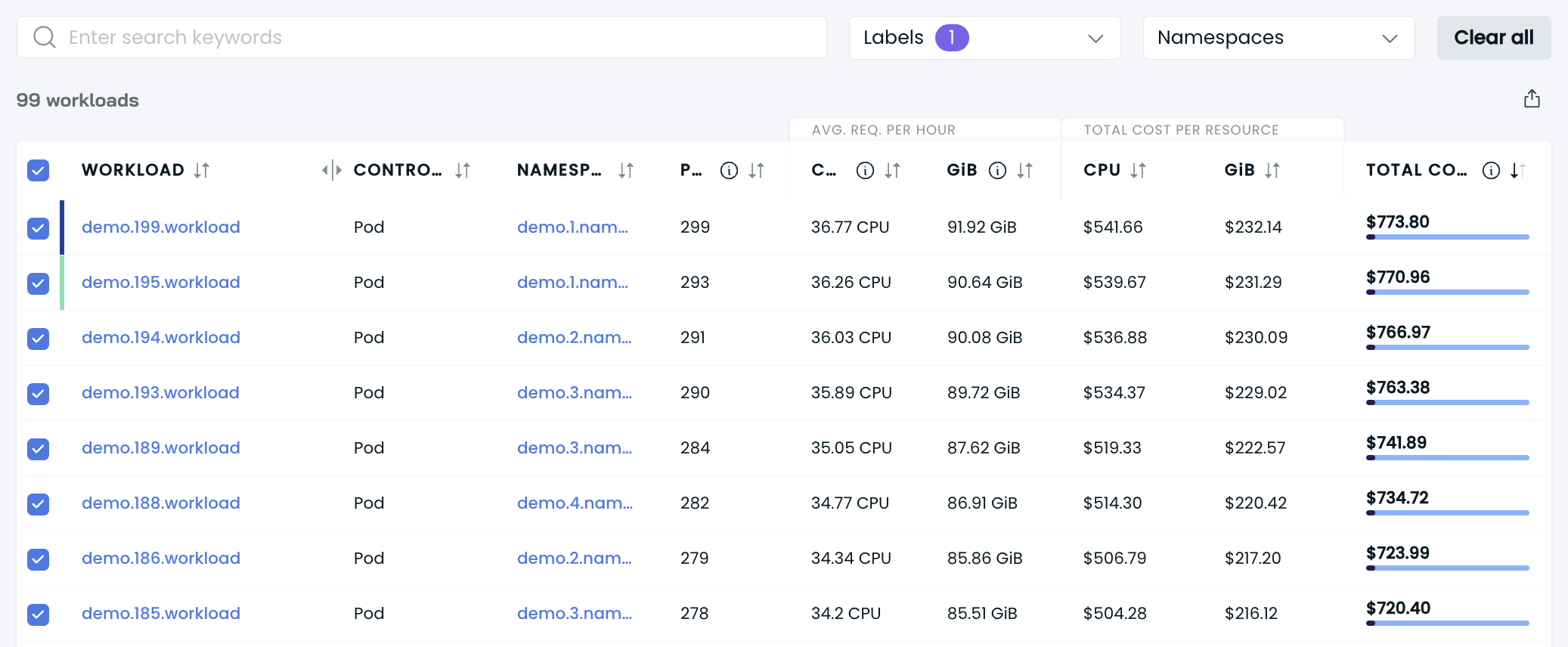

You can filter workloads by their labels and namespaces.

To see the total cost of multiple workloads, select the relevant workloads by ticking the box on the left side of the table. You’ll get the total cost data for this group of workloads at the bottom.

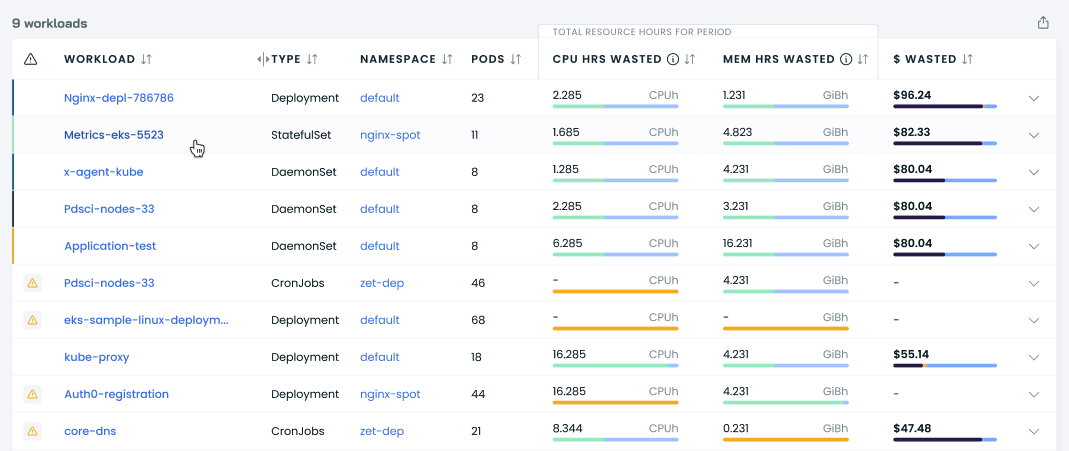

List of workloads with efficiency details

This is a list of all cluster workloads with their efficiency details per selected period.

Each entry contains the following:

- workload name,

- workload controller type,

- namespace,

- CPU hours wasted,

- memory hours wasted,

- $ wasted (the amount of money wasted, in US dollars).

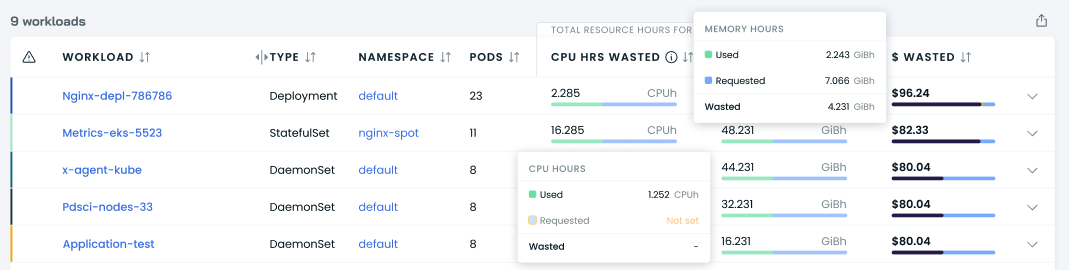

Each entry also contains the requested and used resource hours.

The metric used here is resource hours: the amount of a resource multiplied by the number of hours it was requested or used.

For example, if a workload with requests set to 2 CPUs runs for 48 hours during the selected period, its total requested CPU hours would be 96 CPU hours. If a workload's average CPU usage is 0.5 CPU during those 48 hours, its total usage is 24 CPU hours.
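The resource-hour arithmetic in this example can be written out as a short Python sketch. The function name `resource_hours` is illustrative only, and the sketch assumes a constant request and a single average usage value over the period, exactly as in the example.

```python
def resource_hours(amount: float, hours: float) -> float:
    """Resource hours = amount of a resource (e.g. CPUs) multiplied by hours."""
    return amount * hours


# Values from the example: 2 CPUs requested for 48 hours,
# and an average usage of 0.5 CPU over the same 48 hours.
requested_cpu_hours = resource_hours(2, 48)
used_cpu_hours = resource_hours(0.5, 48)

print(requested_cpu_hours, used_cpu_hours)  # 96 24.0
```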

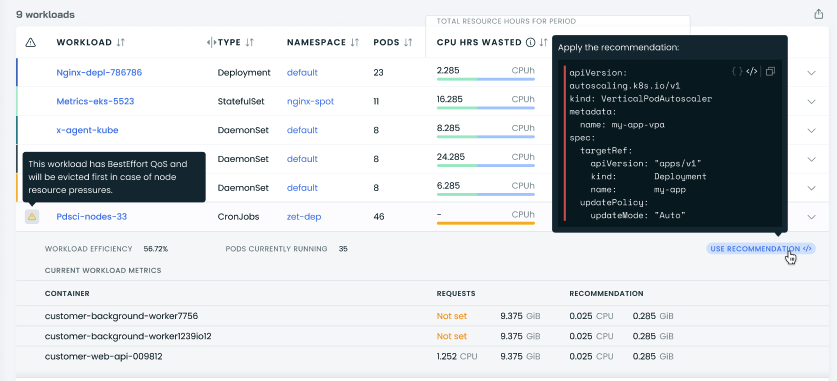

Customers using CAST AI in managed mode can also apply a quick recommendations patch to a workload, which changes the workload's resource requests in line with our recommendations.

How do we calculate the wasted CPUs, RAM, and money?

To calculate the wasted CPU and RAM resources, we subtract the workload's used resource hours from its total requested resource hours.

To follow the example above:

96 CPU hours - 24 CPU hours = 72 CPU hours
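A minimal Python sketch of this calculation follows. The subtraction matches the description above. The dollar amount is an assumption: the sketch prices wasted resource hours at a per-resource-hour rate, and the prices used below are hypothetical placeholders, not CAST AI pricing.

```python
def wasted_hours(requested_hours: float, used_hours: float) -> float:
    """Wasted resource hours = requested resource hours - used resource hours."""
    return requested_hours - used_hours


def wasted_dollars(wasted_cpu_hours: float, wasted_mem_gib_hours: float,
                   cpu_price_per_hour: float, mem_price_per_gib_hour: float) -> float:
    """ASSUMPTION: $ wasted = wasted resource hours times a per-hour price."""
    return (wasted_cpu_hours * cpu_price_per_hour
            + wasted_mem_gib_hours * mem_price_per_gib_hour)


cpu_waste = wasted_hours(96, 24)  # 72 CPU hours, as in the example
# Hypothetical prices: $0.04 per CPU hour and $0.005 per GiB hour; no memory waste here.
print(cpu_waste, round(wasted_dollars(cpu_waste, 0, 0.04, 0.005), 2))
```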

Note: For better recommendations and efficiency information, make sure resource requests are set for the workloads in your cluster.

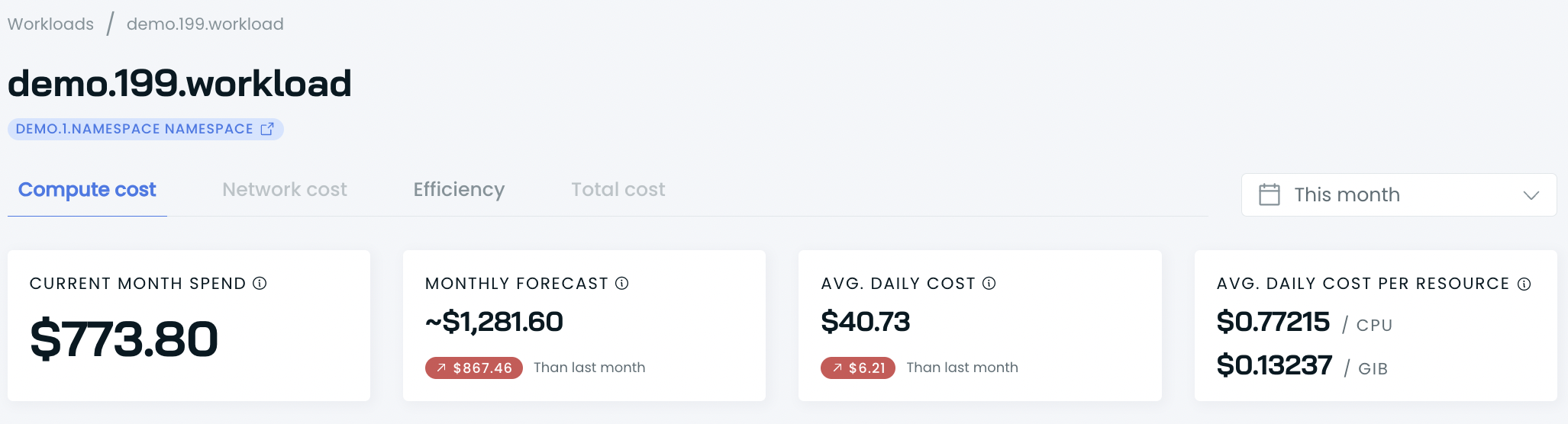

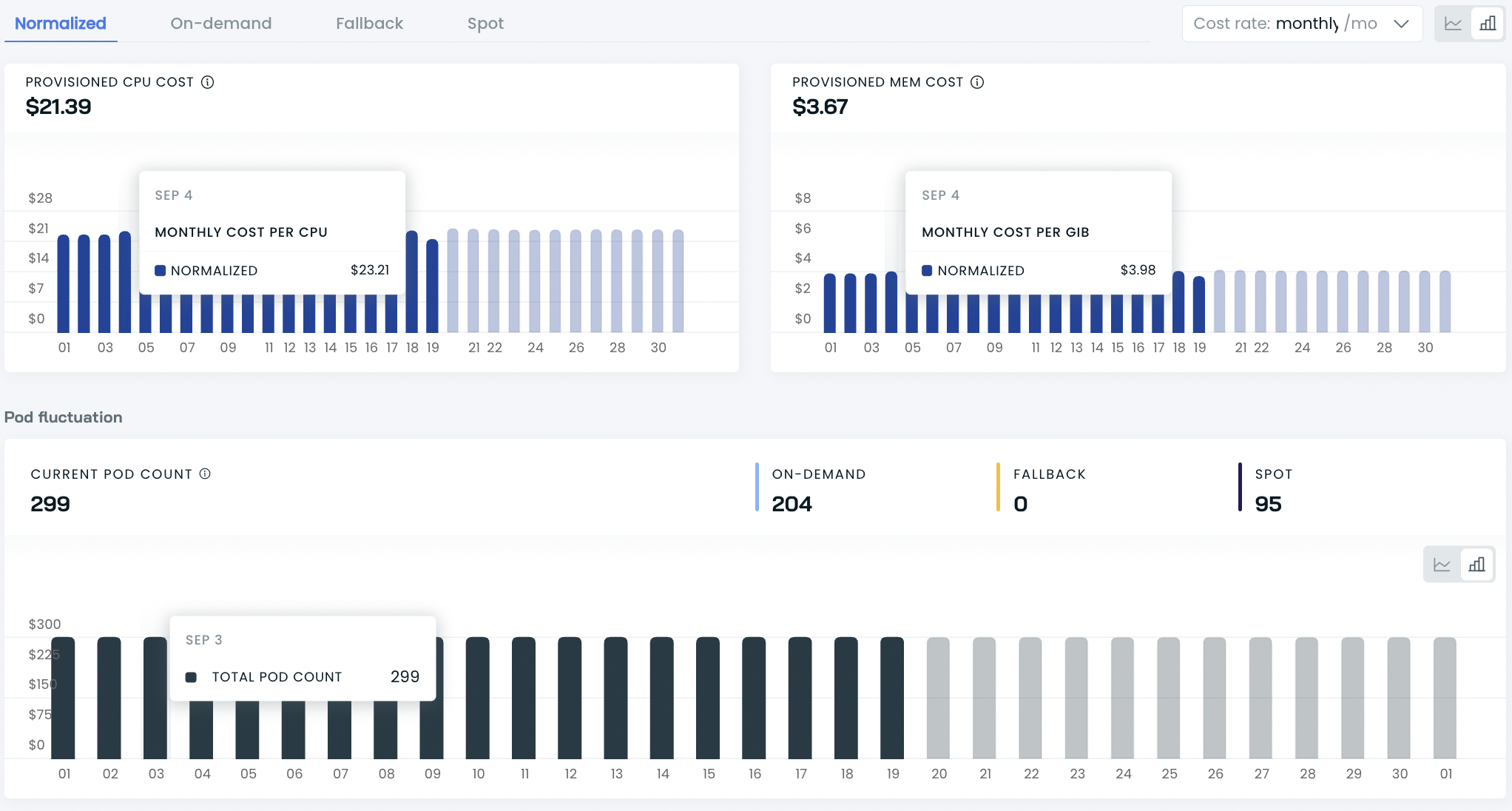

Individual workload cost details with daily history

This report provides cost details and the history of a single workload within the selected period.

You get the following data:

- total spend,

- current month forecast,

- average daily cost,

- average daily cost per resource (CPU and RAM).
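As a rough sketch of how these figures relate, the Python snippet below derives total spend and the daily averages from a list of per-day CPU and RAM costs. The `DailyCost` shape and the `summarize` function are hypothetical, and the current month forecast is not modeled because its method is not described here.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DailyCost:
    cpu: float  # CPU cost for one day, in US dollars
    ram: float  # RAM cost for one day, in US dollars


def summarize(days: List[DailyCost]) -> Dict[str, float]:
    """Total spend plus average daily cost, overall and per resource."""
    if not days:
        raise ValueError("no daily cost data")
    n = len(days)
    total_cpu = sum(d.cpu for d in days)
    total_ram = sum(d.ram for d in days)
    return {
        "total_spend": total_cpu + total_ram,
        "avg_daily_cost": (total_cpu + total_ram) / n,
        "avg_daily_cpu_cost": total_cpu / n,
        "avg_daily_ram_cost": total_ram / n,
    }


print(summarize([DailyCost(cpu=3.0, ram=1.0), DailyCost(cpu=5.0, ram=1.0)]))
```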

Daily compute spend

You can also check the daily chart for compute spending per resource and per resource offering.

Daily cost history table

The daily cost history table shows the average pod count, cost per pod, amount of requested resources, cost per resource, and total cost for each day.
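The derived columns of a table row can be sketched as simple ratios. This Python sketch assumes that cost per pod is the day's total cost divided by the average pod count, and that cost per resource is a resource's daily cost divided by its requested amount; both formulas and the `DayRow` fields are assumptions, not a documented calculation.

```python
from dataclasses import dataclass


@dataclass
class DayRow:
    avg_pods: float           # average pod count for the day
    requested_cpu: float      # requested CPUs
    requested_ram_gib: float  # requested RAM, in GiB
    cpu_cost: float           # daily CPU cost, US dollars
    ram_cost: float           # daily RAM cost, US dollars

    @property
    def total_cost(self) -> float:
        return self.cpu_cost + self.ram_cost

    @property
    def cost_per_pod(self) -> float:
        # ASSUMPTION: total daily cost spread evenly over the average pod count.
        return self.total_cost / self.avg_pods

    @property
    def cost_per_cpu(self) -> float:
        # ASSUMPTION: daily CPU cost per requested CPU.
        return self.cpu_cost / self.requested_cpu

    @property
    def cost_per_ram_gib(self) -> float:
        # ASSUMPTION: daily RAM cost per requested GiB.
        return self.ram_cost / self.requested_ram_gib


row = DayRow(avg_pods=4, requested_cpu=2, requested_ram_gib=8, cpu_cost=6.0, ram_cost=2.0)
print(row.total_cost, row.cost_per_pod, row.cost_per_cpu, row.cost_per_ram_gib)
```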

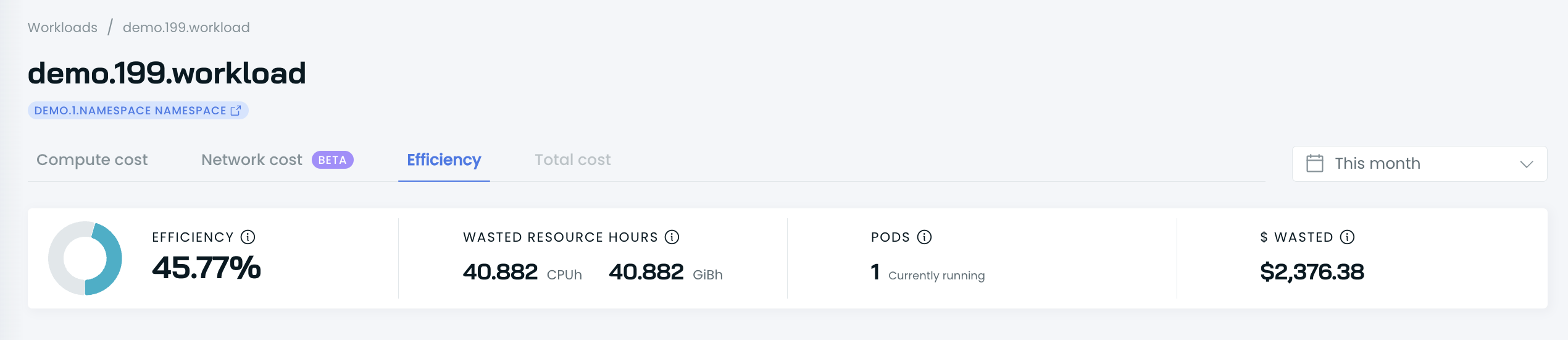

Individual workload efficiency details with daily history

This report provides efficiency details for a single workload, both current and for the selected period.

In the top section, the report provides efficiency details for a workload:

- Efficiency score in %

- Wasted resource hours both for CPU and memory

- $ wasted in US dollars

- Number of currently running pods

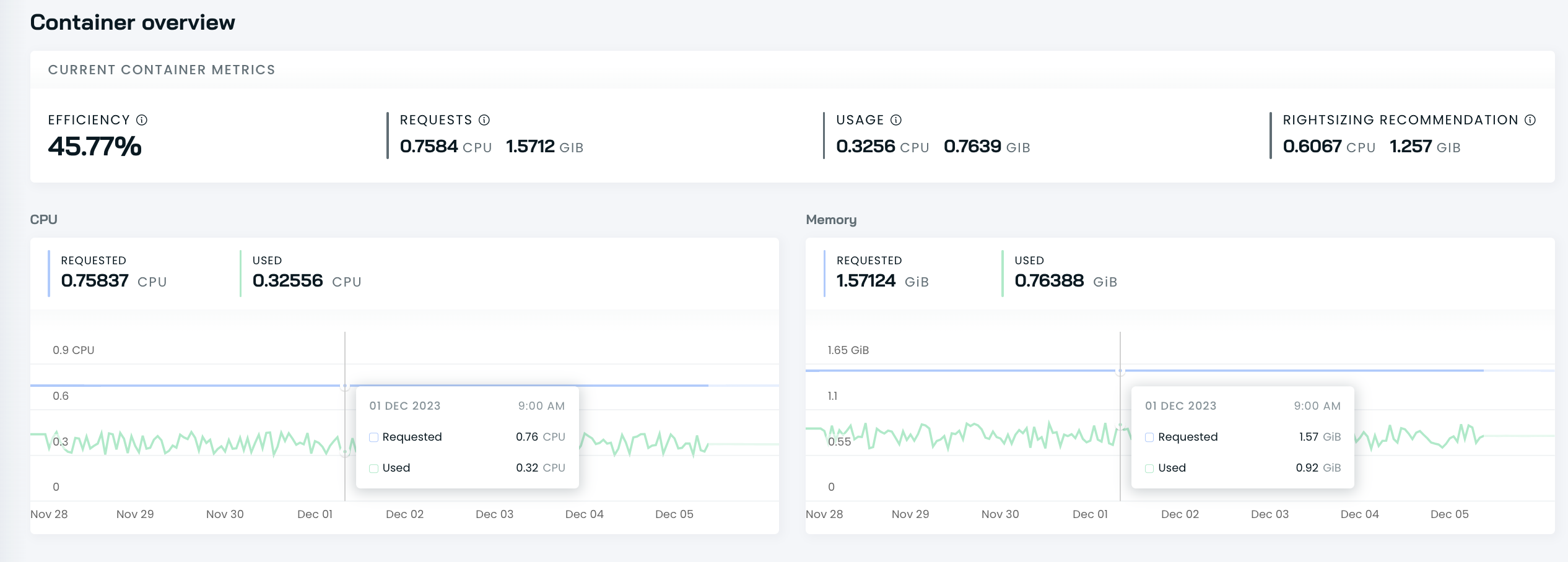

Current workload efficiency

The current efficiency section provides efficiency details per container:

- resource requests,

- resource usage,

- rightsizing recommendation,

- computed overall efficiency.

Computed efficiency is calculated by comparing resource requests against the recommended rightsized resource values. Because CPU is more expensive than memory, it has a larger impact on the efficiency rating.
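The exact efficiency formula is not given here, so the following Python sketch is only a hypothetical illustration of the idea: it compares cost-weighted recommended resources against cost-weighted requested resources. The weights stand in for relative prices, the 8:1 CPU-to-GiB ratio is made up, and capping the score at 100% is an assumption.

```python
def efficiency(req_cpu: float, req_mem_gib: float,
               rec_cpu: float, rec_mem_gib: float,
               cpu_weight: float, mem_weight: float) -> float:
    """HYPOTHETICAL score: weighted recommended resources / weighted requested resources.

    Because CPU carries the larger weight, over-requesting CPU lowers the
    score more than over-requesting the same relative amount of memory.
    """
    requested = req_cpu * cpu_weight + req_mem_gib * mem_weight
    recommended = rec_cpu * cpu_weight + rec_mem_gib * mem_weight
    return min(1.0, recommended / requested)  # ASSUMPTION: capped at 100%


# Requests: 2 CPUs and 4 GiB. Hypothetical weights: 8 per CPU, 1 per GiB.
print(efficiency(2, 4, 1, 4, 8, 1))  # CPU request is double the recommendation
print(efficiency(2, 4, 2, 2, 8, 1))  # memory request is double the recommendation
```

With these weights, over-requesting CPU by 2x yields a lower score (0.6) than over-requesting memory by 2x (0.9), which mirrors the statement that CPU has the larger impact.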

For rightsizing recommendations, the Cost monitoring module analyzes a container's resource usage over the last 5 days and calculates a percentile value: the 95th percentile for CPU and the 99th percentile for RAM.
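The percentile step can be sketched in Python as follows. The percentiles (95th for CPU, 99th for RAM) come from the text; the nearest-rank percentile method, the sampling interval, and the `recommend` helper are assumptions, since the module's exact method is not described here.

```python
import math
from typing import Dict, List


def nearest_rank_percentile(samples: List[float], pct: float) -> float:
    """Smallest sample such that at least pct% of all samples are <= it."""
    if not samples:
        raise ValueError("no usage samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend(cpu_samples: List[float], ram_samples: List[float]) -> Dict[str, float]:
    """Rightsizing values from ~5 days of usage samples (window assumed)."""
    return {
        "cpu": nearest_rank_percentile(cpu_samples, 95),
        "ram": nearest_rank_percentile(ram_samples, 99),
    }


# A single CPU spike among 100 samples does not raise the 95th percentile.
cpu = [0.2] * 99 + [4.0]
ram = [float(x) for x in range(1, 101)]  # GiB
print(recommend(cpu, ram))
```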

Note: Usage metrics are based on metrics-server, which in some rare cases can report incorrect CPU usage data due to a kernel bug. If you experience this issue, consider upgrading metrics-server to v0.7.0 or later.

Workload grouping

Each line item in the workload report corresponds to a distinct workload running within the cluster. Typically, these workloads are represented by top-level Kubernetes objects, such as Deployments, DaemonSets, and StatefulSets.

CAST AI analyzes all pods and their associated controllers and identifies the top-level controller for each pod. It then aggregates all pods under their respective top-level controllers into a single entry in the workload report. This gives a clear and organized representation of cluster workloads.

CAST AI supports the default and well-known Kubernetes controllers. If pods don't have an associated controller, or their controller is not recognized, CAST AI lists these pods as separate entries. This ensures that all pods in the cluster are visible in the report, regardless of their controller status.
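The grouping described above can be sketched in Python using a simplified model of Kubernetes owner references: a map from each object's `(kind, name)` to its controller's `(kind, name)`. The `KNOWN_CONTROLLERS` set, the data shapes, and the function names are assumptions for illustration; CAST AI's actual list of recognized controllers may differ.

```python
from typing import Dict, List, Optional, Tuple

# (kind, name) of a Kubernetes object, e.g. ("Deployment", "web").
Ref = Tuple[str, str]

# ASSUMPTION: the controller kinds this sketch treats as recognized.
KNOWN_CONTROLLERS = {"Deployment", "ReplicaSet", "StatefulSet", "DaemonSet", "Job", "CronJob"}


def top_level_controller(pod_name: str, pod_owner: Optional[Ref],
                         owners: Dict[Ref, Ref]) -> Ref:
    """Walk controller references upward and return the top-level controller."""
    if pod_owner is None or pod_owner[0] not in KNOWN_CONTROLLERS:
        # No controller, or an unrecognized one: the pod becomes its own entry.
        return ("Pod", pod_name)
    current = pod_owner
    # Keep climbing while the current object has a recognized controller above it.
    while current in owners and owners[current][0] in KNOWN_CONTROLLERS:
        current = owners[current]
    return current


def group_pods(pods: List[Tuple[str, Optional[Ref]]],
               owners: Dict[Ref, Ref]) -> Dict[Ref, List[str]]:
    """Aggregate pod names under their top-level controller (one report entry each)."""
    groups: Dict[Ref, List[str]] = {}
    for pod_name, pod_owner in pods:
        key = top_level_controller(pod_name, pod_owner, owners)
        groups.setdefault(key, []).append(pod_name)
    return groups


owners = {("ReplicaSet", "web-5d9f"): ("Deployment", "web")}
pods = [
    ("web-5d9f-abc", ("ReplicaSet", "web-5d9f")),
    ("web-5d9f-xyz", ("ReplicaSet", "web-5d9f")),
    ("debug-shell", None),
]
print(group_pods(pods, owners))
```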

Issues with dynamic pods

When clusters use unsupported controllers or frameworks that dynamically create pods for batch jobs or other operations, the default grouping mechanism does not work. This can lead to a large number of separate entries in the workload report, each corresponding to an individual pod.

These short-lived pods lack historical data and efficiency calculations, which makes it difficult to accurately gauge their performance and cost impact.

CAST AI provides a few options to group pods in such scenarios:

Add a label for name override

The workload name can be overridden by adding the CAST AI-specific label "reports.cast.ai/name" to a pod. If this label is found on a pod, its value is used as the workload name. All pods with the same label value are aggregated under a single item.
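The effect of the override label can be sketched in Python as below. The label key comes from the text; the function names are hypothetical, and using the pod name as the fallback is a simplification standing in for the default controller-based grouping.

```python
from typing import Dict, List, Tuple

NAME_OVERRIDE_LABEL = "reports.cast.ai/name"  # label key from the text


def workload_name(pod_labels: Dict[str, str], default_name: str) -> str:
    """Use the override label's value if present, else the default name."""
    return pod_labels.get(NAME_OVERRIDE_LABEL, default_name)


def aggregate(pods: List[Tuple[str, Dict[str, str]]]) -> Dict[str, List[str]]:
    """Group pod names by workload name; equal label values share one entry."""
    groups: Dict[str, List[str]] = {}
    for pod_name, labels in pods:
        # Without the label, each dynamically created pod is its own entry here.
        groups.setdefault(workload_name(labels, pod_name), []).append(pod_name)
    return groups


pods = [
    ("batch-a1", {NAME_OVERRIDE_LABEL: "nightly-batch"}),
    ("batch-b7", {NAME_OVERRIDE_LABEL: "nightly-batch"}),
    ("adhoc-9", {}),
]
print(aggregate(pods))
```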

Configure grouping rules

If adding an override label is not viable or convenient, another option is to specify conditional rules for grouping pods. Currently, this functionality is available through the API only.

Grouping rules are based on labels assigned to pods. You can define conditions that a pod must meet to be included in a particular group. The group name can be either a static string value or assigned dynamically from a specified label.

Grouping rule schema

```json
{
  "conditions": [
    {
      "label": "string",
      "operator": "enum",
      "values": ["string"]
    }
  ],
  "fixedName": "string",
  "label": "string"
}
```

The conditions array can contain multiple conditions that must be matched before the rule is applied. Each condition consists of the following properties:

- label: Specifies the label to be used for condition evaluation.

- operator: Determines the conditional operator to apply to the label. Possible values are:

  - In: The label's value must match one of the values specified in the values array.

  - NotIn: The label's value must not match any of the values in the values array.

  - Exists: The label exists, regardless of its value.

  - DoesNotExist: The label doesn't exist.

- values: Contains the values to be matched against the label's value. This is used with the In and NotIn operators.
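The four operators can be sketched as a Python condition evaluator. The operator semantics follow the list above; the function names are hypothetical. Two behaviors are assumptions: a pod without the label satisfies NotIn (as with Kubernetes label selectors), and all conditions in the array must match (logical AND).

```python
from typing import Any, Dict, List, Mapping


def condition_matches(condition: Dict[str, Any], labels: Mapping[str, str]) -> bool:
    """Evaluate one grouping-rule condition against a pod's labels."""
    key = condition["label"]
    op = condition["operator"]
    values = condition.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        # ASSUMPTION: a missing label counts as "not in" the values.
        return labels.get(key) not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"unknown operator: {op}")


def all_conditions_match(conditions: List[Dict[str, Any]],
                         labels: Mapping[str, str]) -> bool:
    # ASSUMPTION: every condition in the array must match.
    return all(condition_matches(c, labels) for c in conditions)


pod_labels = {"app": "frontend", "team": "web"}
print(condition_matches({"label": "app", "operator": "In",
                         "values": ["frontend", "backend"]}, pod_labels))
```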

To determine the name of a group, specify either fixedName or label:

- fixedName: This value is used directly as the workload name.

- label: The workload name is assigned dynamically from the value of the specified label on the pod.
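Name resolution for a pod that has already satisfied a rule's conditions can be sketched in Python as follows. The `resolve_group_name` helper is hypothetical. Rejecting rules that set both or neither of fixedName and label is an assumption based on "specify either", and returning `None` when the named label is missing is likewise assumed.

```python
from typing import Any, Dict, Mapping, Optional


def resolve_group_name(rule: Dict[str, Any], labels: Mapping[str, str]) -> Optional[str]:
    """Pick the workload name for a pod whose labels satisfied the rule's conditions."""
    has_fixed = "fixedName" in rule
    has_label = "label" in rule
    if has_fixed == has_label:
        # ASSUMPTION: exactly one of fixedName or label must be set.
        raise ValueError("specify exactly one of fixedName or label")
    if has_fixed:
        return rule["fixedName"]  # static name
    # Dynamic name: the value of the specified label on the pod (None if absent).
    return labels.get(rule["label"])


print(resolve_group_name({"fixedName": "etl-jobs"}, {"app": "spark"}))
print(resolve_group_name({"label": "app"}, {"app": "backend"}))
```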

Example grouping rule

This rule aggregates pods that have the label "app" with the value "frontend" or "backend". Pods labeled "app": "frontend" are grouped under the name "frontend", and pods labeled "app": "backend" under the name "backend".

```json
{
  "clusterId": "00000000-0000-0000-0000-000000000000",
  "config": {
    "rules": [
      {
        "conditions": [
          {
            "label": "app",
            "operator": "In",
            "values": ["frontend", "backend"]
          }
        ],
        "label": "app"
      }
    ]
  }
}
```
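To see what the example rule does, the Python sketch below parses the example request body and applies its rules to a few pods' labels. It implements only the In operator and the name options from the schema. First-match-wins rule ordering is an assumption, and `None` stands for "no rule matched; default grouping applies". The pod labels are made up.

```python
import json
from typing import Any, Dict, List, Optional

# The example request body from above, with its placeholder cluster ID.
REQUEST = json.loads("""
{
  "clusterId": "00000000-0000-0000-0000-000000000000",
  "config": {
    "rules": [
      {
        "conditions": [
          {"label": "app", "operator": "In", "values": ["frontend", "backend"]}
        ],
        "label": "app"
      }
    ]
  }
}
""")


def group_for(labels: Dict[str, str], rules: List[Dict[str, Any]]) -> Optional[str]:
    """Return the group name from the first matching rule, or None."""
    for rule in rules:
        # Only the In operator is handled in this sketch.
        if all(c["operator"] == "In" and labels.get(c["label"]) in c["values"]
               for c in rule["conditions"]):
            if "fixedName" in rule:
                return rule["fixedName"]
            return labels.get(rule["label"])
    return None  # ASSUMPTION: unmatched pods keep the default grouping


rules = REQUEST["config"]["rules"]
for pod_labels in ({"app": "frontend"}, {"app": "backend"}, {"app": "postgres"}):
    print(pod_labels, "->", group_for(pod_labels, rules))
```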