Autoscaler preparation checklist

After you enable Cast AI's automation features, turn on and test the following settings to confirm that the platform's autoscaling engine operates properly.

Further fine-tuning might be necessary for specific use cases.

Goals

- Upscale the cluster during peak hours.

- Binpack or downscale the cluster when excess capacity is no longer required.

- Use Spot Instances to reduce your infrastructure costs, but have the safety of on-demand instances when needed.

Recommended setup

The following section describes the Autoscaling policy setup needed to achieve these goals.

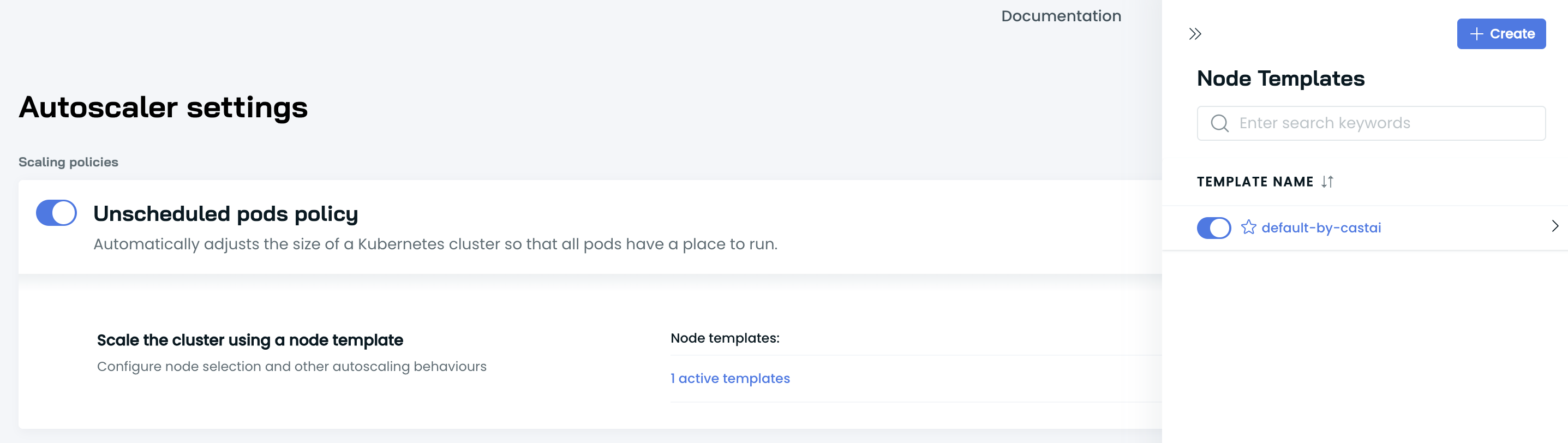

Unscheduled pods policy

To upscale a cluster, Cast AI needs to react to unschedulable pods. You can achieve this by turning on the Unscheduled Pods policy and configuring the Default Node template.

What is an unschedulable pod?

This term refers to a pod stuck in a pending state, meaning that it cannot be scheduled onto a node. Generally, this is because insufficient resources of one type or another prevent scheduling.
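To see whether the cluster currently has pods in this state, a minimal sketch along the following lines may help. The function names are hypothetical helpers introduced here for illustration. The sketch assumes `kubectl` is already pointed at the connected cluster.

```shell
# List pods that are currently Pending, across all namespaces.
# status.phase is a field selector that kubectl supports for pods.
list_pending_pods() {
  kubectl get pods --all-namespaces \
    --field-selector=status.phase=Pending --no-headers
}

# Count Pending pods. kubectl prints "No resources found" to stderr,
# so stderr is discarded and awk reports 0 on empty input.
count_pending_pods() {
  list_pending_pods 2>/dev/null | awk 'END { print NR }'
}
```

Calling `count_pending_pods` prints a single number. To find out why a specific pod is pending, `kubectl describe pod <pod-name> -n <namespace>` shows the scheduler's events for that pod.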

Unscheduled pods policy with Default Node template

Why?

✅ Automatically add the required capacity to the cluster.

✅ Enable Spot Instances to allow Cast AI to handle Spot Instances and their interruptions.

✅ Enable Spot Fallbacks so that Cast AI automatically switches to on-demand capacity when Spot Instances are not available in the cloud environment, and back to Spot Instances when they become available again.

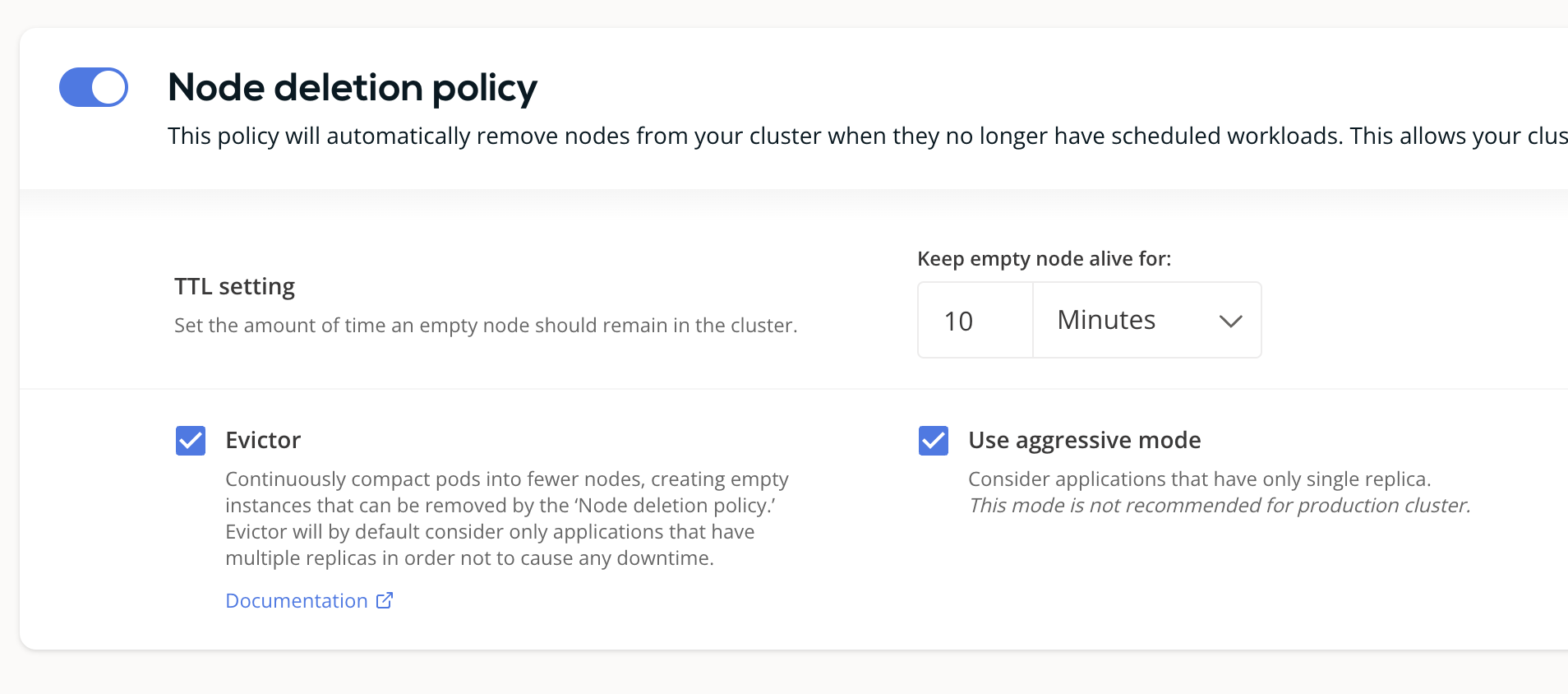

Node deletion policy / Evictor

Cast AI can continuously binpack the cluster and remove any excess capacity. To achieve this goal, we recommend the following initial setup:

Node deletion policy recommended settings

Why?

✅ Ensure that empty nodes are not running in the cluster for longer than the configured duration.

✅ Enable Evictor for higher node resource utilization and less waste. Evictor continuously simulates scenarios in which it tries to eliminate underutilized nodes by checking whether their pods could be scheduled onto the remaining capacity. The simulation respects PodDisruptionBudgets (PDBs) and all other Kubernetes restrictions that your applications may have.

What is Evictor's aggressive mode?

When Evictor runs in aggressive mode, it considers workloads with a single replica as potential targets for binpacking. This might cause some disruption in single-replica workloads.
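Before turning on aggressive mode, it can be useful to know which workloads run with a single replica. The following is a rough sketch that only looks at Deployments; other workload kinds are not covered, and the function name is a hypothetical helper rather than part of any Cast AI tooling.

```shell
# Print "namespace/name" for every Deployment whose spec.replicas is 1.
# Assumption: only Deployments are inspected here.
list_single_replica_deployments() {
  kubectl get deployments --all-namespaces --no-headers \
    -o custom-columns='NAMESPACE:.metadata.namespace,NAME:.metadata.name,REPLICAS:.spec.replicas' \
    | awk '$3 == 1 { print $1 "/" $2 }'
}
```

Any Deployment printed by `list_single_replica_deployments` is one that may see a brief disruption if its only pod is moved during binpacking.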

Testing

After completing the basic setup, we recommend performing a simple upscale/downscale test to verify that the autoscaler functions correctly and that the cluster can scale up and down as needed.

➡️ First, deploy the following spot workload to check if the autoscaler reacts to the need for spot capacity:

    kubectl apply -f https://raw.githubusercontent.com/castai/examples/main/evictor-demo-pods/test_pod_spot.yaml

    # https://github.com/castai/examples/blob/main/evictor-demo-pods/test_pod_spot.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: castai-test-spot
      namespace: castai-agent
      labels:
        app: castai-test-spot
    spec:
      replicas: 10
      selector:
        matchLabels:
          app: castai-test-spot
      template:
        metadata:
          labels:
            app: castai-test-spot
        spec:
          tolerations:
            - key: scheduling.cast.ai/spot
              operator: Exists
          nodeSelector:
            scheduling.cast.ai/spot: "true"
          containers:
            - name: nginx
              image: nginx:latest
              ports:
                - containerPort: 80
              resources:
                requests:
                  cpu: 4

✅ This step ensures that Cast AI has all the relevant access to upscale your cluster automatically.
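The test deployment requests 10 replicas at 4 CPUs each, so 40 CPUs in total. Most of its pods should therefore start out as Pending and move to Running as spot capacity is added. A small sketch for following that progress is shown below. The namespace and label come from the manifest above, while the function name is a hypothetical helper.

```shell
# Summarize the test deployment's pods by phase, e.g. "Pending 7" / "Running 3".
# Namespace (castai-agent) and label (app=castai-test-spot) match the manifest.
test_pods_by_phase() {
  kubectl get pods -n castai-agent -l app=castai-test-spot --no-headers \
    -o custom-columns='PHASE:.status.phase' \
    | awk '{ count[$1]++ } END { for (p in count) print p, count[p] }' \
    | sort
}
```

Re-running `test_pods_by_phase` every minute or so should show the Pending count dropping as new nodes join the cluster.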

➡️ Once the capacity is added, check that your desired DaemonSet pod count matches the node count.

    # Get DaemonSets in all namespaces
    kubectl get ds -A

    # Get Node count
    kubectl get nodes | grep -v NAME | wc -l

➡️ Verify that the deployed pods are Running, then run:

    kubectl scale deployment/castai-test-spot -n castai-agent --replicas=0

✅ Verify that Cast AI eliminates empty nodes in the configured time interval.
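The DaemonSet comparison and the node-removal check can both be scripted. The sketch below uses hypothetical helper names and makes two assumptions: that every DaemonSet is expected to run on every node (DaemonSets with node selectors or unmatched taints will legitimately differ), and that spot nodes carry the `scheduling.cast.ai/spot=true` label targeted by the test deployment's nodeSelector.

```shell
# Count all nodes in the cluster.
node_count() {
  kubectl get nodes --no-headers 2>/dev/null | awk 'END { print NR }'
}

# Print DaemonSets whose DESIRED count differs from the node count.
# With --all-namespaces the columns are NAMESPACE NAME DESIRED ...,
# so DESIRED is the third field.
daemonset_mismatches() {
  local nodes
  nodes="$(node_count)"
  kubectl get daemonsets --all-namespaces --no-headers 2>/dev/null \
    | awk -v n="$nodes" '$3 != n { print $1 "/" $2 ": desired " $3 ", nodes " n }'
}

# Count nodes carrying the spot label used by the test deployment.
spot_node_count() {
  kubectl get nodes -l scheduling.cast.ai/spot=true --no-headers 2>/dev/null \
    | awk 'END { print NR }'
}
```

After scaling the test deployment to zero, `spot_node_count` should fall back toward its pre-test value once the configured empty-node interval has passed.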

Troubleshooting

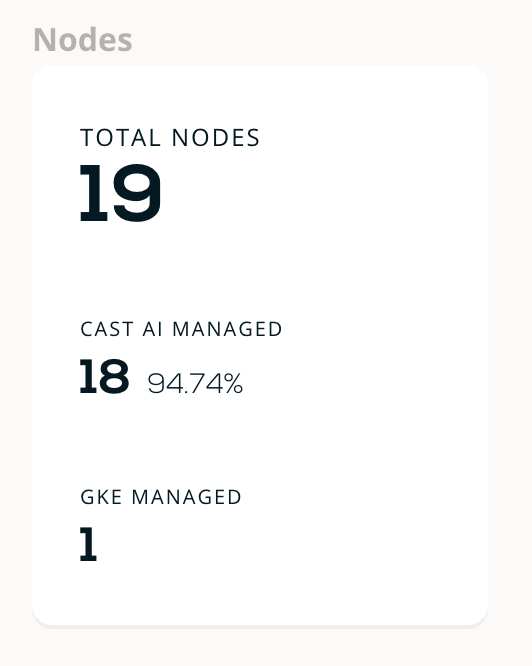

Partially managed by Cast AI

One node not managed by Cast AI

- In some situations, when you connect a cluster, it may not be immediately fully managed by Cast AI. This means that some workloads still run on existing legacy node pools/Autoscaling groups.

- We recommend adding autoscaling.cast.ai/removal-disabled="true" on such node pools/Autoscaling groups so that Cast AI can exclude such nodes from the Evictor & Rebalancing features.
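To check which nodes currently carry this setting, something like the following may work. It assumes the key is applied as a Kubernetes node label, which this page does not state explicitly. Both function names are hypothetical helpers.

```shell
# List node names that carry the removal-disabled key.
# Assumption: the key is set as a node label, not an annotation.
list_removal_disabled_nodes() {
  kubectl get nodes -l autoscaling.cast.ai/removal-disabled=true --no-headers \
    -o custom-columns='NAME:.metadata.name'
}

# Apply the key to one node by name (same labeling assumption as above).
disable_removal_for_node() {
  kubectl label node "$1" autoscaling.cast.ai/removal-disabled=true
}
```

A label applied to a single node does not carry over to replacement nodes, so setting it at the node pool or Autoscaling group level, as recommended above, remains the more durable option.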
