AWS PrivateLink

Connecting to Cast AI through AWS PrivateLink

Cast AI PrivateLink enables EKS clusters without internet access to securely communicate with Cast AI using AWS PrivateLink technology. This solution is designed for clusters where nodes cannot reach the public internet through NAT gateways or similar methods.

Reference Implementation: This guide is based on the Cast AI PrivateLink AWS repository, which contains the latest Terraform examples, implementation details, and updates. Refer to the repository for the most current information and code samples.

Prerequisites

- An EKS cluster in a VPC with private subnets

- Subnets tagged with cast.ai/routable = true to indicate they can communicate with Cast AI

- kubectl access to your cluster (either direct or through a bastion host)

- A private AWS ECR for hosting Cast AI container images

- Terraform installed (if using the Infrastructure as Code (IaC) approach)

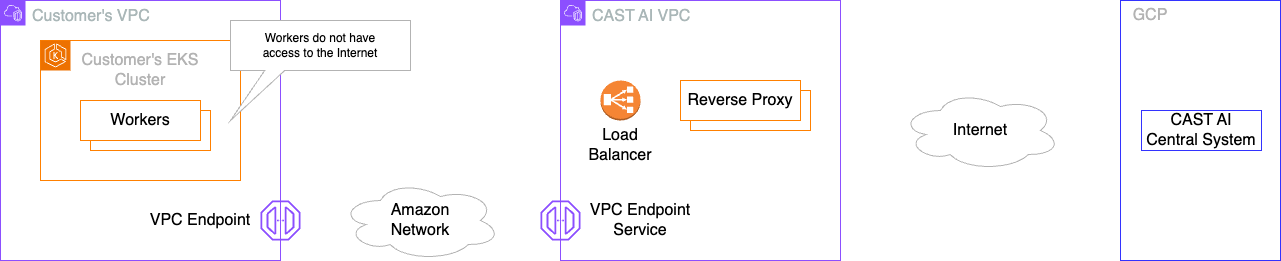

Architecture

Cast AI PrivateLink uses VPC endpoints to establish private connectivity between your EKS cluster and the Cast AI platform. This connection allows Cast AI agents to communicate with the central system without traversing the public internet.

Setup Process

Step 1: Tag your subnets

Add the cast.ai/routable tag to all subnets where your EKS cluster nodes will run:

    aws ec2 create-tags \
      --resources subnet-xxxxx \
      --tags Key=cast.ai/routable,Value=true

This tag informs Cast AI that these subnets have connectivity to the Cast AI platform (through the VPC endpoints you'll create in the next step).

For more information on tagging AWS resources, see Tag your Amazon EC2 resources in the AWS documentation.
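When the cluster spans several subnets, the tagging command can be wrapped in a small helper and the result checked afterwards. The following is a minimal sketch, not part of the Cast AI tooling: the function names `tag_routable_subnets` and `list_routable_subnets` and the `AWS_CMD` variable are hypothetical, and the subnet IDs are placeholders. Setting `AWS_CMD="echo aws"` prints each command instead of running it.

```shell
# AWS_CMD is a hypothetical override: leave it unset to call the real AWS CLI,
# or set AWS_CMD="echo aws" to print each command without running it.
AWS_CMD="${AWS_CMD:-aws}"

# Apply the cast.ai/routable=true tag to every subnet ID passed as an argument.
tag_routable_subnets() {
  local subnet_id
  for subnet_id in "$@"; do
    $AWS_CMD ec2 create-tags \
      --resources "$subnet_id" \
      --tags Key=cast.ai/routable,Value=true
  done
}

# Print the IDs of subnets that already carry the tag, to confirm the result.
list_routable_subnets() {
  $AWS_CMD ec2 describe-subnets \
    --filters "Name=tag:cast.ai/routable,Values=true" \
    --query "Subnets[].SubnetId" \
    --output text
}

# Example usage (placeholder subnet IDs):
#   tag_routable_subnets subnet-xxxxx subnet-yyyyy
#   list_routable_subnets
```

Comparing the output of `list_routable_subnets` with the subnets used by your node groups is a quick way to spot a subnet that was missed.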

Step 2: Create VPC endpoints

Create VPC endpoints in your VPC to enable private access to Cast AI services. The required endpoints vary by region.

Choose your region and create the corresponding VPC endpoints.

US East (N. Virginia) - us-east-1

| Endpoint Type | Service Name |
|---|---|
| REST API | com.amazonaws.vpce.us-east-1.vpce-svc-0f648001d494b9a46 |
| gRPC for Pod Pinner | com.amazonaws.vpce.us-east-1.vpce-svc-05e9e206a737781a7 |
| API via gRPC | com.amazonaws.vpce.us-east-1.vpce-svc-00d55140e30124b2f |
| Downloading artifacts | com.amazonaws.vpce.us-east-1.vpce-svc-0b611f59ee5494cc3 |

US East (Ohio) - us-east-2

| Endpoint Type | Service Name |
|---|---|
| REST API | com.amazonaws.vpce.us-east-2.vpce-svc-0504dcd21f12037fe |
| gRPC for Pod Pinner | com.amazonaws.vpce.us-east-2.vpce-svc-0681d838ee3b5e496 |
| API via gRPC | com.amazonaws.vpce.us-east-2.vpce-svc-0678ed50f02956c7f |
| Downloading artifacts | com.amazonaws.vpce.us-east-2.vpce-svc-0d1fad36f0230d84c |

Asia Pacific (Mumbai) - ap-south-1

| Endpoint Type | Service Name |
|---|---|
| REST API | com.amazonaws.vpce.ap-south-1.vpce-svc-07c813d8840493bcf |
| gRPC for Pod Pinner | com.amazonaws.vpce.ap-south-1.vpce-svc-028ad7f3e7879c47c |
| API via gRPC | com.amazonaws.vpce.ap-south-1.vpce-svc-0dd30c956893d640e |
| Downloading artifacts | com.amazonaws.vpce.ap-south-1.vpce-svc-0c4777e1cc92bf8a8 |

Asia Pacific (Hyderabad) - ap-south-2

| Endpoint Type | Service Name |
|---|---|
| REST API | com.amazonaws.vpce.ap-south-2.vpce-svc-0a2a5307bb44fab88 |
| gRPC for Pod Pinner | com.amazonaws.vpce.ap-south-2.vpce-svc-00d1d87c025531c96 |
| API via gRPC | com.amazonaws.vpce.ap-south-2.vpce-svc-009c9d91819071294 |
| Downloading artifacts | com.amazonaws.vpce.ap-south-2.vpce-svc-05b723b65a7f5a0f9 |

Note: If your region is not listed, contact Cast AI support to confirm availability and obtain the VPC endpoint service names.

Using Terraform

Refer to the Cast AI PrivateLink AWS repository for Terraform examples that automate VPC endpoint creation.

Using AWS CLI

Create each VPC endpoint using the AWS CLI:

    aws ec2 create-vpc-endpoint \
      --vpc-id vpc-xxxxx \
      --vpc-endpoint-type Interface \
      --service-name com.amazonaws.vpce.us-east-1.vpce-svc-0f648001d494b9a46 \
      --subnet-ids subnet-xxxxx subnet-yyyyy \
      --security-group-ids sg-xxxxx

Repeat this command for each VPC endpoint service listed for your region.

For detailed information on creating VPC endpoints, see Create an interface endpoint in the AWS documentation.
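Because the same command has to be run once per service, a loop keeps the four calls consistent. The sketch below assumes us-east-1 and copies its service names from the table above. The function names, the `CASTAI_SERVICES_US_EAST_1` variable, and the `AWS_CMD` dry-run override are hypothetical, and the VPC, subnet, and security group IDs are placeholders.

```shell
# AWS_CMD is a hypothetical override; set AWS_CMD="echo aws" for a dry run.
AWS_CMD="${AWS_CMD:-aws}"

# Service names copied from the us-east-1 table in this guide.
CASTAI_SERVICES_US_EAST_1="
com.amazonaws.vpce.us-east-1.vpce-svc-0f648001d494b9a46
com.amazonaws.vpce.us-east-1.vpce-svc-05e9e206a737781a7
com.amazonaws.vpce.us-east-1.vpce-svc-00d55140e30124b2f
com.amazonaws.vpce.us-east-1.vpce-svc-0b611f59ee5494cc3
"

# Create one interface endpoint per service name.
# Usage: create_castai_endpoints VPC_ID SECURITY_GROUP_ID "SUBNET_ID ..." "SERVICE_NAME ..."
create_castai_endpoints() {
  local vpc_id="$1" security_group_id="$2" subnet_ids="$3" service_names="$4"
  local service_name
  # Word splitting on $service_names and $subnet_ids is intentional.
  for service_name in $service_names; do
    $AWS_CMD ec2 create-vpc-endpoint \
      --vpc-id "$vpc_id" \
      --vpc-endpoint-type Interface \
      --service-name "$service_name" \
      --subnet-ids $subnet_ids \
      --security-group-ids "$security_group_id"
  done
}

# List the endpoints in a VPC together with their state.
# Usage: list_vpc_endpoints VPC_ID
list_vpc_endpoints() {
  $AWS_CMD ec2 describe-vpc-endpoints \
    --filters "Name=vpc-id,Values=$1" \
    --query "VpcEndpoints[].[ServiceName,State]" \
    --output table
}

# Example usage (placeholder IDs):
#   create_castai_endpoints vpc-xxxxx sg-xxxxx "subnet-xxxxx subnet-yyyyy" "$CASTAI_SERVICES_US_EAST_1"
#   list_vpc_endpoints vpc-xxxxx
```

For another region, pass that region's four service names from the tables above instead of the us-east-1 list.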

Step 3: Set up a private container registry

Since your cluster cannot access public container registries, copy all required Cast AI images to your private AWS ECR:

- Create a private ECR repository accessible from your cluster

- Copy Cast AI images from public repositories to your private ECR

- Ensure your EKS nodes have the necessary IAM permissions to pull images from your private ECR

Note: Cast AI is developing a script to automate the image copying process. Contact support for the latest tooling.

For guidance on setting up ECR and configuring permissions, see Getting started with Amazon ECR in the AWS documentation.
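Until the official script is available, the copy can be done with standard Docker and AWS CLI commands from a machine that can reach both the public registry and your ECR. This is a rough sketch under those assumptions: the function names and the `AWS_CMD` and `DOCKER_CMD` dry-run overrides are hypothetical, and the source image reference, registry host, repository name, and tag are placeholders you must supply yourself.

```shell
# AWS_CMD and DOCKER_CMD are hypothetical overrides for a dry run,
# e.g. AWS_CMD="echo aws" DOCKER_CMD="echo docker".
AWS_CMD="${AWS_CMD:-aws}"
DOCKER_CMD="${DOCKER_CMD:-docker}"

# Create a private ECR repository (fails if the repository already exists).
# Usage: create_ecr_repository REGION REPOSITORY
create_ecr_repository() {
  $AWS_CMD ecr create-repository --region "$1" --repository-name "$2"
}

# Log Docker in to the private ECR registry.
# Usage: ecr_login REGION REGISTRY
#   REGISTRY has the form <account-id>.dkr.ecr.<region>.amazonaws.com
ecr_login() {
  $AWS_CMD ecr get-login-password --region "$1" |
    $DOCKER_CMD login --username AWS --password-stdin "$2"
}

# Copy one image from a public registry into the private ECR.
# Usage: mirror_image SOURCE_IMAGE REGISTRY REPOSITORY TAG
mirror_image() {
  local source_image="$1"
  local target_image="$2/$3:$4"
  $DOCKER_CMD pull "$source_image" &&
    $DOCKER_CMD tag "$source_image" "$target_image" &&
    $DOCKER_CMD push "$target_image"
}

# Example usage (all values are placeholders):
#   create_ecr_repository <region> castai-agent
#   ecr_login <region> <account-id>.dkr.ecr.<region>.amazonaws.com
#   mirror_image <public-image>:<version> <account-id>.dkr.ecr.<region>.amazonaws.com castai-agent <version>
```

Keeping the original version as the tag in ECR makes it easier to match what runs in the cluster against Cast AI's release versions.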

Step 4: Connect your cluster

Generate the onboarding script from the Cast AI Console and run it to deploy the Cast AI agent:

- Navigate to the Cast AI Console and start the cluster connection process

- Generate and run the onboarding script as usual

- After the agent is deployed, update the deployment to use images from your private ECR:

    kubectl set image deployment/castai-agent \
      castai-agent=<your-ecr-url>/castai-agent:<version> \
      -n castai-agent

Repeat this step for all Cast AI components deployed in your cluster.
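Since the image update has to be repeated for every component, it may help to wrap it in a function that also waits for the rollout to finish. The sketch below is illustrative only: `use_private_image` and the `KUBECTL_CMD` dry-run override are hypothetical names, and the deployment and container names of components other than castai-agent depend on what is installed in your cluster.

```shell
# KUBECTL_CMD is a hypothetical override; set KUBECTL_CMD="echo kubectl" for a dry run.
KUBECTL_CMD="${KUBECTL_CMD:-kubectl}"

# Point one container of a deployment at an image in the private ECR,
# then wait until the new pods have rolled out.
# Usage: use_private_image NAMESPACE DEPLOYMENT CONTAINER IMAGE
use_private_image() {
  local namespace="$1" deployment="$2" container="$3" image="$4"
  $KUBECTL_CMD set image "deployment/$deployment" "$container=$image" -n "$namespace" &&
    $KUBECTL_CMD rollout status "deployment/$deployment" -n "$namespace"
}

# Example usage (placeholders in angle brackets):
#   use_private_image castai-agent castai-agent castai-agent "<your-ecr-url>/castai-agent:<version>"
```

If `rollout status` does not complete, `kubectl describe pod` on the new pod usually shows whether the image pull from ECR is the cause.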

Maintenance

When upgrading Cast AI agents:

- Pull the new agent images from Cast AI's public registry

- Push them to your private ECR

- Update the deployments in your cluster to use the new image versions

Since Cast AI cannot directly access your private ECR, you must manage agent upgrades manually.
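After a manual upgrade, it can be useful to confirm that no deployment still points at an image outside your private ECR. The following sketch lists each deployment's container images and filters out those that already come from the given registry. The function names and the `KUBECTL_CMD` override are hypothetical, the registry host is a placeholder, and init containers are not checked.

```shell
# KUBECTL_CMD is a hypothetical override, used here only so the command can be swapped out.
KUBECTL_CMD="${KUBECTL_CMD:-kubectl}"

# Print one line per deployment: "<name><TAB><image> [<image> ...]".
# Usage: list_deployment_images NAMESPACE
list_deployment_images() {
  $KUBECTL_CMD get deployments -n "$1" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.template.spec.containers[*].image}{"\n"}{end}'
}

# Read the lines above on stdin and print "<deployment> <image>" for every
# image that does not start with "<REGISTRY>/".
# Usage: list_deployment_images NAMESPACE | find_non_private_images REGISTRY
find_non_private_images() {
  awk -v registry="$1" -F '\t' '{
    n = split($2, images, " ")
    for (i = 1; i <= n; i++)
      if (index(images[i], registry "/") != 1) print $1, images[i]
  }'
}

# Example usage (placeholder registry host):
#   list_deployment_images castai-agent |
#     find_non_private_images <account-id>.dkr.ecr.<region>.amazonaws.com
```

Empty output means every listed container image is served from the private registry.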

Additional Resources

- Cast AI PrivateLink AWS repository – Terraform examples and additional guidance

- AWS PrivateLink documentation
