Business Continuity

Fallback options in case CAST AI is not operational

All cloud providers can occasionally go down, and SaaS companies using their services could become temporarily non-operational.

This article outlines how to minimize the operational impact on applications running in your Kubernetes cluster if CAST AI can't provide services.

Risks

CAST AI manages Kubernetes cluster capacity automatically. Businesses rely on CAST AI to add the necessary capacity at critical moments: when scaling up, handling peaks, or riding out spot droughts.

Kubernetes cluster is not cost-optimized

If CAST AI becomes unavailable during scale-down or quiet periods, it poses minimal to no risk to workload availability. Only the cluster's cost efficiency is affected.

Application pods can't start due to insufficient capacity

Workload Pods stay Pending, usually after HPA or KEDA increases the number of Pod replicas. CAST AI is normally expected to add new Kubernetes Nodes within 60-120 seconds so that the Kubernetes scheduler can place all Pending Pods.

Detection

We recommend using an OpenMetrics monitoring solution such as Prometheus to collect metrics from your Kubernetes cluster and to scrape complementary metrics from CAST AI SaaS. It is also useful to set up notifications, for example, using Grafana Alerts.

Pending Pod age

You can use your own metrics from the Kubernetes API about Pending Pods' age.

It is recommended to set alerts if the pending period is 15 minutes or more. However, such notifications can add to alert noise, as misconfiguration on the workload manifest will leave the Pod in the Pending phase indefinitely.

kube_pod_status_phase{phase="Pending"}

Action: This alert should trigger further investigation, because it does not indicate whether the cause is an application misconfiguration or another issue.
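As a rough illustration of the 15-minute rule above, the following sketch filters Pod objects for those that have been Pending too long. It assumes Pods are available as dictionaries in the shape of Kubernetes API Pod objects (for example, parsed from `kubectl get pods -A -o json`) and approximates Pod age from `metadata.creationTimestamp`. The function names and sample data are hypothetical and are not part of CAST AI or Kubernetes.

```python
from datetime import datetime, timedelta, timezone

PENDING_THRESHOLD = timedelta(minutes=15)  # alert threshold suggested above


def parse_k8s_time(value):
    """Parse a UTC timestamp such as '2024-01-01T12:00:00Z'."""
    parsed = datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ")
    return parsed.replace(tzinfo=timezone.utc)


def pods_pending_too_long(pods, now, threshold=PENDING_THRESHOLD):
    """Return 'namespace/name' for Pods that have been Pending for at least `threshold`."""
    stale = []
    for pod in pods:
        if pod["status"].get("phase") != "Pending":
            continue
        created = parse_k8s_time(pod["metadata"]["creationTimestamp"])
        if now - created >= threshold:
            meta = pod["metadata"]
            stale.append(f'{meta["namespace"]}/{meta["name"]}')
    return stale


# Hypothetical sample data in the shape of Kubernetes Pod objects.
sample_pods = [
    {"metadata": {"namespace": "default", "name": "api",
                  "creationTimestamp": "2024-01-01T12:00:00Z"},
     "status": {"phase": "Pending"}},
    {"metadata": {"namespace": "default", "name": "worker",
                  "creationTimestamp": "2024-01-01T12:20:00Z"},
     "status": {"phase": "Pending"}},
    {"metadata": {"namespace": "default", "name": "db",
                  "creationTimestamp": "2024-01-01T11:00:00Z"},
     "status": {"phase": "Running"}},
]
now = datetime(2024, 1, 1, 12, 30, tzinfo=timezone.utc)
print(pods_pending_too_long(sample_pods, now))
```

Only the Pod that has been Pending for 30 minutes is reported; the one created 10 minutes earlier is still inside the threshold. As noted above, a hit still needs manual triage, since a misconfigured manifest produces the same signal.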

K8s Cluster is not communicating with CAST AI

CAST AI's operations rely on the platform's components running in the subject Kubernetes cluster and network communication with https://api.cast.ai.

The castai_autoscaler_agent_snapshots_received_total metric indicates that the CAST AI agent can deliver Kubernetes API changes ("snapshots") to CAST AI SaaS and get a delivery confirmation. If this metric drops to 0, either the CAST AI agent is not running or something else is preventing snapshot delivery.

Action: Check whether the CAST AI agent is running in the castai-agent namespace. If it is not, describe the Pod and check its logs. The cause may be a network or firewall issue, or another reason why CAST AI is unreachable over the internet.

CAST AI receives data from the agent, but it doesn't process the snapshot

If castai_autoscaler_agent_snapshots_received_total is not 0 but castai_autoscaler_agent_snapshots_processed_total is 0, the issue is on the CAST AI side.

The CAST AI team should already be aware of this, but you can raise an incident in the CAST AI console or report it on Slack.
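The two metric checks above can be combined into one small decision function. This is a minimal sketch: how the two readings are obtained (for example, as a recent rate from Prometheus) depends on your monitoring setup and is left out, and the function name and return strings are hypothetical.

```python
def diagnose_snapshot_flow(received, processed):
    """Classify snapshot delivery from two recent metric readings.

    received  -- recent reading of castai_autoscaler_agent_snapshots_received_total
    processed -- recent reading of castai_autoscaler_agent_snapshots_processed_total
    """
    if received == 0:
        # Nothing reaches CAST AI: agent down, or network/firewall problem.
        return "agent: snapshots are not being delivered"
    if processed == 0:
        # Snapshots arrive but are not processed: problem on the CAST AI side.
        return "castai: snapshots are delivered but not processed"
    return "ok: snapshots are delivered and processed"


for reading in [(0, 0), (12, 0), (12, 12)]:
    print(reading, "->", diagnose_snapshot_flow(*reading))
```

The order of the checks matters: when nothing is received, the processed value carries no information, so the agent and network are checked first.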

Cluster Status in CAST AI console is not Healthy

CAST AI requires the agent and the cluster controller to always run to add new Nodes. There might be various issues, from cloud credentials no longer being valid to invalidated API tokens, etc.

Use the Notification Webhook functionality to get notified about a failed or warning cluster status.

Fallback scaling

Adding the necessary capacity to the Kubernetes cluster so that all critical applications are running (not Pending) is the top priority. This emergency capacity can be added manually or triggered automatically after a time delay.

Manual

In GKE and AKS, the cluster autoscaler is built into the node pool. During CAST AI onboarding, it is usually disabled on the native node pool. In an emergency, you can re-enable it from the GCP or Azure portal.

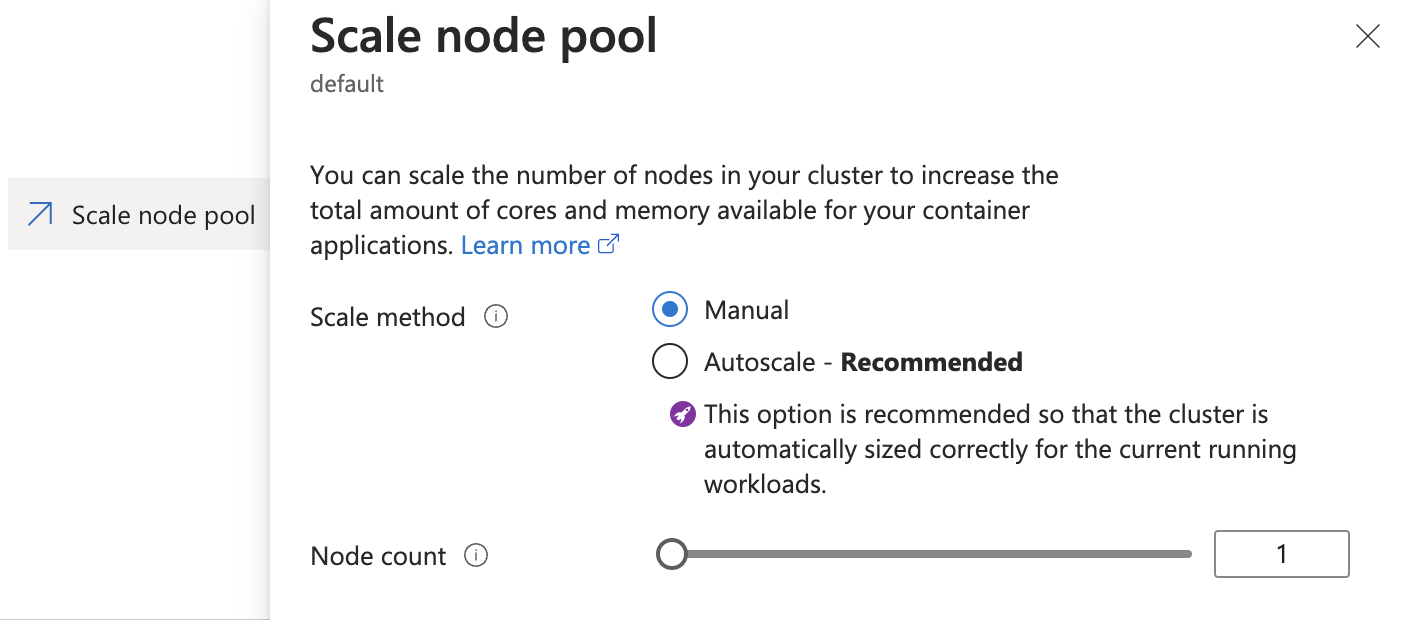

AKS node pool "Scale" configuration set to "Autoscale".

To enable the cluster autoscaler on the default node pool using az cli, use the following command:

az aks nodepool update --enable-cluster-autoscaler -g MyResourceGroup -n default --cluster-name MyCluster

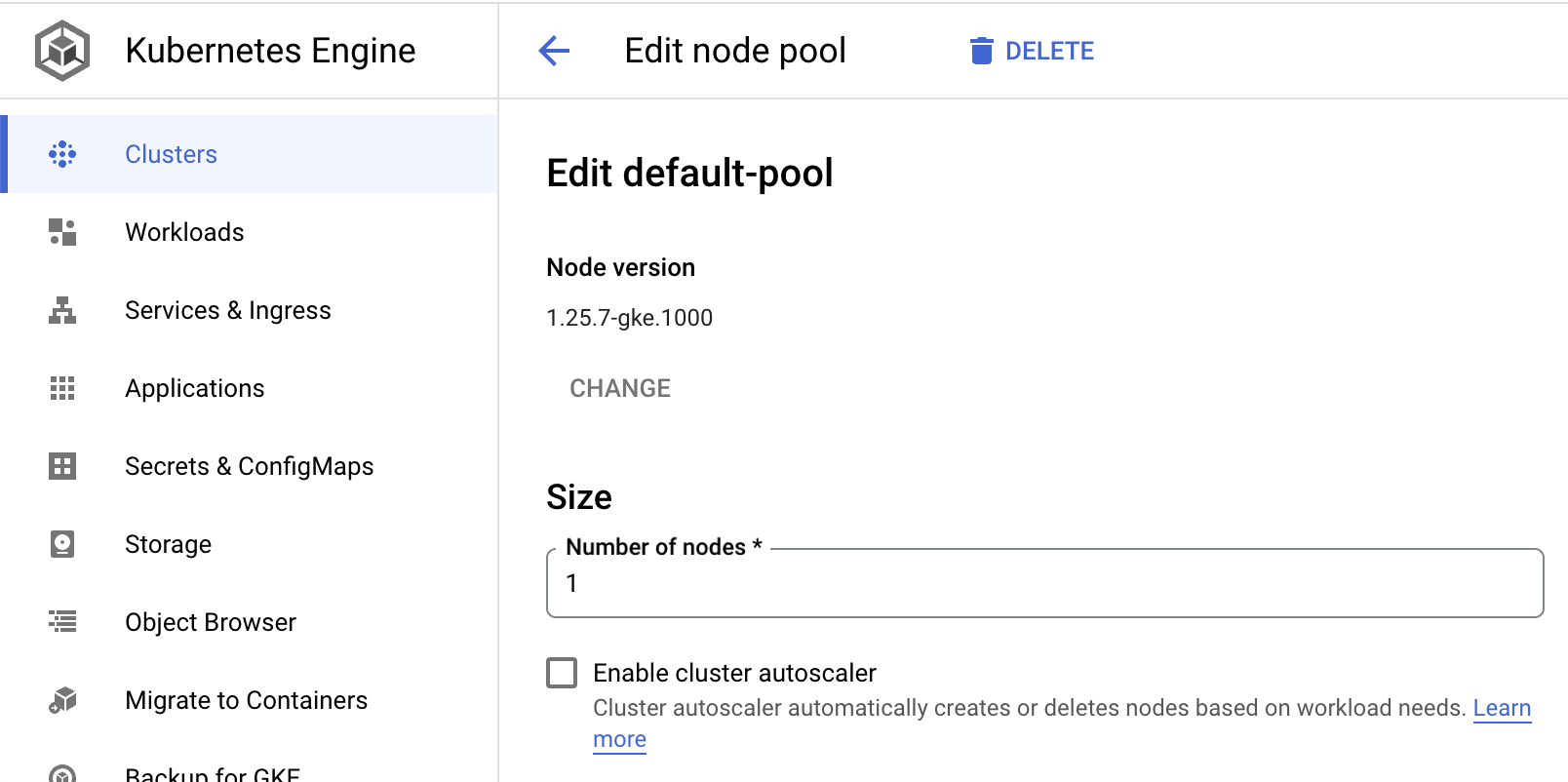

GKE default node pool configuration "Enable cluster autoscaler".

In EKS, the cluster autoscaler usually runs inside the cluster and, when CAST AI is in use, is scaled to 0 replicas. To fall back to it manually, scale the cluster autoscaler to 1 replica.

Enabling the cluster autoscaler again will reinflate the Auto Scaling Group or node pool based on Pending Pods.
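For EKS, the manual fallback can be prepared as a one-line `kubectl scale` call. The sketch below only builds and prints the command so it can be reviewed before it is run. It assumes the autoscaler runs as a Deployment named `cluster-autoscaler` in the `kube-system` namespace, which is a common default but may differ in your cluster; the helper name is hypothetical.

```python
import shlex


def scale_autoscaler_command(replicas, namespace="kube-system",
                             deployment="cluster-autoscaler"):
    """Build a kubectl command that scales the cluster autoscaler Deployment.

    The namespace and Deployment name are assumptions; adjust them to your cluster.
    """
    return [
        "kubectl", "--namespace", namespace,
        "scale", "deployment", deployment,
        f"--replicas={replicas}",
    ]


command = scale_autoscaler_command(1)
print(shlex.join(command))
# To apply it from Python instead of copying it to a shell:
#   import subprocess; subprocess.run(command, check=True)
```

Calling `scale_autoscaler_command(0)` produces the reverse command for when CAST AI is operational again.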

Fallback when Node Templates are in use

Some workloads have placement logic that uses NodeAffinity or NodeSelector to require nodes with specific labels, such as "mycompany=spark-app" or "pool=gpu".

To satisfy these placement requirements, users set up Node Templates. However, if you need to fall back to node pools or Auto Scaling groups, you need to configure labels similar to those used on the Node Templates. As in the above scenario, the cluster autoscaler should be enabled for these node pools or ASGs.
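To check that a fallback node pool or ASG carries the labels a workload requires, a comparison along these lines may help. It is a minimal sketch that covers only exact-match `nodeSelector` labels; NodeAffinity expressions with operators such as `In` or `Exists` are out of scope. The function name and the node group data are hypothetical.

```python
def missing_labels(node_selector, node_group_labels):
    """Return the selector entries that the node group's labels do not satisfy."""
    return {
        key: value
        for key, value in node_selector.items()
        if node_group_labels.get(key) != value
    }


# Hypothetical workload selector and fallback node group labels.
spark_selector = {"mycompany": "spark-app"}
fallback_groups = {
    "spark-fallback": {"mycompany": "spark-app"},
    "gpu-fallback": {"pool": "gpu"},
}

for name, labels in fallback_groups.items():
    gaps = missing_labels(spark_selector, labels)
    print(name, "-> OK" if not gaps else f"-> missing {gaps}")
```

An empty result means Pods with that selector could be scheduled on nodes from the group, as far as labels are concerned.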

Automatic

Automatic fallback logic assumes that the cluster autoscaler is always on, but it works with a delay. This setting prioritizes the Nodes CAST AI creates, but if the platform becomes unable to add capacity, the cluster autoscaler resumes its Node creation abilities.

Each Node Template would have a matching fallback ASG created to align with corresponding Node Template selectors as described in the "Fallback when Node Templates are in use" section above.

Modifying or setting up a fallback autoscaler

Add extra flags to the container command section:

--new-pod-scale-up-delay=600s – to configure a global delay.

If setting up a secondary cluster autoscaler:

--leader-elect-resource-name=cluster-autoscaler-fallback – lease name to avoid duplicating the legacy autoscaler.

Make sure the cluster role used in the fallback cluster autoscaler's RBAC permissions allows access to the new lease:

    - apiGroups:
      - coordination.k8s.io
      resourceNames:
      - cluster-autoscaler
      - cluster-autoscaler-fallback
      resources:
      - leases
      verbs:
      - get
      - update

Example of a fallback autoscaler configuration:

    spec:
      containers:
      - command:
        - ./cluster-autoscaler
        - --v=4
        - --leader-elect-resource-name=cluster-autoscaler-fallback
        - --new-pod-scale-up-delay=600s
        - --stderrthreshold=info
        - --cloud-provider=aws
        - --skip-nodes-with-local-storage=false
        - --expander=least-waste
        - --node-group-auto-discovery=asg:tag=k8s.io/cluster-autoscaler/enabled,k8s.io/cluster-autoscaler/v1
        image: registry.k8s.io/autoscaling/cluster-autoscaler:v1.22.2
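The effect of `--new-pod-scale-up-delay=600s` can be pictured with a small model: the fallback autoscaler does not consider Pods younger than the delay for scale-up, which leaves CAST AI its usual 60-120 seconds to react first. The sketch below is an illustration of that rule only, not the cluster autoscaler's actual code; the function name and Pod ages are hypothetical.

```python
FALLBACK_DELAY_SECONDS = 600  # matches --new-pod-scale-up-delay=600s


def fallback_candidates(pending_pod_ages, delay_seconds=FALLBACK_DELAY_SECONDS):
    """Return names of Pending Pods old enough for the fallback autoscaler to act on.

    pending_pod_ages maps Pod name -> seconds the Pod has existed.
    """
    return sorted(
        name for name, age in pending_pod_ages.items() if age >= delay_seconds
    )


# Hypothetical Pending Pods: one within CAST AI's normal reaction window,
# one that has waited longer than the configured delay.
ages = {"api": 90, "worker": 640}
print(fallback_candidates(ages))
```

While CAST AI is healthy, Pods rarely reach the delay, so the fallback autoscaler stays idle. A shorter delay makes the fallback react sooner but increases the chance that both autoscalers add Nodes for the same Pods.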

Spot Drought Testing

You can use our spot drought tool and runbook to understand how your workloads behave during a mass spot interruption event, or how the autoscaler responds to a lack of spot inventory.

Spot Drought Tool

The spot drought tool required to run this test is available on our GitHub at the following link:

Spot Drought Runbook:

Objective:

To assess the performance of applications on a test cluster during a simulated drought of spot instances.

Prerequisites:

Test Cluster: A cluster with a significant scale and real workloads running on spot instances. This will be used for the simulation.

Python Environment: Ensure you have Python installed along with the requests library.

API Key: Make sure you have a full-access CAST AI API key ready. This can be created in the CAST AI console.

Organization ID: Your CAST AI Organization ID.

Cluster ID: The cluster ID for the target cluster.

Script: The CAST AI Spot Drought Tool provided above.

Steps:

Preparing for the Simulation:

- Backup: Ensure you have taken necessary backups of your cluster configurations and any important data. This is crucial in case you need to roll back to a previous state.

- Set Up the Script:

- Open the provided Python script in your terminal or preferred IDE.

- Create a CASTAI_API_KEY environment variable in your terminal, or update the castai_api_key variable with your CAST AI API key.

- Update the organization_id variable with your CAST AI organization ID.

- Update the cluster_id variable with the ID of the target cluster.
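Before running the tool, it can be worth confirming that all three values are set. The following pre-flight check is a sketch only and is not taken from the CAST AI script: it reuses the `CASTAI_API_KEY`, `castai_api_key`, `organization_id`, and `cluster_id` names mentioned above, while the function name and placeholder values are hypothetical.

```python
import os


def resolve_settings(castai_api_key="", organization_id="", cluster_id="", env=None):
    """Collect the three required settings, preferring CASTAI_API_KEY from the environment."""
    env = os.environ if env is None else env
    settings = {
        "castai_api_key": env.get("CASTAI_API_KEY") or castai_api_key,
        "organization_id": organization_id,
        "cluster_id": cluster_id,
    }
    missing = [name for name, value in settings.items() if not value]
    if missing:
        raise ValueError("missing settings: " + ", ".join(missing))
    return settings


# Placeholder values for illustration; a fake environment is passed in
# so the example does not depend on the real shell environment.
settings = resolve_settings(
    organization_id="example-org-id",
    cluster_id="example-cluster-id",
    env={"CASTAI_API_KEY": "example-key"},
)
print(sorted(settings))
```

Failing early on an empty value avoids sending requests with a blank key or ID.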

Minimize Spot Instances Availability:

- Run the Python script in interactive mode:

python castai_spot_drought.py

- You should see a list of options to choose from.

- Choose "1: Add to blacklist" to restrict the spot instances available to your test cluster.

- Choose "2: Get blacklist" to retrieve the blacklist and confirm that all instance families were added to it.

Run the Interrupt Simulation:

- Using the Node List in the CAST AI console, identify a set of spot nodes (25% or more of the nodes in the cluster) and simulate an interruption using the three-dot menu on each node’s row.

- Ensure that the interruption takes place and monitor the immediate effects on the cluster.

- The response from the CAST AI autoscaler will depend on what policies are enabled, and what features are selected in your node templates.

- We recommend performing this test with the Spot Fallback feature enabled in your node templates.
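To work out how many spot nodes to interrupt for the 25% guideline above, a small helper such as the following can be used. It is a sketch under simple assumptions: node names are copied by hand from the Node List, the target is rounded up, and the helper name and sample node names are hypothetical.

```python
import math


def nodes_to_interrupt(spot_node_names, total_node_count, fraction=0.25):
    """Pick enough spot nodes to cover `fraction` of all nodes in the cluster."""
    target = math.ceil(total_node_count * fraction)
    if target > len(spot_node_names):
        raise ValueError(
            f"need {target} spot nodes but only {len(spot_node_names)} are available"
        )
    # Sorted so that repeated runs pick the same nodes.
    return sorted(spot_node_names)[:target]


# Hypothetical cluster: 10 nodes in total, 6 of them spot.
spot_nodes = ["spot-a", "spot-b", "spot-c", "spot-d", "spot-e", "spot-f"]
print(nodes_to_interrupt(spot_nodes, total_node_count=10))
```

With 10 nodes, 25% is 2.5, which rounds up to three nodes to interrupt.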

Measure and Test Outcomes:

- Service Downtime: Monitor the services running on your cluster. Check if any service goes down or becomes unresponsive during the simulation.

- Error Responses: Track error rates and types. Note down any spikes in errors or any new types of errors that appear during the simulation.

- Latency: Measure the response time of your applications. Compare the latency during the simulation with the usual latency figures to see any significant differences.

- Other Metrics: Based on your application and infrastructure, you might want to monitor other metrics like CPU usage, memory usage, network bandwidth, etc.
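The comparison against usual figures can be made concrete with a few lines of arithmetic. This sketch assumes you have exported latency samples and request/error counts for a baseline window and for the simulation window; all numbers below are made up for illustration, and the function names are hypothetical.

```python
from statistics import mean


def percent_change(baseline, observed):
    """Relative change from baseline to observed, in percent."""
    return (observed - baseline) / baseline * 100.0


def error_rate(errors, requests):
    """Fraction of requests that failed."""
    return errors / requests


# Made-up sample data: latency in milliseconds, plus error and request counts.
baseline_latency_ms = [100.0, 110.0, 90.0]
drought_latency_ms = [150.0, 160.0, 140.0]

latency_delta = percent_change(mean(baseline_latency_ms), mean(drought_latency_ms))
baseline_errors = error_rate(errors=5, requests=1000)
drought_errors = error_rate(errors=20, requests=1000)

print(f"mean latency change: {latency_delta:+.1f}%")
print(f"error rate: {baseline_errors:.2%} -> {drought_errors:.2%}")
```

Mean latency is used here for brevity; percentiles such as p95 or p99 are usually more informative for user-facing services, provided your monitoring exposes them.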

Rollback:

Once you have captured all the necessary data and metrics:

- Return to your terminal, or run the script again in interactive mode:

python castai_spot_drought.py

- Choose "3: Remove from blacklist" to lift the restrictions and bring the cluster back to its normal state.

- Monitor the cluster to ensure it's back to normal operation and all the spot instances are available again.

Conclusion:

After you've completed the simulation, review the captured data and metrics. This will give you insights into how your applications and infrastructure behave under spot instance drought conditions. Use this information to make any necessary adjustments to your setup for better fault-tolerance and performance.
